Sky voice actor says nobody ever compared her to ScarJo before OpenAI drama

Scarlett Johansson attends the Golden Heart Awards in 2023.

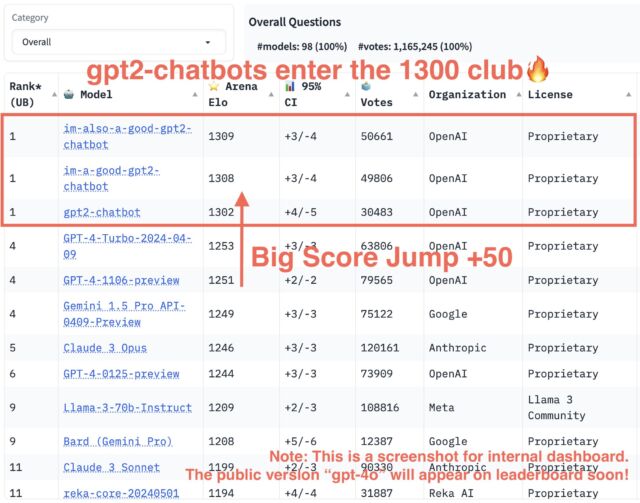

OpenAI is sticking to its story that it never intended to copy Scarlett Johansson’s voice when seeking an actor for ChatGPT’s “Sky” voice mode.

The company provided The Washington Post with documents and recordings clearly meant to support OpenAI CEO Sam Altman’s defense against Johansson’s claims that Sky was made to sound “eerily similar” to her critically acclaimed voice acting performance in the sci-fi film Her.

Johansson has alleged that OpenAI hired a soundalike to steal her likeness and confirmed that she declined to provide the Sky voice. Experts have said that Johansson has a strong case should she decide to sue OpenAI for violating her right of publicity, which gives the actress exclusive rights to the commercial use of her likeness.

In OpenAI’s defense, The Post reported that the company’s voice casting call flier did not seek a “clone of actress Scarlett Johansson,” and initial voice test recordings of the unnamed actress hired to voice Sky showed that her “natural voice sounds identical to the AI-generated Sky voice.” Because of this, OpenAI has argued that “Sky’s voice is not an imitation of Scarlett Johansson.”

What’s more, an agent for the unnamed actress cast as Sky (both were granted anonymity to protect the actress’s safety) confirmed to The Post that her client said she was never directed to imitate either Johansson or her character in Her. She simply used her own voice and got the gig.

The agent also provided a statement from her client that claimed that she had never been compared to Johansson before the backlash started.

This all “feels personal,” the voice actress said, “being that it’s just my natural voice and I’ve never been compared to her by the people who do know me closely.”

However, OpenAI apparently reached out to Johansson after casting the Sky voice actress. During outreach last September and again this month, OpenAI seemed to want to substitute the Sky voice actress’s voice with Johansson’s voice—which is ironically what happened when Johansson got cast to replace the original actress hired to voice her character in Her.

Altman has clarified that timeline in a statement provided to Ars that emphasized that the company “never intended” Sky to sound like Johansson. Instead, OpenAI tried to snag Johansson to voice the part after realizing—seemingly just as Her director Spike Jonze did—that the voice could potentially resonate with more people if Johansson did it.

“We are sorry to Ms. Johansson that we didn’t communicate better,” Altman’s statement said.

Johansson has not yet publicly indicated that she intends to sue OpenAI over this supposed miscommunication. But if she did, legal experts told The Post and Reuters that her case would be strong because of legal precedent set in high-profile lawsuits brought by singers Bette Midler and Tom Waits that blocked companies from misappropriating their voices.

Why Johansson could win if she sued OpenAI

In 1988, Bette Midler sued Ford Motor Company for hiring a soundalike to perform Midler’s song “Do You Want to Dance?” in a commercial intended to appeal to “young yuppies” by referencing popular songs from their college days. Midler had declined to do the commercial and accused Ford of exploiting her voice to endorse its product without her consent.

This groundbreaking case established that a distinctive voice like Midler’s cannot be deliberately imitated to sell a product. It did not matter that the singer used in the commercial had used her natural singing voice, because “a number of people” told Midler that the performance “sounded exactly” like her.

Midler’s case set a powerful precedent preventing companies from appropriating parts of performers’ identities—essentially stopping anyone from stealing a well-known voice that otherwise could not be bought.

“A voice is as distinctive and personal as a face,” the court ruled, concluding that “when a distinctive voice of a professional singer is widely known and is deliberately imitated in order to sell a product, the sellers have appropriated what is not theirs.”

Like in Midler’s case, Johansson could argue that plenty of people think that the Sky voice sounds like her and that OpenAI’s product might be more popular if it had a Her-like voice mode. Comics on popular late-night shows joked about the similarity, including Johansson’s husband, Saturday Night Live comedian Colin Jost. And other people close to Johansson agreed that Sky sounded like her, Johansson has said.

Johansson’s case seems to differ from Midler’s mainly in the casting timeline that OpenAI is working hard to defend.

OpenAI seems to think that because Johansson was offered the gig after the Sky voice actor was cast, she cannot claim that the company hired the other actor after she declined.

The timeline may not matter as much as OpenAI may think, though. In the 1990s, Tom Waits cited Midler’s case when he won a $2.6 million lawsuit after Frito-Lay hired a Waits impersonator to perform a song that “echoed the rhyming word play” of a Waits song in a Doritos commercial. Waits won his suit even though Frito-Lay never attempted to hire the singer before casting the soundalike.
