In the room where it happened: When NASA nearly gave Boeing all the crew funding

The story behind the story —

“In all my years of working with Boeing I never saw them sign up for additional work for free.”

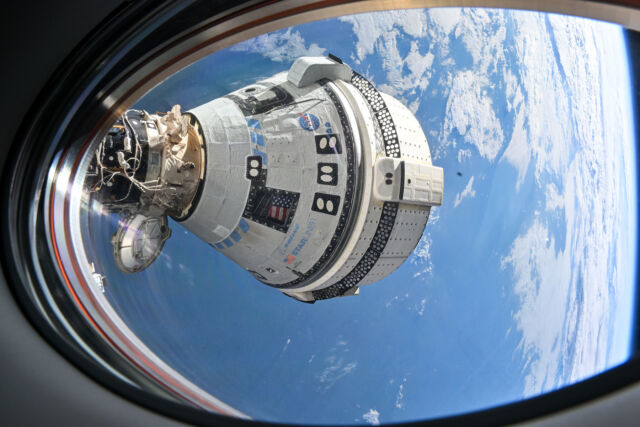

But for a fateful meeting in the summer of 2014, Crew Dragon probably never would have happened.

SpaceX

This is an excerpt from Chapter 11 of the book REENTRY: SpaceX, Elon Musk and the Reusable Rockets that Launched a Second Space Age by our own Eric Berger. The book will be published on September 24, 2024. This excerpt describes a fateful meeting 10 years ago at NASA Headquarters in Washington, DC, where the space agency’s leaders met to decide which companies should be awarded billions of dollars to launch astronauts into orbit.

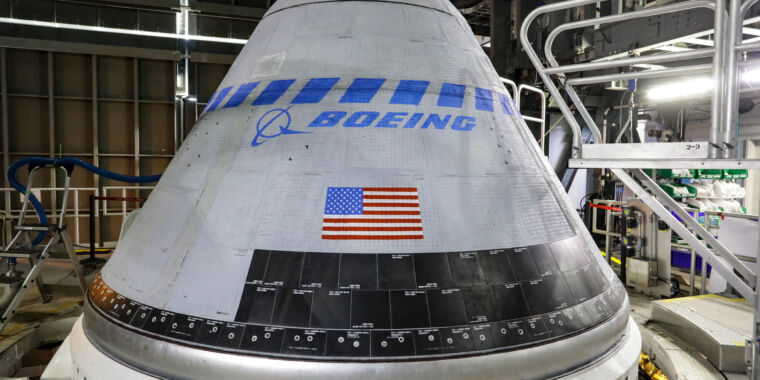

In the early 2010s, NASA’s Commercial Crew competition boiled down to three players: Boeing, SpaceX, and a Colorado-based company building a spaceplane, Sierra Nevada Corporation. Each had its own advantages. Boeing was the blue blood, with decades of spaceflight experience. SpaceX had already built a capsule, Dragon. And some NASA insiders nostalgically loved Sierra Nevada’s Dream Chaser spaceplane, which mimicked the shuttle’s winged design.

This competition neared a climax in 2014 as NASA prepared to winnow the field to one company, or at most two, to move from the design phase into actual development. In May of that year Musk revealed his Crew Dragon spacecraft to the world with a characteristically showy event at the company’s headquarters in Hawthorne. As lights flashed and a smoke machine vented, Musk quite literally raised a curtain on a black-and-white capsule. He was most proud to reveal how Dragon would land. Never before had a spacecraft come back from orbit under anything but parachutes or gliding on wings. Not so with the new Dragon. It had powerful thrusters, called SuperDracos, that would allow it to land under its own power.

“You’ll be able to land anywhere on Earth with the accuracy of a helicopter,” Musk bragged. “Which is something that a modern spaceship should be able to do.”

A few weeks later I had an interview with John Elbon, a long-time engineer at Boeing who managed the company’s commercial program. As we talked, he tut-tutted SpaceX’s performance to date, noting its handful of Falcon 9 launches a year and inability to fly at a higher cadence. As for Musk’s little Dragon event, Elbon was dismissive.

“We go for substance,” Elbon told me. “Not pizzazz.”

Elbon’s confidence was justified. That spring the companies were finalizing bids to develop a spacecraft and fly six operational missions to the space station. These contracts were worth billions of dollars. Each company told NASA how much it needed for the job, and if selected, would receive a fixed price award for that amount. Boeing, SpaceX, and Sierra Nevada wanted as much money as they could get, of course. But each had an incentive to keep their bids low, as NASA had a finite budget for the program. Boeing had a solution, telling NASA it needed the entire Commercial Crew budget to succeed. Because a lot of decision-makers believed that only Boeing could safely fly astronauts, the company’s gambit very nearly worked.

Scoring the bids

The three competitors submitted initial bids to NASA in late January 2014, and after about six months of evaluations and discussions with the “source evaluation board,” submitted their final bids in July. During this initial round of judging, subject-matter experts scored the proposals and gathered to make their ratings. Sierra Nevada was eliminated because its overall scores were lower and its proposed cost was not low enough to justify remaining in the competition. This left Boeing and SpaceX, with likely only one winner.

“We really did not have the budget for two companies at the time,” said Phil McAlister, the NASA official at the agency’s headquarters in Washington overseeing the Commercial Crew program. “No one thought we were going to award two. I would always say, ‘One or more,’ and people would roll their eyes at me.”

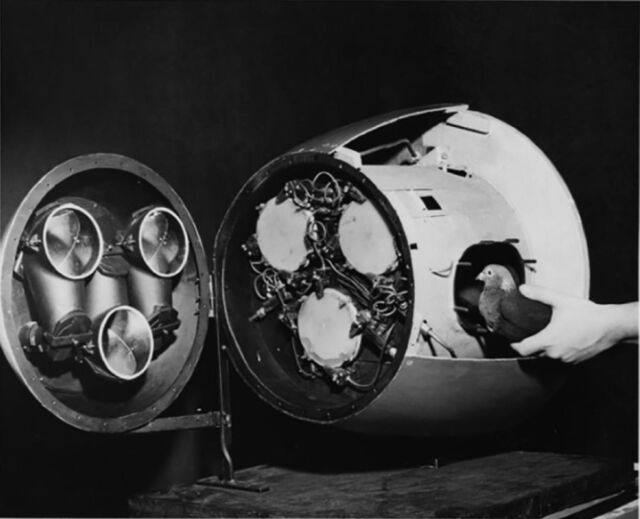

Boeing’s John Elbon, center, is seen in Orbiter Processing Facility-3 at NASA’s Kennedy Space Center in Florida in 2012.

NASA

The members of the evaluation board scored the companies based on three factors. Price was the most important consideration, given NASA’s limited budget. This was followed by “mission suitability,” and finally, “past performance.” These latter two factors, combined, were about equally weighted to price. SpaceX dominated Boeing on price.

Boeing asked for $4.2 billion, 60 percent more than SpaceX’s bid of $2.6 billion. The second category, mission suitability, assessed whether a company could meet NASA’s requirements and actually safely fly crew to and from the station. For this category, Boeing received an “excellent” rating, above SpaceX’s “very good.” The third factor, past performance, evaluated a company’s recent work. Boeing received a rating of “very high,” whereas SpaceX received a rating of “high.”

While this makes it appear as though the bids were relatively even, McAlister said the score differences in mission suitability and past performance were, in fact, modest. It was a bit like grades in school. SpaceX scored something like an 88 and got a B, whereas Boeing scored a 91 and got an A. Because of the significant difference in price, McAlister said, the source evaluation board assumed SpaceX would win the competition. He was thrilled, because he figured this meant that NASA would have to pick two companies: SpaceX based on price, and Boeing due to its slightly higher technical score. He wanted competition to spur both of the companies on.
