US can’t deport hate speech researcher for protected speech, lawsuit says

On Monday, US officials must explain what steps they took to enforce shocking visa bans.

Imran Ahmed, the founder of the Center for Countering Digital Hate (CCDH), giving evidence to joint committee seeking views on how to improve the draft Online Safety Bill designed to tackle social media abuse. Credit: House of Commons – PA Images / Contributor | PA Images

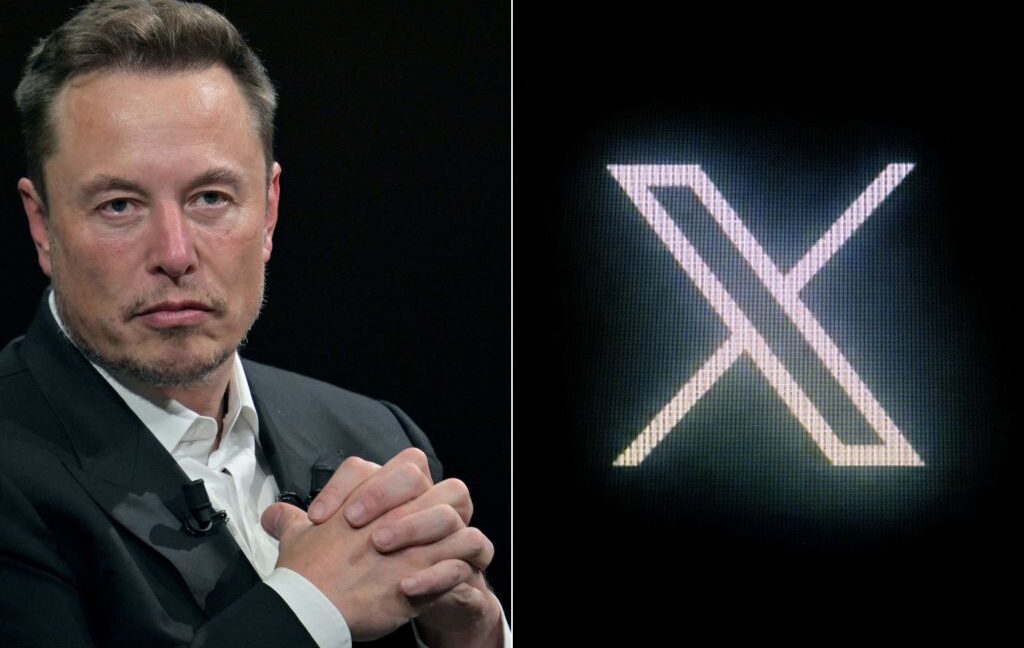

The biggest thorn in Imran Ahmed’s side used to be Elon Musk, who made the hate speech researcher one of his earliest legal foes during his Twitter takeover.

Now, it’s the Trump administration, which planned to deport Ahmed, a legal permanent resident, just before Christmas. It would then ban him from returning to the United States, where he lives with his wife and young child, both US citizens.

After suing US officials to block any attempted arrest or deportation, Ahmed was quickly granted a temporary restraining order on Christmas Day. Ahmed had successfully argued that he risked irreparable harm without the order, alleging that Trump officials continue “to abuse the immigration system to punish and punitively detain noncitizens for protected speech and silence viewpoints with which it disagrees” and confirming that his speech had been chilled.

US officials are attempting to sanction Ahmed seemingly due to his work as the founder of a British-American non-governmental organization, the Center for Countering Digital Hate (CCDH).

“An egregious act of government censorship”

In a shocking announcement last week, Secretary of State Marco Rubio confirmed that five individuals—described as “radical activists” and leaders of “weaponized NGOs”—would face US visa bans since “their entry, presence, or activities in the United States have potentially serious adverse foreign policy consequences” for the US.

Nobody was named in that release, but Under Secretary for Public Diplomacy, Sarah Rogers, later identified the targets in an X post she currently has pinned to the top of her feed.

Alongside Ahmed, sanctioned individuals included former European commissioner for the internal market, Thierry Breton; the leader of UK-based Global Disinformation Index (GDI), Clare Melford; and co-leaders of Germany-based HateAid, Anna-Lena von Hodenberg and Josephine Ballon. A GDI spokesperson told The Guardian that the visa bans are “an authoritarian attack on free speech and an egregious act of government censorship.”

While all targets were scrutinized for supporting some of the European Union’s strictest tech regulations, including the Digital Services Act (DSA), Ahmed was further accused of serving as a “key collaborator with the Biden Administration’s effort to weaponize the government against US citizens.” As evidence of Ahmed’s supposed threat to US foreign policy, Rogers cited a CCDH report flagging Robert F. Kennedy, Jr. among the so-called “disinformation dozen” driving the most vaccine hoaxes on social media.

Neither official has really made it clear what exact threat these individuals pose if operating from within the US, as opposed to from anywhere else in the world. Echoing Rubio’s press release, Rogers wrote that the sanctions would reinforce a “red line,” supposedly ending “extraterritorial censorship of Americans” by targeting the “censorship-NGO ecosystem.”

For Ahmed’s group, specifically, she pointed to Musk’s failed lawsuit, which accused CCDH of illegally scraping Twitter—supposedly, it offered evidence of extraterritorial censorship. That lawsuit surfaced “leaked documents” allegedly showing that CCDH planned to “kill Twitter” by sharing research that could be used to justify big fines under the DSA or the UK’s Online Safety Act. Following that logic, seemingly any group monitoring misinformation or sharing research that lawmakers weigh when implementing new policies could be maligned as seeking mechanisms to censor platforms.

Notably, CCDH won its legal fight with Musk after a judge mocked X’s legal argument as “vapid” and dismissed the lawsuit as an obvious attempt to punish CCDH for exercising free speech that Musk didn’t like.

In his complaint last week, Ahmed alleged that US officials were similarly encroaching on his First Amendment rights by unconstitutionally wielding immigration law as “a tool to punish noncitizen speakers who express views disfavored by the current administration.”

Both Rubio and Rogers are named as defendants in the suit, as well as Attorney General Pam Bondi, Secretary of Homeland Security Kristi Noem, and Acting Director of US Immigration and Customs Enforcement Todd Lyons. In a loss, officials would potentially not only be forced to vacate Rubio’s actions implementing visa bans, but also possibly stop furthering a larger alleged Trump administration pattern of “targeting noncitizens for removal based on First Amendment protected speech.”

Lawsuit may force Rubio to justify visa bans

For Ahmed, securing the temporary restraining order was urgent, as he was apparently the only target currently located in the US when Rubio’s announcement dropped. In a statement provided to Ars, Ahmed’s attorney, Roberta Kaplan, suggested that the order was granted “so quickly because it is so obvious that Marco Rubio and the other defendants’ actions were blatantly unconstitutional.”

Ahmed founded CCDH in 2019, hoping to “call attention to the enormous problem of digitally driven disinformation and hate online.” According to the suit, he became particularly concerned about antisemitism online while living in the United Kingdom in 2016, having watched “the far-right party, Britain First,” launching “the dangerous conspiracy theory that the EU was attempting to import Muslims and Black people to ‘destroy’ white citizens.” That year, a Member of Parliament and Ahmed’s colleague, Jo Cox, was “shot and stabbed in a brutal politically motivated murder, committed by a man who screamed ‘Britain First’” during the attack. That tragedy motivated Ahmed to start CCDH.

He moved to the US in 2021 and was granted a green card in 2024, starting his family and continuing to lead CCDH efforts monitoring not just Twitter/X, but also Meta platforms, TikTok, and, more recently, AI chatbots. In addition to supporting the DSA and UK’s Online Safety Act, his group has supported US online safety laws and Section 230 reforms intended to protect kids online.

“Mr. Ahmed studies and engages in civic discourse about the content moderation policies of major social media companies in the United States, the United Kingdom, and the European Union,” his lawsuit said. “There is no conceivable foreign policy impact from his speech acts whatsoever.”

In his complaint, Ahmed alleged that Rubio has so far provided no evidence that Ahmed poses such a great threat that he must be removed. He argued that “applicable statutes expressly prohibit removal based on a noncitizen’s ‘past, current, or expected beliefs, statements, or associations.’”

According to DHS guidance from 2021 cited in the suit, “A noncitizen’s exercise of their First Amendment rights … should never be a factor in deciding to take enforcement action.”

To prevent deportation based solely on viewpoints, Rubio was supposed to notify chairs of the House Foreign Affairs, Senate Foreign Relations, and House and Senate Judiciary Committees, to explain what “compelling US foreign policy interest” would be compromised if Ahmed or others targeted with visa bans were to enter the US. But there’s no evidence Rubio took those steps, Ahmed alleged.

“The government has no power to punish Mr. Ahmed for his research, protected speech, and advocacy, and Defendants cannot evade those constitutional limitations by simply claiming that Mr. Ahmed’s presence or activities have ‘potentially serious adverse foreign policy consequences for the United States,’” a press release from his legal team said. “There is no credible argument for Mr. Ahmed’s immigration detention, away from his wife and young child.”

X lawsuit offers clues to Trump officials’ defense

To some critics, it looks like the Trump administration is going after CCDH in order to take up the fight that Musk already lost. In his lawsuit against CCDH, Musk’s X echoed US Senator Josh Hawley (R-Mo.) by suggesting that CCDH was a “foreign dark money group” that allowed “foreign interests” to attempt to “influence American democracy.” It seems likely that US officials will put forward similar arguments in their CCDH fight.

Rogers’ X post offers some clues that the State Department will be mining Musk’s failed litigation to support claims of what it calls a “global censorship-industrial complex.” What she detailed suggested that the Trump administration plans to argue that NGOs like CCDH support strict tech laws, then conduct research bent on using said laws to censor platforms. That logic seems to ignore the reality that NGOs cannot control what laws get passed or enforced, Breton suggested in his first TV interview after his visa ban was announced.

Breton, whom Rogers villainized as the “mastermind” behind the DSA, urged EU officials to do more now to defend their tough tech regulations, which Le Monde noted passed with overwhelming bipartisan support and very little far-right resistance, and to fight the visa bans, Bloomberg reported.

“They cannot force us to change laws that we voted for democratically just to please [US tech companies],” Breton said. “No, we must stand up.”

While EU officials seemingly drag their feet, Ahmed is hoping that a judge will declare that all the visa bans that Rubio announced are unconstitutional. The temporary restraining order indicates there will be a court hearing Monday at which Ahmed will learn precisely “what steps Defendants have taken to impose visa restrictions and initiate removal proceedings against” him and any others. Until then, Ahmed remains in the dark on why Rubio deemed his presence to carry “potentially serious adverse foreign policy consequences” if he stayed in the US.

Ahmed, who argued that X’s lawsuit sought to chill CCDH’s research and alleged that the US attack seeks to do the same, seems confident that he can beat the visa bans.

“America is a great nation built on laws, with checks and balances to ensure power can never attain the unfettered primacy that leads to tyranny,” Ahmed said. “The law, clear-eyed in understanding right and wrong, will stand in the way of those who seek to silence the truth and empower the bold who stand up to power. I believe in this system, and I am proud to call this country my home. I will not be bullied away from my life’s work of fighting to keep children safe from social media’s harm and stopping antisemitism online. Onward.”
