Man got $2,500 whole-body MRI that found no problems—then had massive stroke

A New York man is suing Prenuvo, a celebrity-endorsed whole-body magnetic resonance imaging (MRI) provider, claiming that the company missed clear signs of trouble in his $2,500 whole-body scan—and if it hadn’t, he could have acted to avert the catastrophic stroke he suffered months later.

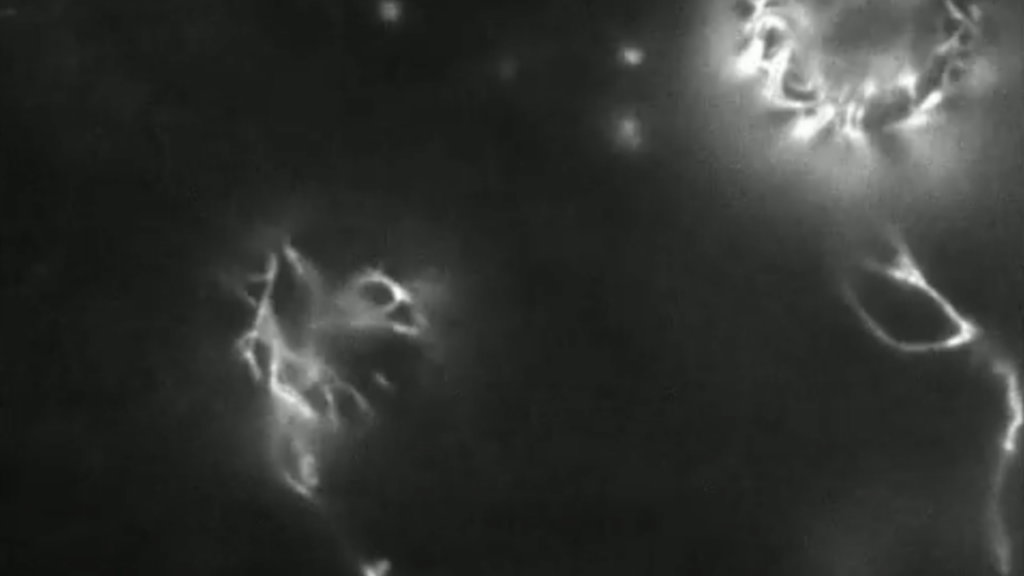

Sean Clifford and his legal team claim that his scan on July 15, 2023, showed a 60 percent narrowing and irregularity in a major artery in his brain—the proximal right middle cerebral artery, a branch of the most common artery involved in acute strokes. But Prenuvo’s reviews of the scan did not flag the finding and otherwise reported everything in his brain looked normal; there was “no adverse finding.” (You can read Prenuvo’s report and see Clifford’s subsequent imaging here.)

Clifford suffered a massive stroke on March 7, 2024. Subsequent imaging found that the narrowing in the proximal right middle cerebral artery had progressed to a complete blockage, causing the stroke. Clifford suffered paralysis of his left hand and leg, general weakness on his left side, vision loss and permanent double vision, anxiety, depression, mood swings, cognitive deficits, speech problems, and permanent difficulties with all daily activities.

He filed his lawsuit against Prenuvo in September 2024 in the New York State Supreme Court. In the lawsuit, he argues that if he had known of the problem, he could have undergone stenting or other minimally invasive measures to prevent the stroke.

Ongoing litigation

In the legal proceedings since, Prenuvo, a California-based company, has tried to limit the damages that Clifford could seek, first by trying to force arbitration and then by trying to apply California laws to the New York case, as California law caps malpractice damages. The company failed on both counts. In a December ruling, a judge also denied Prenuvo’s attempts to shield the radiologist who reviewed Clifford’s scan, William A. Weiner, DO, of East Rockaway, New York.

Notably, Weiner has had his medical license suspended in connection with an auto insurance scheme, in which Weiner was accused of falsifying findings on MRI scans.
