Pass the mayo: Condiment could help improve fusion energy yields

Don’t hold the mayo

Controlling a problematic instability could lead to cheaper inertial fusion.

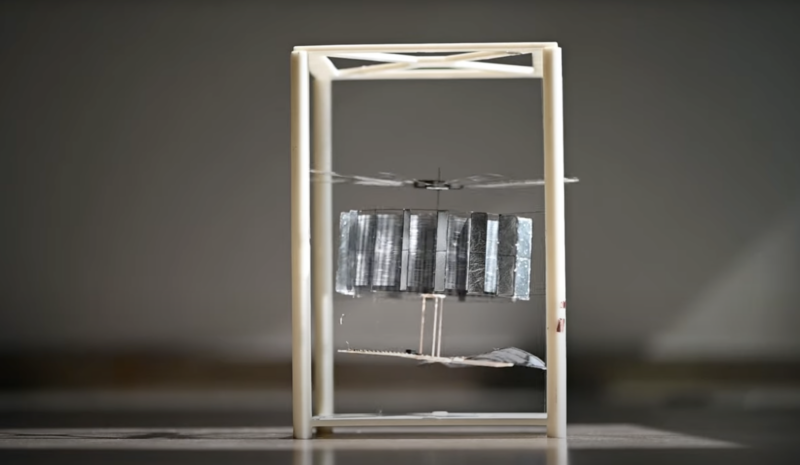

Inertial confinement fusion is one method for generating energy through nuclear fusion, albeit one plagued by all manner of scientific challenges (although progress is being made). Researchers at Lehigh University are attempting to overcome one specific bugbear with this approach by conducting experiments with mayonnaise placed in a rotating figure-eight contraption. They described their most recent findings in a new paper published in the journal Physical Review E with an eye toward increasing energy yields from fusion.

The work builds on prior research in the Lehigh laboratory of mechanical engineer Arindam Banerjee, who focuses on investigating the dynamics of fluids and other materials in response to extremely high acceleration and centrifugal force. In this case, his team was exploring what’s known as the “instability threshold” of elastic/plastic materials. Scientists have debated whether this comes about because of initial conditions, or whether it’s the result of “more local catastrophic processes,” according to Banerjee. The question is relevant to a variety of fields, including geophysics, astrophysics, explosive welding, and yes, inertial confinement fusion.

How exactly does inertial confinement fusion work? As Chris Lee explained for Ars back in 2016:

The idea behind inertial confinement fusion is simple. To get two atoms to fuse together, you need to bring their nuclei into contact with each other. Both nuclei are positively charged, so they repel each other, which means that force is needed to convince two hydrogen nuclei to touch. In a hydrogen bomb, force is generated when a small fission bomb explodes, compressing a core of hydrogen. This fuses to create heavier elements, releasing a huge amount of energy.

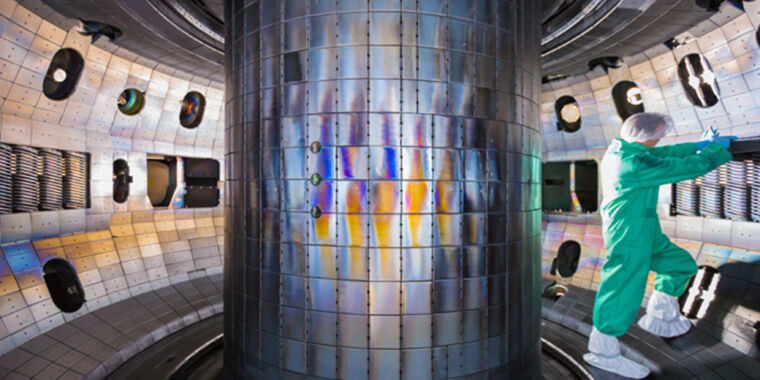

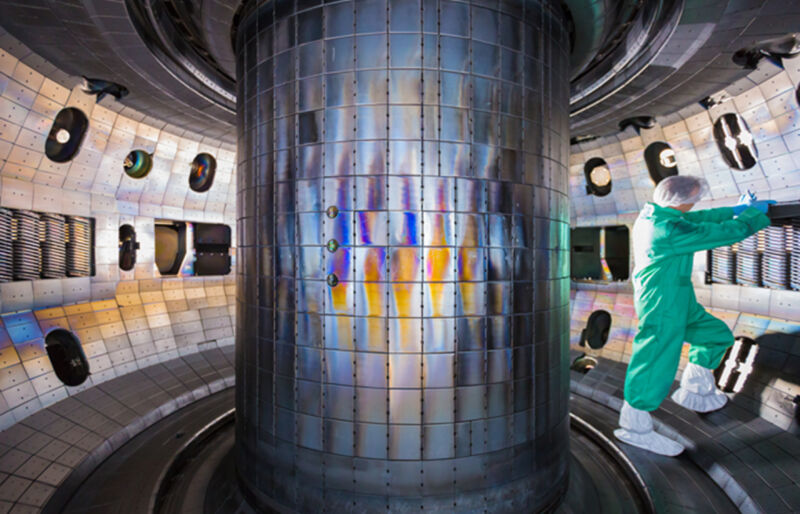

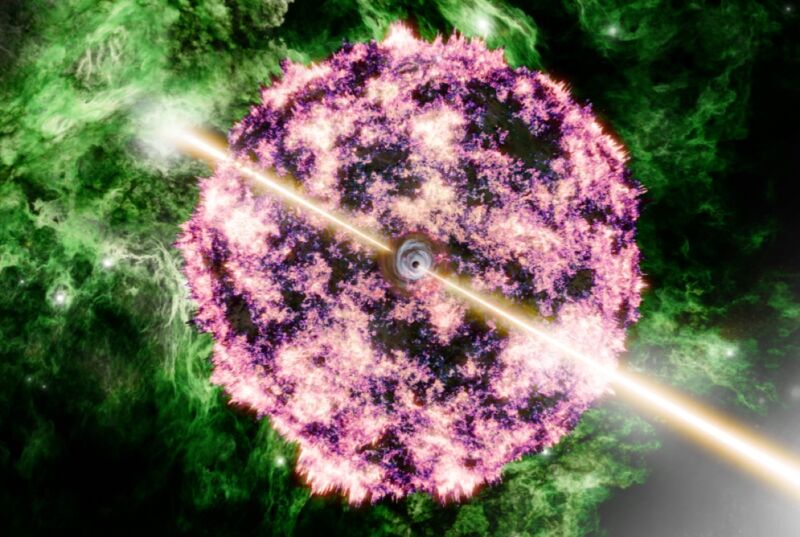

Being killjoys, scientists prefer not to detonate nuclear weapons every time they want to study fusion or use it to generate electricity. Which brings us to inertial confinement fusion. In inertial confinement fusion, the hydrogen core consists of a spherical pellet of hydrogen ice inside a heavy metal casing. The casing is illuminated by powerful lasers, which burn off a large portion of the material. The reaction force from the vaporized material exploding outward causes the remaining shell to implode. The resulting shockwave compresses the center of the core of the hydrogen pellet so that it begins to fuse.

If confinement fusion ended there, the amount of energy released would be tiny. But the energy released due to the initial fusion burn in the center generates enough heat for the hydrogen on the outside of the pellet to reach the required temperature and pressure. So, in the end (at least in computer models), all of the hydrogen is consumed in a fiery death, and massive quantities of energy are released.

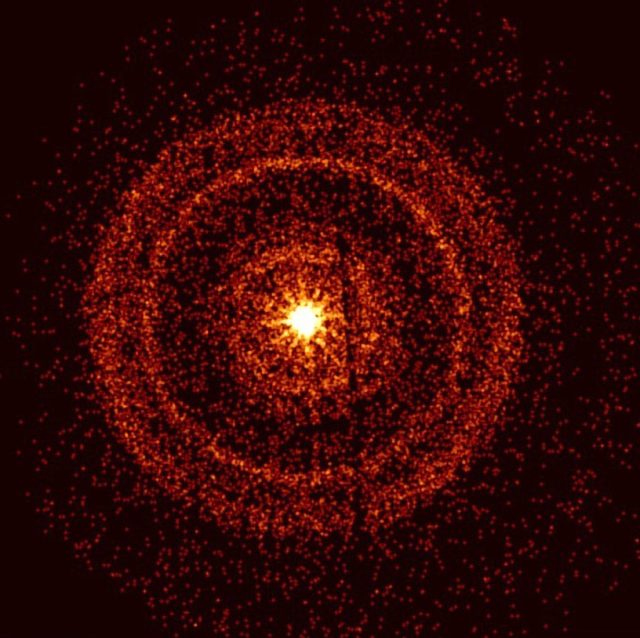

That’s the idea anyway. The problem is that hydrodynamic instabilities tend to form in the plasma state, which in turn reduces energy yields. Banerjee likens it to “two materials [that] penetrate one another like fingers” in the presence of gravity or any accelerating field. The technical term is a Rayleigh-Taylor instability, which occurs at the interface between two materials of different densities when the density and pressure gradients point in opposite directions. Because it’s a non-Newtonian fluid, mayonnaise turns out to be an excellent analog for investigating this instability in accelerated solids, with no need for a lab setup with high temperature and pressure conditions.

“We use mayonnaise because it behaves like a solid, but when subjected to a pressure gradient, it starts to flow,” said Banerjee. “As with a traditional molten metal, if you put a stress on mayonnaise, it will start to deform, but if you remove the stress, it goes back to its original shape. So there’s an elastic phase followed by a stable plastic phase. The next phase is when it starts flowing, and that’s where the instability kicks in.”
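For a rough sense of scale, the textbook fluid version of the Rayleigh-Taylor instability can be sketched in a few lines of Python. This is only the classical linear growth rate for two ideal (inviscid, incompressible) fluids with no surface tension, where a small ripple grows at a rate of sqrt(A·g·k), with A the Atwood number and k the wavenumber of the ripple. It is not the model used in the Lehigh paper and it ignores the elastic and plastic strength that makes mayonnaise interesting here. The function names and the sample numbers are assumptions chosen purely for illustration.

```python
import math


def atwood_number(rho_heavy: float, rho_light: float) -> float:
    """Dimensionless density contrast between the two materials (0 to 1)."""
    if rho_light <= 0 or rho_heavy < rho_light:
        raise ValueError("expected rho_heavy >= rho_light > 0")
    return (rho_heavy - rho_light) / (rho_heavy + rho_light)


def classical_rt_growth_rate(
    rho_heavy: float, rho_light: float, accel: float, wavelength: float
) -> float:
    """Classical linear growth rate (1/s) for ideal fluids: sqrt(A * g * k).

    Assumes no viscosity, no surface tension, and no material strength,
    so it does NOT capture the elastic-plastic threshold in the article.
    """
    if accel < 0 or wavelength <= 0:
        raise ValueError("expected accel >= 0 and wavelength > 0")
    k = 2.0 * math.pi / wavelength  # wavenumber of the interface ripple
    return math.sqrt(atwood_number(rho_heavy, rho_light) * accel * k)


if __name__ == "__main__":
    # Hypothetical values for illustration only (not from the paper):
    # a dense layer over air, 10 g of acceleration, a 6 cm ripple.
    rate = classical_rt_growth_rate(
        rho_heavy=900.0, rho_light=1.2, accel=98.1, wavelength=0.06
    )
    print(f"classical growth rate: {rate:.1f} per second")
```

In this idealized picture, shorter wavelengths and higher accelerations always grow faster. A material with strength, like mayonnaise, can resist that growth below some threshold, which is the regime the experiments probe.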

More mayo, please

2019 video showcasing the rotating wheel Rayleigh-Taylor instability experiment at Lehigh University.

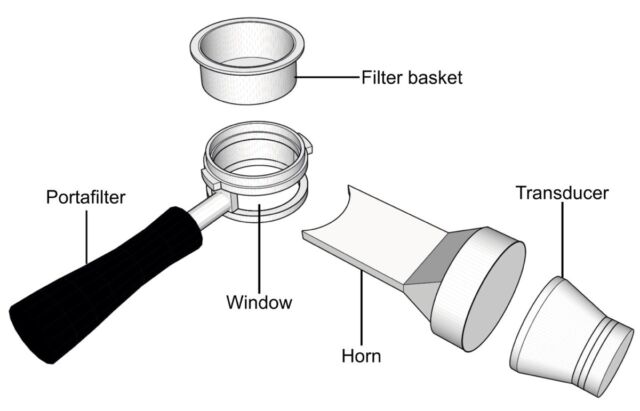

His team’s 2019 experiments involved pouring Hellmann’s Real Mayonnaise (no Miracle Whip for this crew) into a Plexiglas container and then creating wavelike perturbations in the mayo. One experiment involved placing the container on a rotating wheel in the shape of a figure eight and tracking the material with a high-speed camera, using an image processing algorithm to analyze the footage. Their results supported the claim that the instability threshold is dependent on initial conditions, namely amplitude and wavelength.

This latest paper sheds more light on the structural integrity of fusion capsules used in inertial confinement fusion, taking a closer look at the material properties, the amplitude and wavelength conditions, and the acceleration rate of such materials as they hit the Rayleigh-Taylor instability threshold. The more scientists know about the transition from the elastic phase to the stable plastic phase, the better they can control the conditions and maintain either an elastic or plastic phase, avoiding the instability. Banerjee et al. were able to identify the conditions to maintain the elastic phase, which could inform the design of future pellets for inertial confinement fusion.

That said, the mayonnaise experiments are an analog, orders of magnitude away from the real-world conditions of nuclear fusion, which Banerjee readily acknowledges. He is nonetheless hopeful that future research will improve the predictability of just what happens within the pellets in their high-temperature, high-pressure environments. “We’re another cog in this giant wheel of researchers,” he said. “And we’re all working towards making inertial fusion cheaper and therefore, attainable.”

DOI: Physical Review E, 2024. 10.1103/PhysRevE.109.055103.
