Brazil judge seizes cash from Starlink to cover fine imposed on Elon Musk’s X

Forced withdrawal —

Starlink and X treated as one economic group, forcing both to pay X fines.

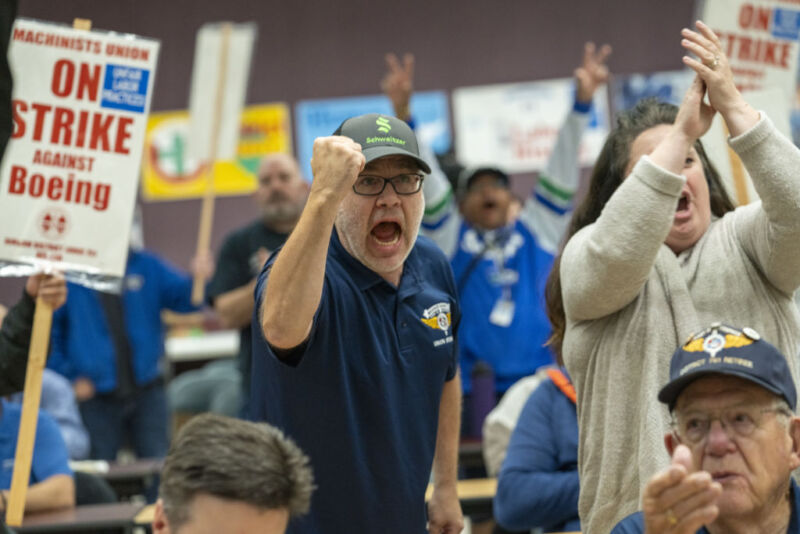

Supporters of former President Jair Bolsonaro participate in an event in the central area of Sao Paulo, Brazil, on September 7, 2024. Bolsonaro backers called for the impeachment of Supreme Court Justice Alexandre de Moraes.

Getty Images | NurPhoto

Brazil seized about $2 million from a Starlink bank account and another $1.3 million from X to collect on fines issued to Elon Musk’s social network, the country’s Supreme Court announced Friday.

Supreme Court Judge Alexandre de Moraes previously froze the accounts of both companies, treating them as the same de facto economic group because both are controlled by Musk. The Starlink and X bank accounts were unfrozen last week after the money transfers ordered by de Moraes.

Two banks carried out orders to transfer the money from Starlink and X to Brazil’s government. “After the payment of the full amount that was owed, the justice (de Moraes) considered there was no need to keep the bank accounts frozen and ordered the immediate unfreezing of bank accounts/financial assets,” the court said, as quoted by The Associated Press.

The suspension of X’s social platform, formerly named Twitter, remains in place. The dispute began several months ago when Musk said he would disobey an order from de Moraes to suspend dozens of accounts accused of spreading disinformation. De Moraes later ordered the X social platform to be blocked by Internet service providers. X closed its office in Brazil and did not pay the fines.

Starlink initially said it would refuse to block X on its broadband service until the government unfroze its assets. Starlink quickly backtracked, agreeing to block X, but said it would fight the asset freeze in court. The Brazil Supreme Court’s announcement of the money transfers said that Starlink and X missed a deadline to appeal the finding that they are part of the same de facto economic group.

Shotwell accused justice of “harassing Starlink”

Starlink has called the order to freeze its assets “an unfounded determination that Starlink should be responsible for the fines levied—unconstitutionally—against X.” SpaceX President Gwynne Shotwell accused de Moraes of “harassing Starlink.”

X has said that de Moraes targeted the platform “simply because we would not comply with his illegal orders to censor his political opponents.” X also argued that it is not defying Brazilian law.

“We are absolutely not insisting that other countries have the same free speech laws as the United States. The fundamental issue at stake here is that Judge de Moraes demands we break Brazil’s own laws. We simply won’t do that,” X’s Global Government Affairs account wrote.

As noted by CNBC, Brazilian news agency UOL reported last week “that some of the accounts de Moraes ordered Musk to suspend at X belong to users who allegedly threatened federal police officers involved in a probe of former right-wing Brazilian President Jair Bolsonaro.”

Bolsonaro was accused of instigating the January 8, 2023, attack on the Brazilian Congress that occurred after Bolsonaro’s election loss. Bolsonaro and his supporters praised Musk in April of this year after Musk’s decision to defy the orders to block X accounts associated with Bolsonaro supporters.
