Elon Musk declares “it is war” on ad industry as X sues over “illegal boycott”

Aurich Lawson | Getty Images

Elon Musk’s X Corp. today sued the World Federation of Advertisers and several large corporations, claiming they “conspired, along with dozens of non-defendant co-conspirators, to collectively withhold billions of dollars in advertising revenue” from the social network formerly known as Twitter.

“We tried peace for 2 years, now it is war,” Musk wrote today, a little over eight months after telling boycotting advertisers to “go fuck yourself.”

X’s lawsuit in US District Court for the Northern District of Texas targets a World Federation of Advertisers initiative called the Global Alliance for Responsible Media (GARM). The other defendants are Unilever PLC; Unilever United States; Mars, Incorporated; CVS Health Corporation; and Ørsted A/S. Those companies are all members of GARM. X itself is still listed as one of the group’s members.

“This is an antitrust action relating to a group boycott by competing advertisers of one of the most popular social media platforms in the United States… Concerned that Twitter might deviate from certain brand safety standards for advertising on social media platforms set through GARM, the conspirators collectively acted to enforce Twitter’s adherence to those standards through the boycott,” the lawsuit said.

The lawsuit seeks treble damages to be calculated based on the “actual damages in an amount to be determined at trial.” X also wants “a permanent injunction under Section 16 of the Clayton Act, enjoining Defendants from continuing to conspire with respect to the purchase of advertising from Plaintiff.”

The lawsuit came several weeks after Musk wrote that X “has no choice but to file suit against the perpetrators and collaborators in the advertising boycott racket,” and called for “criminal prosecution.” Musk’s complaints were buoyed by a House Judiciary Committee report claiming that “the extent to which GARM has organized its trade association and coordinates actions that rob consumers of choices is likely illegal under the antitrust laws and threatens fundamental American freedoms.”

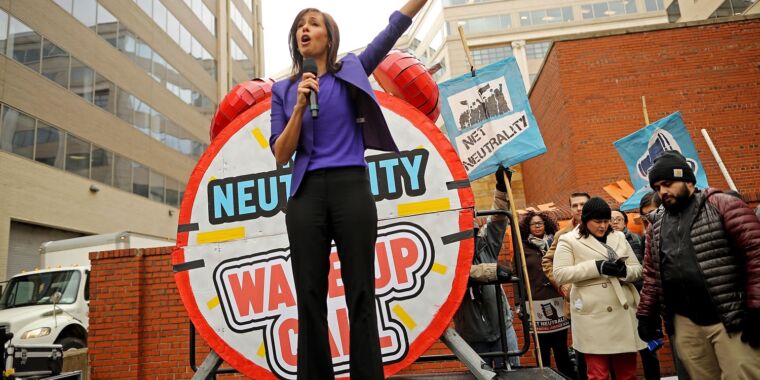

Yaccarino claims “illegal boycott” is stain on industry

We contacted all of the organizations named as defendants in the lawsuit and will update this article if any provide a response.

An advertising industry watchdog group called the Check My Ads Institute, which is not involved in the lawsuit, said that Musk’s claims should fail under the First Amendment. “Advertisers have a First Amendment right to choose who and what they want to be associated with… Elon Musk and X executives have the right, protected by the First Amendment, to say what they want online, even when it’s inaccurate, and advertisers have the right to keep their ads away from it,” the group said.

X CEO Linda Yaccarino posted an open letter to advertisers claiming that the alleged “illegal boycott” is “a stain on a great industry, and cannot be allowed to continue.”

“The illegal behavior of these organizations and their executives cost X billions of dollars… To those who broke the law, we say enough is enough. We are compelled to seek justice for the harm that has been done by these and potentially additional defendants, depending what the legal process reveals,” Yaccarino wrote.

Yaccarino also sought to gain support from X users in a video message. “These organizations targeted our company and you, our users,” she said.

Musk followed up with a post encouraging other companies to sue advertisers and claimed that advertisers could face “criminal liability via the RICO Act.” X previously filed lawsuits against the Center for Countering Digital Hate (CCDH) and Media Matters for America, blaming both for advertising losses.

X doesn’t provide public earnings reports because Musk took the company private after buying Twitter. A recent New York Times article said that “in the second quarter of this year, X earned $114 million in revenue in the United States, a 25 percent decline from the first quarter and a 53 percent decline from the previous year.”
