California startup to demonstrate space weapon on its own dime

“All of the pieces that are required to make it viable exist.”

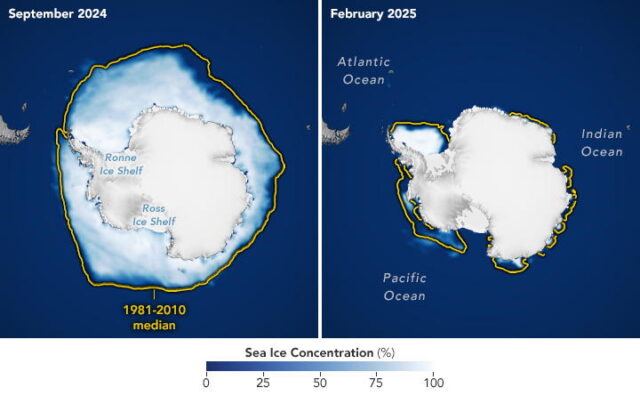

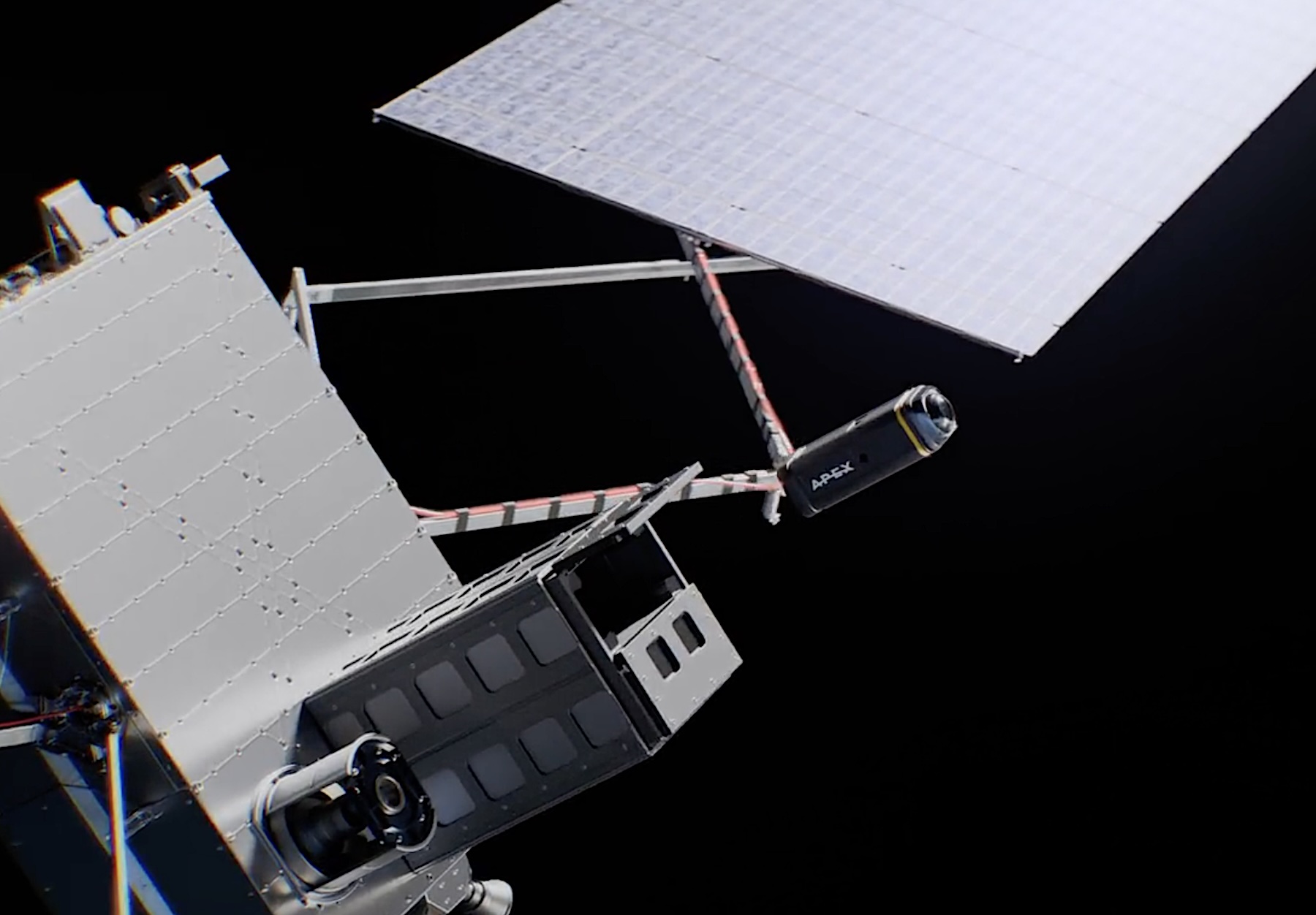

This illustration released by Apex depicts a space-based interceptor fired from a satellite in low-Earth orbit. Credit: Apex

Defense contractors are in full sales mode to win a piece of a potentially trillion-dollar pie for development of the Trump administration’s proposed Golden Dome missile shield.

CEOs are touting their companies’ ability to rapidly spool up satellite, sensor, and rocket production. Publicly, they all agree with the assertion of Pentagon officials that US industry already possesses the technologies required to make a homeland missile defense system work.

The challenge, they say, is tying all of it together under the umbrella of a sophisticated command and control network. Sensors must be able to detect and track missile threats, and that information must rapidly reach weapons that can shoot them down. Gen. Chance Saltzman, the Space Force’s top commander, likes to call Golden Dome a “system of systems.”

One of these systems stands apart. It’s the element that was most controversial when former President Ronald Reagan announced the Strategic Defense Initiative, or “Star Wars” program, a concept similar to Golden Dome that fizzled after the end of the Cold War.

Like the Star Wars concept 40 years ago, Golden Dome’s pièce de résistance will be a fleet of space-based interceptors loitering in orbit a few hundred miles overhead, ready to shoot down missiles shortly after they are launched. Pentagon officials haven’t disclosed the exact number of interceptors required to fulfill Golden Dome’s mission of defending the United States against a volley of incoming missiles. It will probably be in the thousands.

Skin in the game

Last month, the Defense Department released a request for prototype proposals for space-based interceptors (SBIs). The Space Force said it plans to sign agreements with multiple companies to develop and demonstrate SBIs and compete for prizes. This is an unusual procurement strategy for the Pentagon, requiring contractors to spend their own money on building and launching the SBIs into space, with the hope of eventually winning a lucrative production contract.

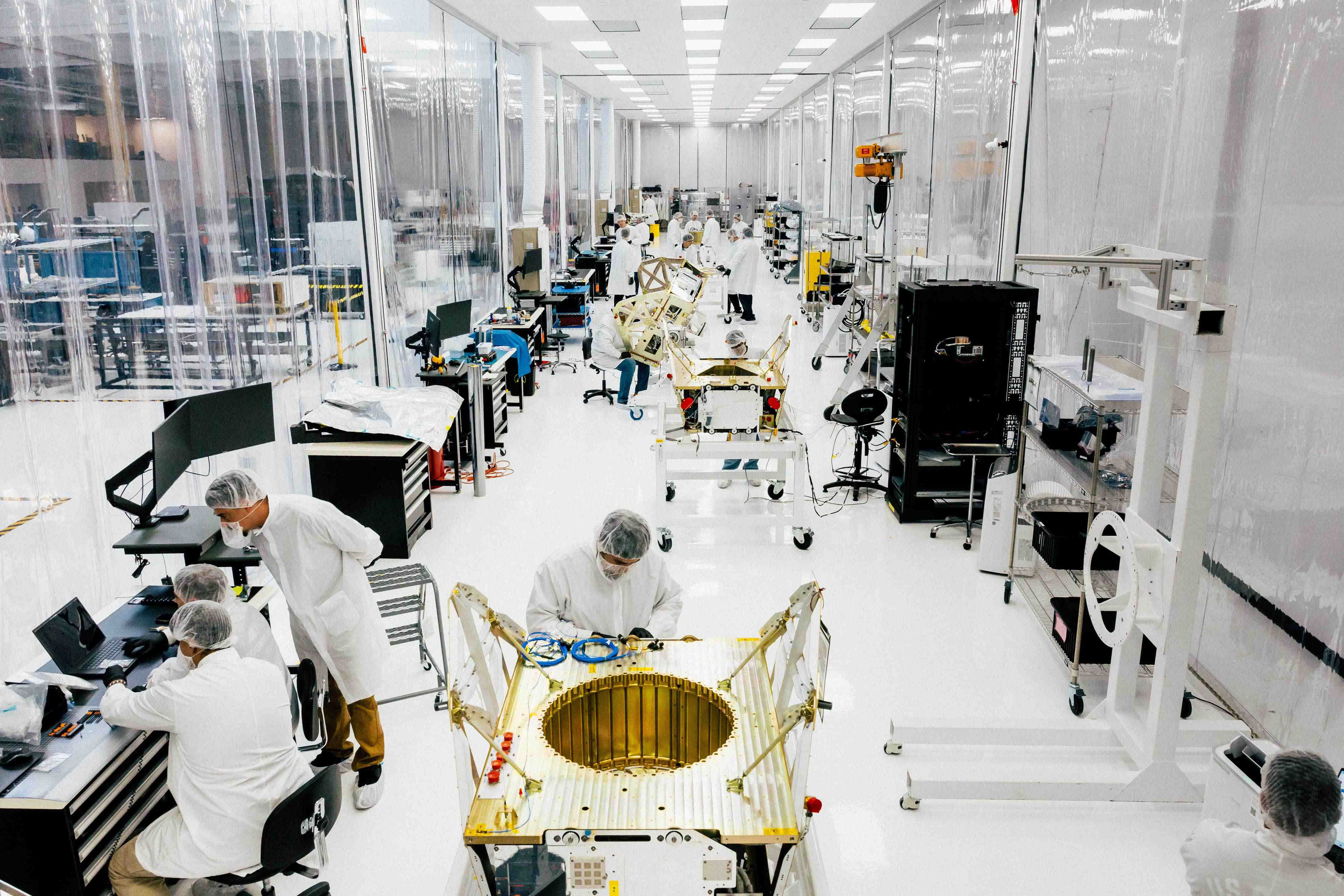

Apex is one of the companies posturing for an SBI contract. Based in Los Angeles, Apex is one of several US startups looking to manufacture satellites faster and cheaper than traditional aerospace contractors. The company’s vision is to rapidly churn out satellite buses, essentially the spacecraft’s chassis, to be integrated with a customer’s payloads. So far, Apex has raised more than $500 million from investors and launched its first satellite in 2024, just two years after the company’s founding. Apex won a $46 million contract from the Space Force in February to supply the military with an unspecified number of satellites through 2032.

Apex says its satellites can perform a range of missions: remote sensing and Earth observation, communications, AI-powered edge processing, and technology demos. The largest platform in Apex’s portfolio can accommodate payloads of up to 500 kilograms (1,100 pounds), with enough power to support direct-to-cell connectivity and government surveillance missions.

A look inside Apex’s satellite factory in Los Angeles. Credit: Apex

Now, Apex wants to show its satellite design can serve as an orbiting weapons platform.

“Apex is built to move fast, and that is exactly what America and our allies need to ensure we win the New Space Race,” Ian Cinnamon, the company’s co-founder and CEO, said in a statement Wednesday. “In under a year, we are launching the host platform for space-based interceptors, called an Orbital Magazine, which will deploy multiple prototype missile interceptors in orbit.”

The demonstration mission is called Project Shadow. It’s intended to “prove that an operational SBI constellation can be deployed in the timeframe our country needs,” Cinnamon said. “Apex isn’t waiting for handouts or contracts; we are developing this Orbital Magazine technology on our own dime and moving incredibly fast.”

Star Wars redux

Just one week into his second term in the White House, President Donald Trump signed an executive order for what would soon be named Golden Dome, citing an imperative to defend the United States against ballistic missiles and emerging weapons systems like hypersonic glide vehicles and drones.

The Trump administration said in May that the defense shield would cost $175 billion over the next three years. Most analysts peg the long-term cost much higher, but no one really knows. The Pentagon hasn’t released a detailed architecture for what Golden Dome will actually entail, and the uncertainty has driven independent cost estimates ranging from $500 billion to more than $3 trillion.

Golden Dome’s unknown costs, lack of definition, and unpredictable effect on strategic stability have drawn criticism from Democratic lawmakers.

But unlike the reaction to the Reagan-era Star Wars program, there’s not much pushback on Golden Dome’s technical viability.

“All of the pieces that are required to make it viable exist. They’re out there,” Cinnamon told Ars. “We have satellites, we have boosters, we have seekers, we have fire control, we have IFTUs (in-flight target updates), we have inter-satellite links. The key is, all those pieces need to talk to each other and actually come together, and that integration is really, really difficult. The second key is, in order for it to be viable, you need enough of them in space to actually have the impact that you need.”

This frame from an Apex animation shows a space-based interceptor deploying from an Orbital Magazine.

Apex says its Project Shadow demo is scheduled to launch in June 2026. Once in orbit, the Project Shadow spacecraft will deploy two interceptors, each firing a high-thrust solid rocket motor from a third-party supplier. “The Orbital Magazine will prove its ability to environmentally control the interceptors, issue a fire control command, and close an in-space cross-link to send real-time updates post-deployment,” Apex said in a statement.

The Orbital Magazine on Apex’s drawing board could eventually carry more than 11,000 pounds (5,000 kilograms) of interceptor payload, the company said. “Orbital Magazines host one or many interceptors, allowing thousands of SBIs to be staged in orbit.”

Apex is spending about $15 million of its own money on Project Shadow. Cinnamon said Apex is working with other companies on “key parts of the interceptor and mission analysis” for Project Shadow, but he wasn’t ready to identify them yet. One possible propulsion supplier is Anduril Industries, the weapons company started by Oculus founder Palmer Luckey in 2017. Apex and Anduril have worked together before.

“What we’re very good at is high-rate manufacturing and piecing it together,” Cinnamon said. “We have suppliers for everything else.”

Apex is the first company to publicly disclose any details of an SBI demonstration, but it won’t be the last. Cinnamon said Apex will provide further updates on Project Shadow as it nears launch.

“We’re talking about it publicly because I believe it’s really important to inspire both the US and our allies, and show the pace of innovation and show what’s possible in today’s world,” Cinnamon said. “We are very fortunate to have an amazing team, a very large war chest of capital, and the ability to go do a project like this, truly for the good of the US and the good of our allies.”

A solid rocket motor designed for the ascent vehicle for NASA’s Mars Sample Return mission was test-fired by Northrop Grumman in 2023. A similar rocket motor could be used for space-based interceptors. Credit: NASA

The usual suspects

Apex will have a lot of competition vying for a slice of Golden Dome. America’s largest defense contractors have all signaled their interest in tapping into Golden Dome cash flows.

Lockheed Martin has submitted proposals to the Pentagon for space-based interceptors, the company’s CEO, James Taiclet, said Tuesday in a quarterly earnings call.

“We’re actually planning for a real on-orbit, space-based interceptor demonstration by 2028,” Taiclet said, without providing further details. Taiclet said Lockheed Martin is also working on command and control solutions for Golden Dome.

“At the same time, we’re rapidly increasing production capacity across the missiles, sensors, battle management systems, and satellite integration opportunities that will be directly relevant to achieve the overarching objective of Golden Dome,” Taiclet said.

“SBI, the space-based interceptor, is one of those,” he said. “We are building prototypes—full operational prototypes, not things in labs, not stuff on test stands, things that will go into space, or in the air, or fly across a missile range. These are real devices that will work and that can be produced at scale. So the space-based interceptor is one we’ve been pursuing already, and that’s all I can say about that.”

Northrop Grumman officials have made similar statements. Kathy Warden, Northrop’s CEO, has said her company is currently conducting “ground-based tests” of SBI-related technology. She didn’t describe the tests, although Northrop Grumman is the nation’s top supplier of solid rocket motors, a key piece of space-based interceptors, and regularly fires them on test stands.

“The architecture and spend plan for Golden Dome are not published, so I won’t comment on those specifically,” Warden said Tuesday. “We are providing some high-fidelity operational analysis that can help the customer understand those requirements, as well as ourselves.”
