Betel nuts have been giving people a buzz for over 4,000 years

Ancient rituals and customs often leave behind obvious archaeological evidence. From the impeccably preserved mummies of Egypt to psychoactive substance residue that remained at the bottom of a clay vessel for thousands of years, some remnants of the past, though not all of them are immediately visible, seem to have defied the ravages of time.

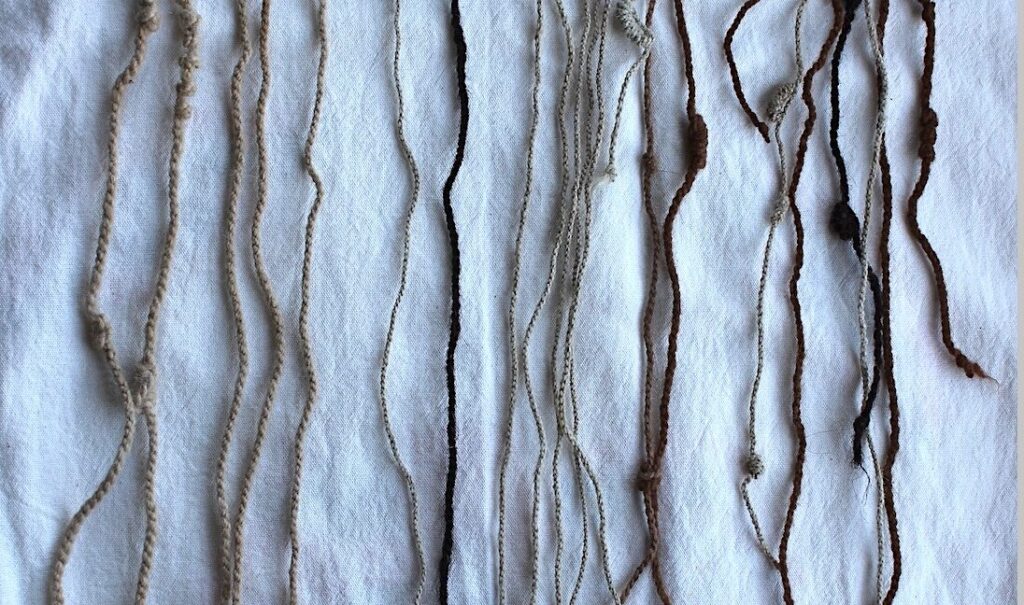

Chewing betel nuts is a cultural practice in parts of Southeast Asia. When chewed, these reddish nuts, which are the fruit of the areca palm, release psychoactive compounds that heighten alertness and energy, promote feelings of euphoria, and help with relaxation. They are usually wrapped in betel leaves with lime paste made from powdered shells or corals, depending on the region.

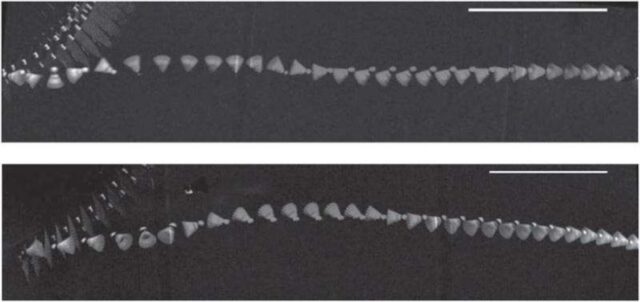

Critically, the teeth of ancient betel nut chewers can be distinguished by their red staining. So when archaeologist Piyawit Moonkham, of Chiang Mai University in Thailand, unearthed 4,000-year-old skeletons from the Bronze Age burial site of Nong Ratchawat, the lack of telltale red stains appeared to indicate that these individuals had not chewed betel nuts.

Yet when he sampled plaque from the teeth, he found that several teeth from one individual contained compounds found in betel nuts. This invisible evidence could indicate that teeth-cleaning practices had removed the color or that the nuts were consumed in other ways.

“We found that these mineralized plaque deposits preserve multiple microscopic and biomolecular indicators,” Moonkham said in a study recently published in Frontiers. “This initial research suggested the detection potential for other psychoactive plant compounds.”

Since time immemorial

Betel nut chewing has been practiced in Thailand for at least 9,000 years. During the Lanna Kingdom, which began in the 13th century, teeth stained from betel chewing were considered a sign of beauty. While the practice is fading, it is still a part of some religious ceremonies, traditional medicine, and recreational gatherings, especially among certain ethnic minorities and people living in rural areas.
