CEO of failing hospital chain got $250M amid patient deaths, layoffs, bankruptcy

“Outrageous corporate greed”

Steward Health Care System, run by CEO Ralph de la Torre, filed for bankruptcy in May.

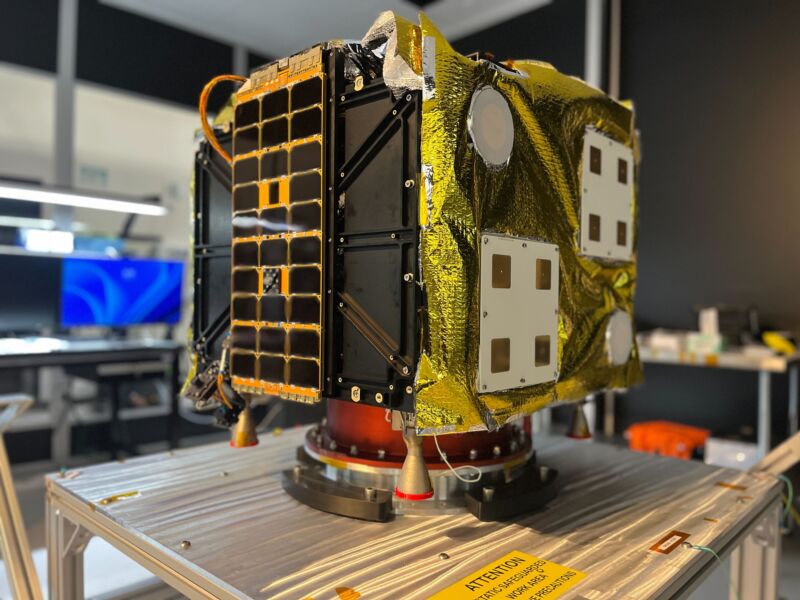

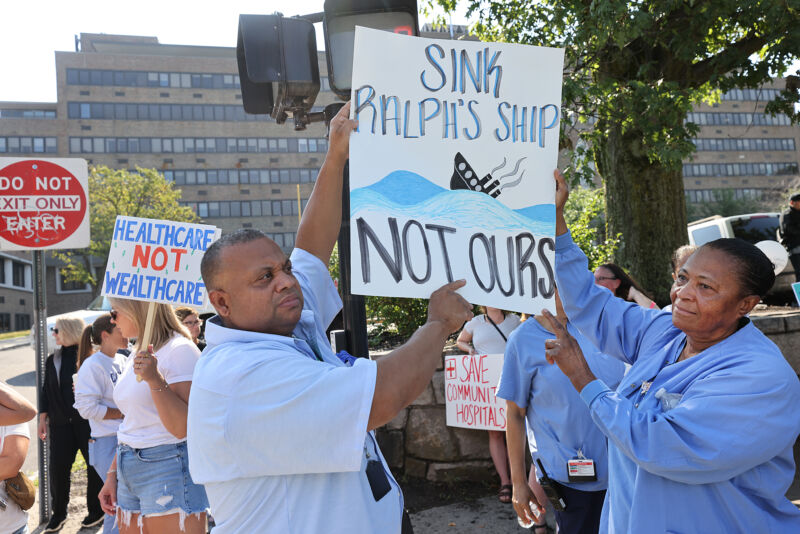

Hospital staff and community members held a protest in front of Carney Hospital in Boston on August 5 after Steward announced it would close the hospital. “Ralph” refers to Steward’s CEO, Ralph de la Torre, who owns a yacht.

As the more than 30 hospitals in the Steward Health Care System scrounged for cash to cover supplies, shuttered pediatric and neonatal units, closed maternity wards, laid off hundreds of health care workers, and put patients in danger, the system paid out at least $250 million to its CEO and his companies, according to a report by The Wall Street Journal.

The newly revealed financial details bring yet more scrutiny to Steward CEO Ralph de la Torre, a Harvard University-trained cardiac surgeon who, in 2020, took over majority ownership of Steward from the private equity firm Cerberus. De la Torre and his companies have reportedly been paid at least $250 million since that takeover. In May, Steward, which has hospitals in eight states, filed for Chapter 11 bankruptcy.

Critics, including members of the Senate Committee on Health, Education, Labor, and Pensions (HELP), allege that de la Torre stripped the system’s hospitals of assets, siphoned payments from them, and loaded them with debt, all while reaping huge payouts that made him obscenely wealthy.

Alleged greed

For instance, de la Torre sold the land under the system’s hospitals to a large hospital landlord, Medical Properties Trust, leaving Steward hospitals on the hook for large rent payments. Under de la Torre’s leadership, Steward also paid a management consulting firm $30 million a year to “provide executive oversight and overall strategic directive.” But de la Torre was the majority owner of the consulting firm, which also employed other Steward executives. As the WSJ put it, Steward “effectively paid its CEO’s firm, which employed Steward executives, for executive-management services for Steward.”

In 2021, while the COVID-19 pandemic strained hospitals, Steward distributed $111 million to shareholders. With de la Torre owning 73 percent of the company at the time, his share would have been around $81 million, the WSJ reported. That year, de la Torre bought a 190-foot yacht for $40 million. He also owns a $15 million custom-made luxury fishing boat called Jaruco. The Senate HELP Committee, meanwhile, notes that a Steward affiliate owned two jets, one valued at $62 million and a second “backup” jet valued at $33 million.

In 2022, de la Torre got married in an elaborate wedding on Italy’s Amalfi Coast and bought a 500-acre Texas ranch for at least $7.2 million. His new wife, Nicole Acosta, 29, is a competitive equestrian who trains at a facility near the ranch. She competes on a horse that was sold in 2014 for $3.5 million, though it’s unclear how much the couple paid for it. Besides the ranch, de la Torre, 58, owns an 11,108-square-foot mansion in Dallas valued at $7.2 million, the WSJ reported.

While de la Torre was living a lavish lifestyle, Steward hospitals faced dire situations, as they had for years. An investigation by the Senate HELP Committee noted that Steward had shut down several hospitals in Massachusetts, Ohio, Arizona, and Texas between 2014 and this year, laying off thousands of health care workers and leaving communities in the lurch. It closed several pediatric wards in Massachusetts and Texas; in Florida, it closed neonatal units and eliminated maternity services. In Louisiana, Steward patients faced “immediate jeopardy.”

“Third-world medicine”

In a July hearing, Sen. Bill Cassidy (R-LA), ranking member of the HELP Committee, spoke of the conditions at Glenwood Regional Medical Center in West Monroe, Louisiana, which Steward allegedly mismanaged. “According to a report from the Centers for Medicare and Medicaid Services, a physician at Glenwood told a Louisiana state inspector that the hospital was performing ‘third-world medicine,’” Cassidy said.

Further, “one patient died while waiting for a transfer to another hospital because Glenwood did not have the resources to treat them,” the senator said. “Unfortunately, Glenwood is not unique,” he went on. “At a Steward-owned Massachusetts hospital, a woman died after giving birth when doctors realized mid-surgery that the supplies needed to treat her were previously repossessed due to Steward’s financial troubles.” The hospital reportedly owed the supplier $2.5 million in unpaid bills.

Additionally, the WSJ investigation dug up records that showed that a pest control company discovered 3,000 bats living in one of Steward’s Florida hospitals. In Arizona, a Phoenix-area hospital was without air conditioning during scorching temperatures, and its kitchen was closed for health-code violations. The state ordered it to shut down last week.

“Dr. de la Torre and his executive teams’ poor financial decisions and gross mismanagement of its hospitals is shocking,” Cassidy said. “Patients’ lives are at risk. The American people deserve answers.”

Outrage

Senate HELP Committee chair Bernie Sanders (I-VT) went further, saying that the US health care system “is designed not to make patients well, but to make health care executives and stockholders extraordinarily wealthy. … Perhaps more than anyone else in America, Ralph de la Torre, the CEO of Steward Health Care, epitomizes the type of outrageous corporate greed that is permeating throughout our for-profit health care system.”

Sanders lamented how de la Torre’s payouts could have instead benefited patients and communities, asking: “How many of Steward’s hospitals could have been prevented from closing down, how many lives could have been saved, how many health care workers would still have their jobs if Dr. de la Torre spent $150 million on high-quality health care instead of a yacht, two private jets and a luxury fishing boat?”

On July 25, the committee voted 16–4 to subpoena de la Torre so they could ask him such questions in person. To date, de la Torre has refused to voluntarily appear before the committee and declined to comment on the WSJ report. The committee’s vote marks the first time since 1981 that it has issued a subpoena.

Separately, Steward and de la Torre are under investigation by the Department of Justice over allegations of fraud and corruption in a deal to run hospitals in Malta.
