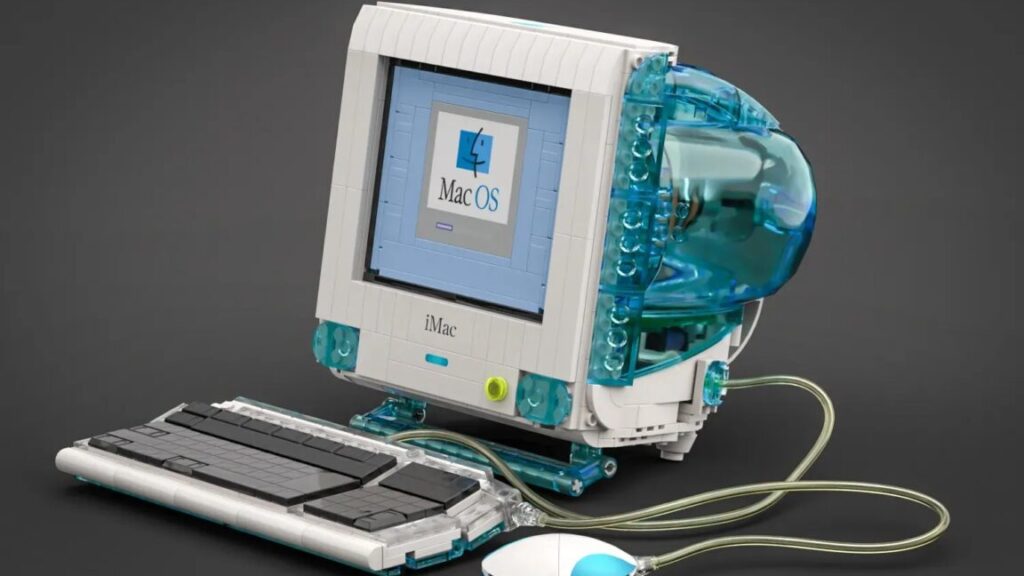

700-piece Lego G3 iMac design faces long-shot odds to get made, but I still want one

I don’t usually get too excited about user-submitted designs on the Lego Ideas website, especially when those ideas would require negotiating a license with another company. User-generated designs need to reach 10,000 supporters before Lego will even review them, and they then need to pass that review to go into production, two pretty high bars to clear even without factoring in some other brand’s conditions and requests.

But I’m both intrigued and impressed by this Lego version of Apple’s old Bondi Blue G3 iMac that has been making the rounds today. Submitted by a user named terauma, the 700-plus-piece set comes complete with keyboard, hockey-puck mouse, a classic Mac OS boot screen, and a cathode ray tube and circuit boards visible through the set’s transparent blue casing (like the original iMac, it may cause controversy by excluding a floppy disk drive). The design has already reached 5,000 supporters, and it has 320 days left to reach the 10,000-supporter benchmark required to be reviewed by Lego.

With its personality-forward aesthetics and Jony Ive-led design, the original iMac was the first step down the path that led to blockbuster products like the iPod and iPhone. It was Apple’s first all-new Mac design after CEO Steve Jobs returned to the company in the late ’90s, and while it lacked some features included in contemporary PCs, its tightly integrated design and ease of setup helped it stand out against the beige desktop PCs of the day. Today’s colorful Apple Silicon iMacs are clearly inspired by the original design.
