Cable channel subscribers grew for the first time in 8 years last quarter

In a surprising, and likely temporary, turn of events, the number of people paying to watch cable channels has grown.

On Monday, research firm MoffettNathanson released its “Cord-Cutting Monitor Q3 2025: Signs of Life?” report. It found that pay TV operators, including cable companies, satellite companies, and virtual multichannel video programming distributors (vMVPDs) like YouTube TV and Fubo, added 303,000 net subscribers in Q3 2025.

According to the report, “There are more linear video subscribers now than there were three months ago. That’s the first time we’ve been able to say that since 2017.”

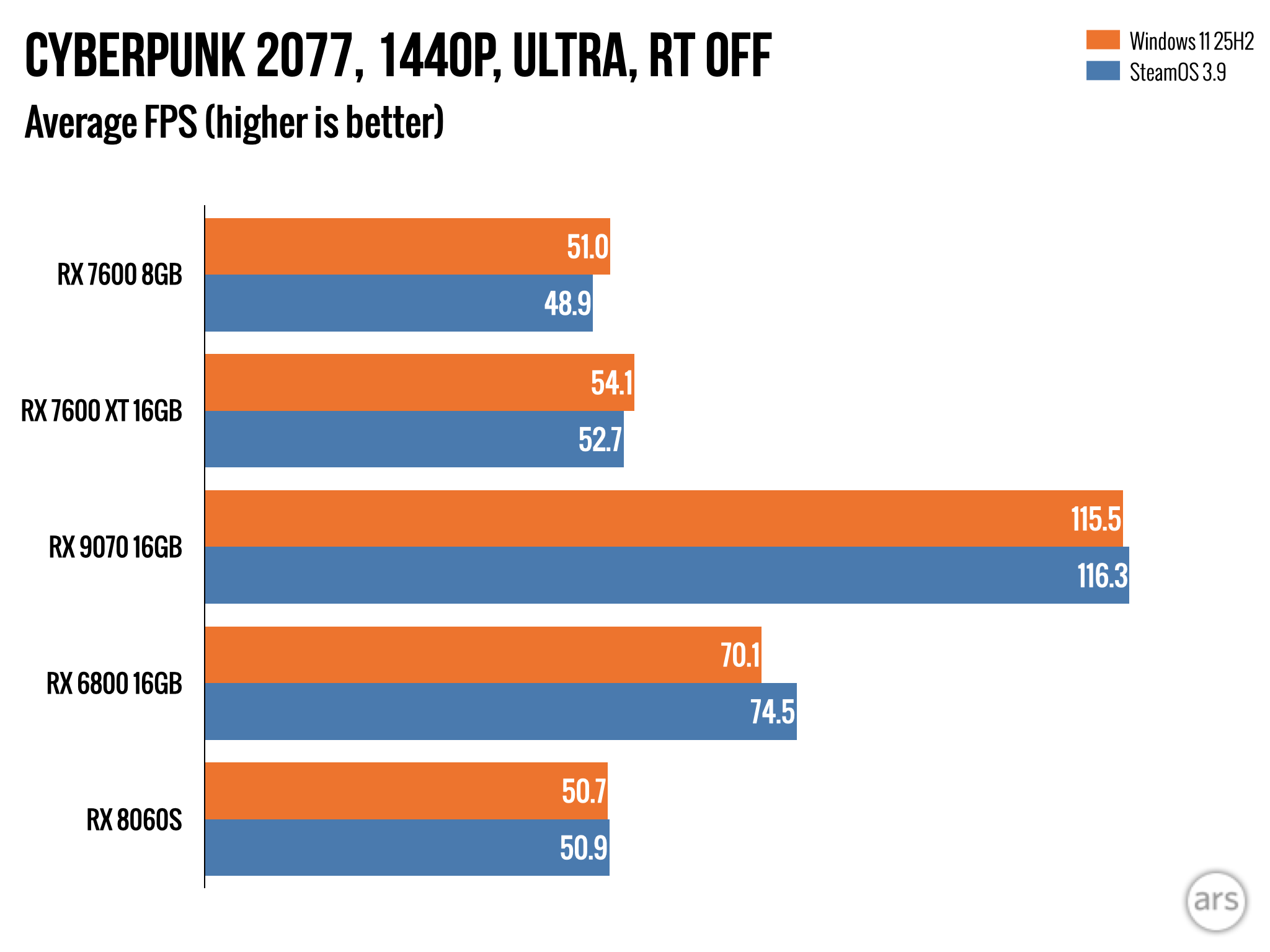

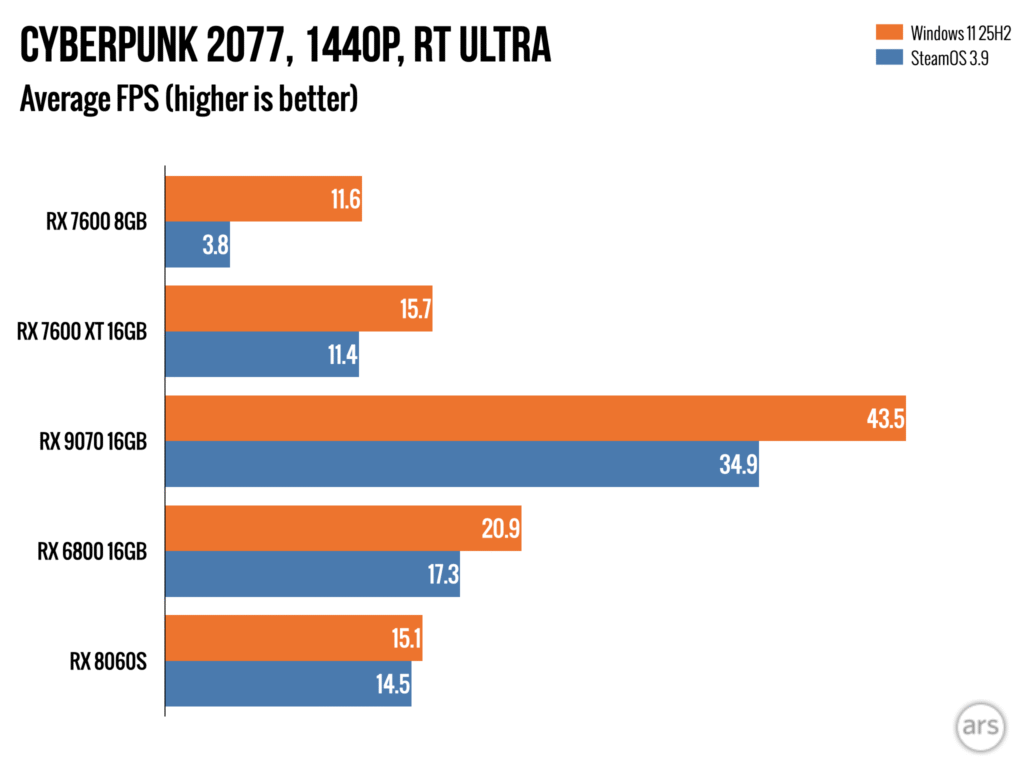

In Q3 2017, MoffettNathanson reported that pay TV gained 318,000 net new subscribers. But since then, the industry’s subscriber count has been declining, including a net loss of 1,045,000 customers in Q2 2025, as depicted in the accompanying graph.

Credit: MoffettNathanson

The world’s largest vMVPD by subscriber count, YouTube TV, claimed 8 million subscribers in February 2024; some analysts estimate that number is now at 9.4 million. In its report, MoffettNathanson estimated that YouTube TV added 750,000 subscribers in Q3 2025, compared to 1 million in Q3 2024.

Traditional pay TV companies also contributed to the industry’s unexpected growth by bundling their services with streaming subscriptions. Charter Communications offers bundles with nine streaming services, including Disney+, Hulu, and HBO Max. In Q3 2024, it saw net attrition of 294,000 customers, compared to about 70,000 in Q3 2025. Other cable companies have made similar moves. Comcast, for example, launched a streaming bundle with Netflix, Peacock, and Apple TV in May 2024. For Q3 2025, Comcast reported its best pay TV subscriber result in almost five years, though that was still a net loss of 257,000 customers.
