Microsoft debuts major Surface overhauls that regular people can’t buy

business time —

Not the first business-exclusive Surfaces, but they’re the most significant.

-

Microsoft

-

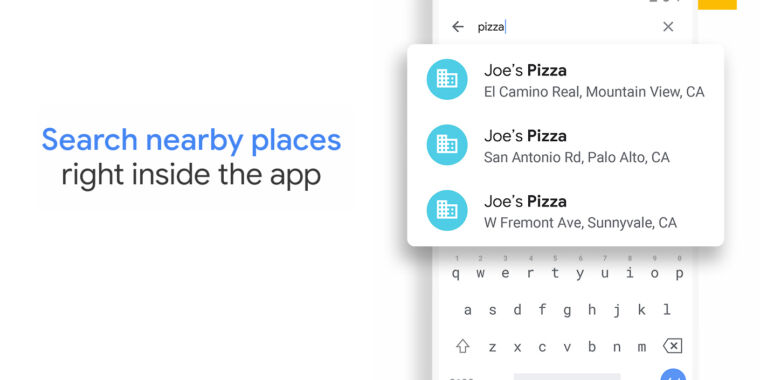

Yes, both devices launch with Microsoft’s new Copilot key.

Microsoft

-

The Surface Pro 10. Looks familiar.

Microsoft

-

An NFC reader supports physical security keys.

Microsoft

-

The 13.5- and 15-inch Surface Laptop 6.

Microsoft

-

The 15-inch Laptop 6 can be configured with a security card reader, another business thing.

Microsoft

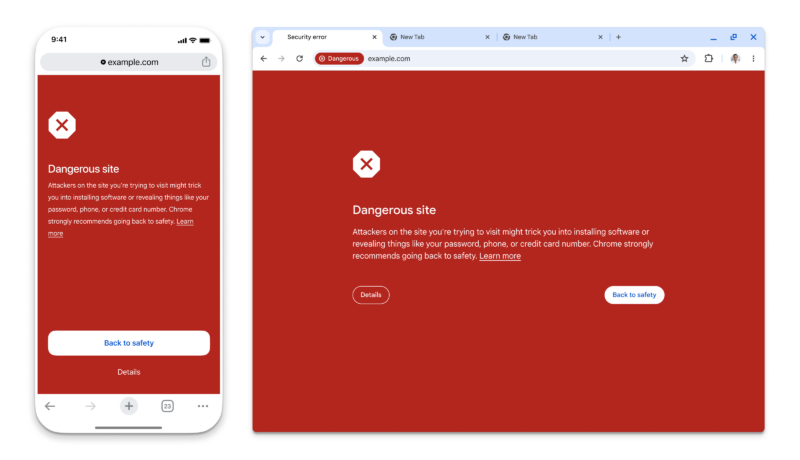

Microsoft is debuting major updates to two of its Surface PCs today: the Surface Pro 10 and the 13.5- and 15-inch Surface Laptop 6 are both significant internal upgrades to Microsoft’s mainstream Surface devices. Both lines were last updated nearly a year and a half ago, and both are getting new Intel chips with significantly faster integrated GPUs, upgraded webcams, the Copilot key, and better battery life (according to Microsoft’s spec sheets).

The catch is that both of these Surfaces are being sold exclusively to businesses and commercial customers; as of this writing, regular people will not be able to buy one directly from Microsoft, and they won’t show up in most retail stores.

These aren’t the first Surface products released exclusively for businesses. Microsoft introduced a new business-exclusive Surface Go 4 tablet last fall, and a Surface Pro 7+ variant for businesses in early 2021. This is, however, the first time Microsoft has introduced new versions of its flagship tablet and laptop without also making them available to consumers. You can find some of these business-only PCs for sale at third-party retailers, but usually with extended shipping times and higher prices than consumer systems.

Though this seems like a step back from the consumer PC market, Microsoft is still reportedly planning new consumer Surfaces. The Verge reports that Microsoft is planning a new Surface with Qualcomm’s upcoming Snapdragon X chip, to debut in May. It’s that device, rather than today’s traditional Intel-based Surface Pro 10, that will apparently take over as the flagship consumer Surface PC.

“We absolutely remain committed to consumer devices,” a Microsoft spokesperson told Ars. “Building great devices that people love to use aligns closely with our company mission to empower individuals as well as organizations. We are excited to be bringing devices to market that deliver great AI experiences to our customers. This commercial announcement is only the first part of this effort.”

Making an Arm-based device the flagship would be a big departure for Microsoft, which for a few years now has offered the Intel-based Surface tablets as its primary convertible tablets and the Arm-based Surface Pro X and Surface Pro 9 with 5G as separate niche variants. Older Qualcomm chips’ mediocre performance and lingering software and hardware compatibility issues with the Arm version of Windows have held those devices back, though Snapdragon X at least promises to solve the performance issues. If Microsoft plans to go all-in on Arm for its flagship consumer Surface device, it at least makes a little sense to retain the Intel-based Surface for businesses that will be more sensitive to those performance and compatibility problems.

What’s new in the Surface Pro 10 and Surface Laptop 6?

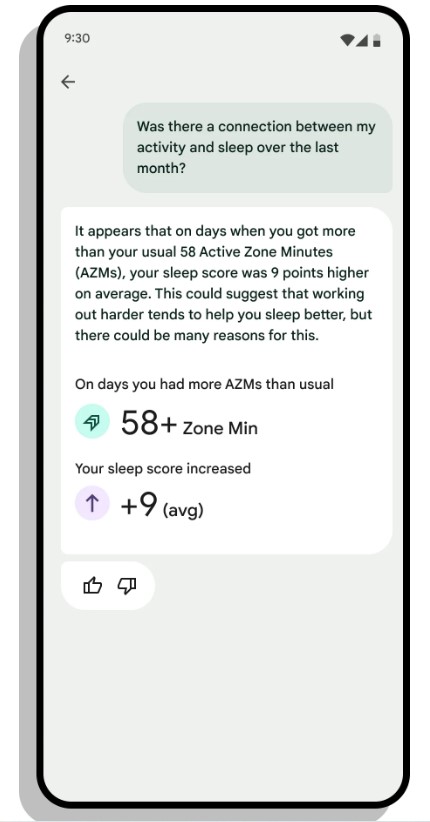

As for the hardware itself, for people who might get one at work or who go out of their way to find one: the biggest upgrade is that both Surface devices have been updated with Intel Core Ultra CPUs based on the Meteor Lake architecture. While the processor performance improvements in these chips are a bit underwhelming, their integrated Arc GPUs are significantly faster than the old Iris Xe GPUs. The chips also include a neural processing unit (NPU) that can accelerate some AI and machine-learning workloads; Microsoft currently uses it mostly for fancy webcam effects, but more software will likely take advantage of NPUs as they become more widely available.

Those new chips (and small battery capacity increases) have also bumped all of Microsoft’s battery life estimates up a bit. The Surface Pro 10 is said to be good for 19 hours of “typical device usage,” up from 15.5 hours for the Intel version of the Surface Pro 9. The 13.5- and 15-inch Surface Laptop 6 models get 18.5 and 19 hours of battery life, respectively, up from 18 and 17 hours for the Surface Laptop 5.

The downside is that the new Surface Laptops are a bit heavier than the Laptop 5: the 13.5- and 15-inch models weigh 3.06 pounds and 3.7 pounds, respectively, compared to 2.86 and 3.44 pounds for their predecessors.

Both models also get new webcam hardware to go with those NPU-accelerated video effects. The Surface Pro goes from a 1080p webcam to a 1440p webcam, and the Surface Laptop goes from 720p to 1080p. The Surface Pro 10’s camera also features an “ultrawide field of view.” Both cameras support Windows Hello biometric logins using a scan of your face, and the Surface Pro 10 also has an NFC reader for use with hardware security keys. As business machines, both devices also have dedicated hardware TPM modules to support drive encryption and other features, instead of the firmware TPMs that the Surface Pro 9 and Surface Laptop 5 used. Neither supports Microsoft’s Pluton technology.

A new Type Cover with a brighter backlight and bolder legends was made for users with low vision or those who want to reduce eyestrain.

Microsoft

Neither device gets a big screen update, though there are small improvements. Microsoft says the Surface Pro 10’s 13-inch, 2880×1920 touchscreen is 33 percent brighter than before, with a maximum brightness of 600 nits. The screen has a slightly better contrast ratio than before and an anti-reflective coating; it also still supports a 120 Hz refresh rate. The Surface Laptop 6 doesn’t get a brightness bump but does have better contrast and an anti-reflective coating. Both devices are still using regular IPS LCD panels rather than OLED or something fancier.

And finally, some odds and ends. The 15-inch Surface Laptop 6 picks up a second Thunderbolt port and optional support for a smart card reader. The Surface Pro now has a “bold keyset” keyboard option, with an easier-to-read font and brighter backlight for users with low vision. These keyboards should also work with some older Surface devices, if you can find them.

The systems will be available to pre-order “in select markets” on March 21, and they’ll begin shipping on April 9. Microsoft didn’t share any specifics about pricing, though as business machines, we’d generally expect them to cost a little more than equivalent consumer PCs.
