How AI coding agents work—and what to remember if you use them

Agents of uncertain change

From compression tricks to multi-agent teamwork, here’s what makes them tick.

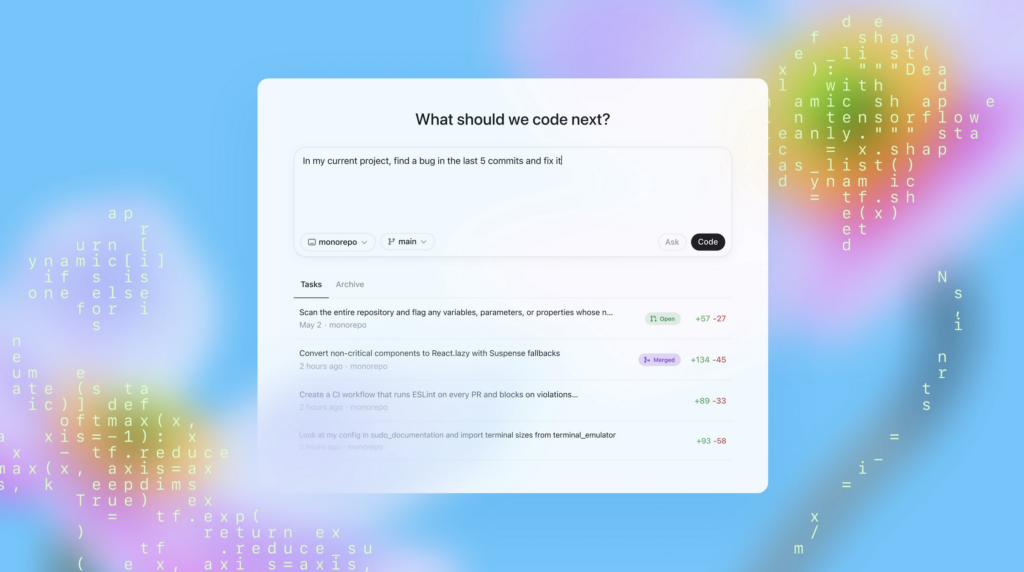

AI coding agents from OpenAI, Anthropic, and Google can now work on software projects for hours at a time, writing complete apps, running tests, and fixing bugs with human supervision. But these tools are not magic and can complicate rather than simplify a software project. Understanding how they work under the hood can help developers know when (and if) to use them, while avoiding common pitfalls.

We’ll start with the basics: At the core of every AI coding agent is a technology called a large language model (LLM), which is a type of neural network trained on vast amounts of text data, including lots of programming code. It’s a pattern-matching machine that uses a prompt to “extract” compressed statistical representations of data it saw during training and provide a plausible continuation of that pattern as an output. In this extraction, an LLM can interpolate across domains and concepts, resulting in some useful logical inferences when done well and confabulation errors when done poorly.

These base models are then further refined through techniques like fine-tuning on curated examples and reinforcement learning from human feedback (RLHF), which shape the model to follow instructions, use tools, and produce more useful outputs.

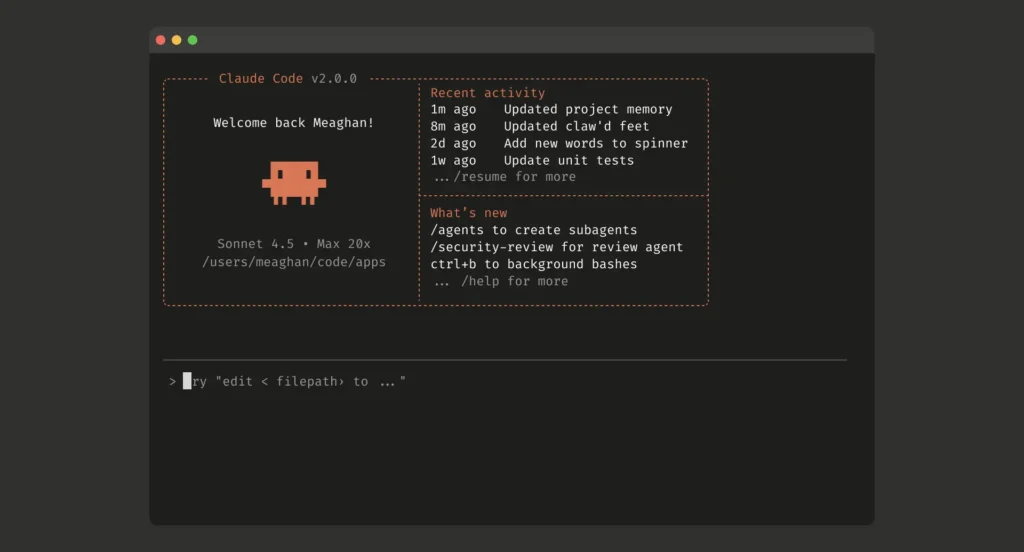

A screenshot of the Claude Code command-line interface. Credit: Anthropic

Over the past few years, AI researchers have been probing LLMs’ deficiencies and finding ways to work around them. One recent innovation was the simulated reasoning model, which generates context (extending the prompt) in the form of reasoning-style text that can help an LLM home in on a more accurate output. Another innovation was an application called an “agent” that links several LLMs together to perform tasks simultaneously and evaluate outputs.

How coding agents are structured

In that sense, each AI coding agent is a program wrapper that works with multiple LLMs. There is typically a “supervising” LLM that interprets tasks (prompts) from the human user and then assigns those tasks to parallel LLMs that can use software tools to execute the instructions. The supervising agent can interrupt tasks below it and evaluate the subtask results to see how a project is going. Anthropic’s engineering documentation describes this pattern as “gather context, take action, verify work, repeat.”
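To make the “gather context, take action, verify work, repeat” pattern concrete, here is a minimal Python sketch of such a loop. It is an illustration only, not how any vendor implements its agent: the function name `run_agent_loop`, the three callbacks, and the `max_steps` cap are all hypothetical stand-ins for what would be LLM calls and tool invocations in a real product.

```python
from dataclasses import dataclass, field


@dataclass
class LoopResult:
    done: bool                    # True if verification passed
    steps: int                    # how many iterations were used
    log: list = field(default_factory=list)  # result of each action


def run_agent_loop(gather, act, verify, max_steps=5):
    """Repeat gather -> act -> verify until verify passes or steps run out.

    gather, act, and verify are hypothetical callbacks; in a real agent
    they would wrap LLM calls and tool use.
    """
    log = []
    for step in range(1, max_steps + 1):
        context = gather()        # e.g. read files, list directories
        result = act(context)     # e.g. edit code, run a command
        log.append(result)
        if verify(result):        # e.g. run the test suite
            return LoopResult(True, step, log)
    return LoopResult(False, max_steps, log)


if __name__ == "__main__":
    # Toy stand-in for a project with three failing tests.
    state = {"failing_tests": 3}

    def gather():
        return dict(state)

    def act(context):
        state["failing_tests"] = max(0, context["failing_tests"] - 1)
        return state["failing_tests"]

    def verify(result):
        return result == 0

    print(run_agent_loop(gather, act, verify))
```

The step cap matters in this sketch: without it, a loop whose verification never passes would run (and spend tokens) indefinitely.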

If run locally through a command-line interface (CLI), users give the agents conditional permission to write files on the local machine (code or whatever is needed), run exploratory commands (say, “ls” to list files in a directory), fetch websites (usually using “curl”), download software, or upload files to remote servers. There are lots of possibilities (and potential dangers) with this approach, so it needs to be used carefully.
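One way to picture “conditional permission” is as a small policy check that runs before any command does. The Python sketch below is a hypothetical illustration, not the permission system of any actual agent: the `decide` function, the `AUTO_APPROVED` list, and the three outcomes are assumptions made for the example.

```python
import shlex

# Hypothetical policy: read-only commands that run without asking.
AUTO_APPROVED = {"ls", "cat", "head", "tail", "pwd"}

# Characters that can chain or redirect commands in a shell.
SHELL_METACHARACTERS = set(";|&><`$")


def decide(command: str) -> str:
    """Return 'allow', 'ask', or 'deny' for a shell command string."""
    if not command.strip():
        return "deny"
    # Be conservative: anything that could chain a second command
    # goes to the human, even if the first program looks harmless.
    if any(ch in SHELL_METACHARACTERS for ch in command):
        return "ask"
    try:
        parts = shlex.split(command)
    except ValueError:
        return "deny"  # e.g. unbalanced quotes
    if parts[0] in AUTO_APPROVED:
        return "allow"
    # Everything else (curl, rm, installers, uploads) needs approval.
    return "ask"


if __name__ == "__main__":
    for cmd in ["ls -la", "curl https://example.com", "ls; rm -rf /tmp/x"]:
        print(f"{cmd!r}: {decide(cmd)}")
```

The design choice here is to default to asking: only a short list of read-only commands is approved automatically, and anything unrecognized is escalated to the user rather than silently run.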

In contrast, when a user starts a task in a web-based agent, such as the web versions of Codex and Claude Code, the system provisions a sandboxed cloud container preloaded with the user’s code repository, where the agent can read and edit files, run commands (including test harnesses and linters), and execute code in isolation. Anthropic’s Claude Code uses operating system-level features to create filesystem and network boundaries within which the agent can work more freely.

The context problem

Every LLM has a short-term memory, so to speak, that limits the amount of data it can process before it “forgets” what it’s doing. This is called “context.” Every time you submit a response to the supervising agent, you are amending one gigantic prompt that includes the entire history of the conversation so far (and all the code generated, plus the simulated reasoning tokens the model uses to “think” more about a problem). The AI model then evaluates this prompt and produces an output. It’s a very computationally expensive process whose cost increases quadratically with prompt size because LLMs process every token (chunk of data) against every other token in the prompt.
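A quick back-of-the-envelope calculation shows what quadratic growth means in practice. The Python sketch below simply counts token-to-token comparisons; it ignores constant factors and any optimizations a real system might apply, and the function name `attention_pairs` is made up for the example.

```python
def attention_pairs(num_tokens: int) -> int:
    """Count token-against-token comparisons for a prompt of this size."""
    return num_tokens * num_tokens


if __name__ == "__main__":
    for n in (1_000, 10_000, 100_000):
        print(f"{n:>7,} tokens -> {attention_pairs(n):>15,} comparisons")
```

In this simplified model, doubling the prompt length quadruples the work, and a prompt 10 times longer costs 100 times as much.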

Anthropic’s engineering team describes context as a finite resource with diminishing returns. Studies have revealed what researchers call “context rot”: As the number of tokens in the context window increases, the model’s ability to accurately recall information decreases. Every new token depletes what the documentation calls an “attention budget.”

This context limit naturally limits the size of a codebase an LLM can process at one time, and if you feed the AI model lots of huge code files (which have to be re-evaluated by the LLM every time you send another response), it can burn up token or usage limits pretty quickly.

Tricks of the trade

To get around these limits, the creators of coding agents use several tricks. For example, AI models are fine-tuned to write code to outsource activities to other software tools. They might write Python scripts to extract data from images or files rather than feeding the whole file through an LLM, which saves tokens and avoids inaccurate results.

Anthropic’s documentation notes that Claude Code also uses this approach to perform complex data analysis over large databases, writing targeted queries and using Bash commands like “head” and “tail” to analyze large volumes of data without ever loading the full data objects into context.
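The same idea can be sketched in a few lines of Python. The two helpers below mimic what “head” and “tail” do: they return only the first or last few lines of a file, so a small sample (rather than the whole file) is all that would need to enter the model’s context. This is an illustration of the general technique, not code from any agent, and the function names are our own.

```python
from collections import deque
from itertools import islice


def head(path: str, n: int = 5) -> list[str]:
    """Return the first n lines, stopping as soon as they are read."""
    with open(path, encoding="utf-8") as f:
        return [line.rstrip("\n") for line in islice(f, n)]


def tail(path: str, n: int = 5) -> list[str]:
    """Return the last n lines, keeping at most n lines in memory."""
    with open(path, encoding="utf-8") as f:
        # deque with maxlen discards older lines as new ones stream in.
        return [line.rstrip("\n") for line in deque(f, maxlen=n)]
```

Calling `head("data.csv", 3)` on a hypothetical multi-gigabyte file would reveal the column names and a couple of sample rows, which is often enough for an agent to write a targeted query instead of reading everything.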

(In a way, these AI agents are guided but semi-autonomous tool-using programs that are a major extension of a concept we first saw in early 2023.)

Another major breakthrough in agents came from dynamic context management. Agents can do this in a few ways that are not fully disclosed in proprietary coding models, but we do know the most important technique they use: context compression.

The command-line version of OpenAI Codex running in a macOS terminal window. Credit: Benj Edwards

When a coding LLM nears its context limit, this technique compresses the context history by summarizing it, losing details in the process but shortening the history to key details. Anthropic’s documentation describes this “compaction” as distilling context contents in a high-fidelity manner, preserving key details like architectural decisions and unresolved bugs while discarding redundant tool outputs.
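As a rough illustration of compaction, the Python sketch below replaces older messages with a summary once an estimated token count passes a limit, while keeping the most recent messages verbatim. Everything in it is an assumption made for the example: the four-characters-per-token estimate is a crude heuristic, the `summarize` callback stands in for what would be an LLM call, and the actual methods used by proprietary agents are not fully disclosed.

```python
def estimate_tokens(text: str) -> int:
    """Crude heuristic (an assumption): roughly four characters per token."""
    return max(1, len(text) // 4)


def compact(history, limit, keep_recent, summarize):
    """Summarize older messages once the history exceeds the token limit.

    history:     list of message strings, oldest first
    limit:       token budget that triggers compaction
    keep_recent: number of newest messages kept word for word
    summarize:   hypothetical callback that condenses a list of messages
    """
    total = sum(estimate_tokens(message) for message in history)
    if total <= limit or len(history) <= keep_recent:
        return history  # still fits, nothing to do
    split = len(history) - keep_recent
    old, recent = history[:split], history[split:]
    return ["[summary] " + summarize(old)] + recent


def keep_key_details(messages):
    """Toy summarizer: keep decisions and open bugs, drop everything else."""
    return " | ".join(m for m in messages if m.startswith(("DECISION:", "BUG:")))


if __name__ == "__main__":
    history = [
        "DECISION: use SQLite",
        "tool output: " + "x" * 400,
        "BUG: login fails on empty password",
        "user: add logout button",
        "agent: working on it",
    ]
    for message in compact(history, limit=50, keep_recent=2, summarize=keep_key_details):
        print(message)
```

In the toy run, the bulky tool output disappears while the architectural decision and the unresolved bug survive in the summary line, which mirrors the trade-off described above: shorter history, fewer details.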

This means the AI coding agents periodically “forget” a large portion of what they are doing every time this compression happens, but unlike older LLM-based systems, they aren’t completely clueless about what has transpired and can rapidly re-orient themselves by reading existing code, written notes left in files, change logs, and so on.

Anthropic’s documentation recommends using CLAUDE.md files to document common bash commands, core files, utility functions, code style guidelines, and testing instructions. AGENTS.md, now a multi-company standard, is another useful way of guiding agent actions in between context refreshes. These files act as external notes that let agents track progress across complex tasks while maintaining critical context that would otherwise be lost.

For tasks requiring extended work, both companies employ multi-agent architectures. According to Anthropic’s research documentation, its system uses an “orchestrator-worker pattern” in which a lead agent coordinates the process while delegating to specialized subagents that operate in parallel. When a user submits a query, the lead agent analyzes it, develops a strategy, and spawns subagents to explore different aspects simultaneously. The subagents act as intelligent filters, returning only relevant information rather than their full context to the lead agent.
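The orchestrator-worker pattern can be sketched with Python’s standard thread pool. In this toy version, each “subagent” searches the same pile of notes for one topic and hands back only the matching lines, which is the filtering behavior described above. The function names and the keyword-matching logic are hypothetical; a real subagent would be an LLM with its own context window and tools.

```python
from concurrent.futures import ThreadPoolExecutor


def run_subagent(topic: str, documents: list[str]) -> list[str]:
    """Hypothetical worker: scan everything, return only relevant lines."""
    return [line for line in documents if topic.lower() in line.lower()]


def orchestrate(topics: list[str], documents: list[str]) -> dict[str, list[str]]:
    """Lead agent: fan out one subagent per topic, then collect the results."""
    if not topics:
        return {}
    with ThreadPoolExecutor(max_workers=len(topics)) as pool:
        # pool.map preserves the order of topics in its results.
        results = pool.map(lambda topic: run_subagent(topic, documents), topics)
        return dict(zip(topics, results))


if __name__ == "__main__":
    notes = [
        "auth: token expiry is 15 minutes",
        "db: uses PostgreSQL 16",
        "ui: dark mode toggle",
        "auth: passwords hashed with bcrypt",
    ]
    for topic, findings in orchestrate(["auth", "db"], notes).items():
        print(topic, "->", findings)
```

The lead agent in this sketch never sees the lines its workers discarded, so its own context grows only by the size of the filtered findings.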

The multi-agent approach burns through tokens rapidly. Anthropic’s documentation notes that agents typically use about four times more tokens than chatbot interactions, and multi-agent systems use about 15 times more tokens than chats. For economic viability, these systems require tasks where the value is high enough to justify the increased cost.
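To put those multipliers in perspective, the short Python sketch below scales a chat-sized token count by the approximate figures quoted above. The multipliers are rough averages from the cited documentation, and the baseline of 10,000 tokens is an arbitrary number chosen for illustration.

```python
# Approximate multipliers relative to a chatbot interaction,
# taken from the figures quoted in the text.
MULTIPLIERS = {"chat": 1, "agent": 4, "multi_agent": 15}


def estimated_tokens(chat_tokens: int, mode: str) -> int:
    """Scale a chat-sized token count by the rough multiplier for a mode."""
    return chat_tokens * MULTIPLIERS[mode]


if __name__ == "__main__":
    baseline = 10_000  # arbitrary example value
    for mode in MULTIPLIERS:
        print(f"{mode:>11}: {estimated_tokens(baseline, mode):,} tokens")
```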

Best practices for humans

While using these agents is contentious in some programming circles, if you use one to code a project, knowing good software development practices helps to head off future problems. For example, it’s good to know about version control, making incremental backups, implementing one feature at a time, and testing it before moving on.

What people call “vibe coding”—creating AI-generated code without understanding what it’s doing—is clearly dangerous for production work. Shipping code you didn’t write yourself in a production environment is risky because it could introduce security issues or other bugs or begin gathering technical debt that could snowball over time.

Independent AI researcher Simon Willison recently argued that developers using coding agents still bear responsibility for proving their code works. “Almost anyone can prompt an LLM to generate a thousand-line patch and submit it for code review,” Willison wrote. “That’s no longer valuable. What’s valuable is contributing code that is proven to work.”

In fact, human planning is key. Claude Code’s best practices documentation recommends a specific workflow for complex problems: First, ask the agent to read relevant files and explicitly tell it not to write any code yet, then ask it to make a plan. Without these research and planning steps, the documentation warns, Claude’s outputs tend to jump straight to coding a solution.

Without planning, LLMs sometimes reach for quick solutions to satisfy a momentary objective that might break later if a project were expanded. So having some idea of what makes a good architecture for a modular program that can be expanded over time can help you guide the LLM to craft something more durable.

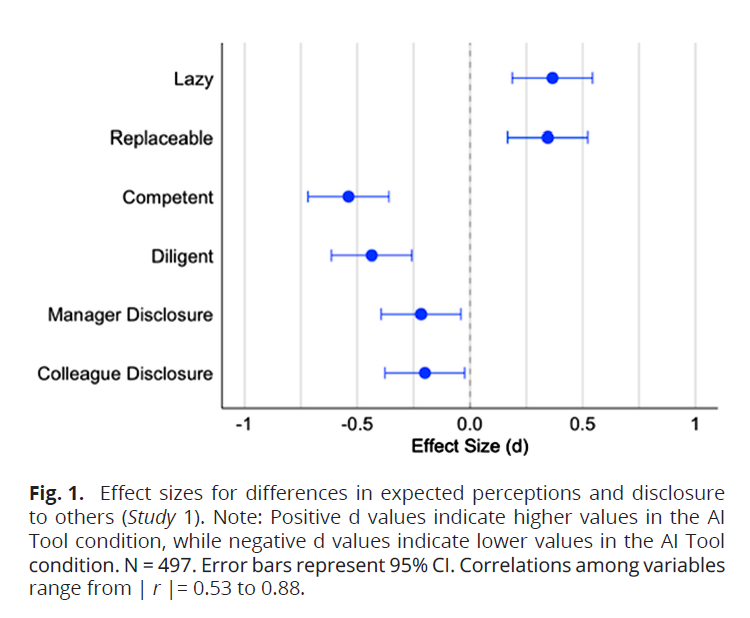

As mentioned above, these agents aren’t perfect, and some people prefer not to use them at all. A randomized controlled trial published by the nonprofit research organization METR in July 2025 found that experienced open-source developers actually took 19 percent longer to complete tasks when using AI tools, despite believing they were working faster. The study’s authors note several caveats: The developers were highly experienced with their codebases (averaging five years and 1,500 commits), the repositories were large and mature, and the models used (primarily Claude 3.5 and 3.7 Sonnet via Cursor) have since been superseded by more capable versions.

Whether newer models would produce different results remains an open question, but the study suggests that AI coding tools may not provide universal speed-ups, particularly for developers who already know their codebases well.

Given these potential hazards, coding proof-of-concept demos and internal tools is probably the ideal use of coding agents right now. Since AI models have no actual agency (despite being called agents) and are not people who can be held accountable for mistakes, human oversight is key.
