ChatGPT’s new branching feature is a good reminder that AI chatbots aren’t people

On Thursday, OpenAI announced that ChatGPT users can now branch conversations into multiple parallel threads. The feature serves as a useful reminder that AI chatbots aren’t people with fixed viewpoints but rather malleable tools you can rewind and redirect. The company released it to all logged-in web users following years of user requests for the capability.

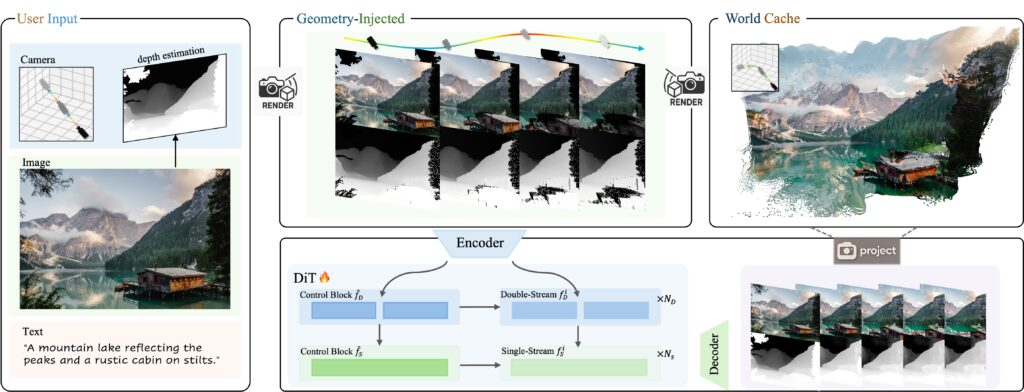

The feature works by letting users hover over any message in a ChatGPT conversation, click “More actions,” and select “Branch in new chat.” This creates a new conversation thread that includes all the conversation history up to that specific point, while preserving the original conversation intact.

Think of it almost like creating a new copy of a “document” to edit while keeping the original version safe—except that “document” is an ongoing AI conversation with all its accumulated context. For example, a marketing team brainstorming ad copy can now create separate branches to test a formal tone, a humorous approach, or an entirely different strategy—all stemming from the same initial setup.
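To make the copy-and-diverge idea concrete, here is a minimal sketch of how branching could be modeled as a data structure. It is not OpenAI’s implementation, which has not been published; the `Message` and `Conversation` classes and the `branch_at` method are hypothetical names invented for illustration. The sketch assumes a branch is simply a new conversation seeded with copies of every message up to the chosen point.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Message:
    role: str      # "user" or "assistant"
    content: str


@dataclass
class Conversation:
    """Hypothetical model of a chat thread; not OpenAI's actual design."""
    title: str
    messages: List[Message] = field(default_factory=list)
    parent_title: Optional[str] = None  # set only on branched conversations

    def add(self, role: str, content: str) -> None:
        self.messages.append(Message(role, content))

    def branch_at(self, index: int, title: str) -> "Conversation":
        """Return a new conversation holding copies of messages[0..index]."""
        if not 0 <= index < len(self.messages):
            raise IndexError("branch point is outside the conversation")
        # Copy each message so later edits to one thread cannot leak into the other.
        copied = [Message(m.role, m.content) for m in self.messages[: index + 1]]
        return Conversation(title=title, messages=copied, parent_title=self.title)


# Usage, loosely following the marketing example above (all text is made up).
original = Conversation("Ad copy")
original.add("user", "Draft ad copy for our new running shoe.")
original.add("assistant", "Here is a first draft...")
original.add("user", "Make it more formal.")

# Branch from the assistant's first draft and take a different direction.
humorous = original.branch_at(1, "Ad copy (humorous)")
humorous.add("user", "Make it funny instead.")
```

Both threads share the same first two messages, but each continues independently, and the original conversation is left untouched.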

A screenshot of conversation branching in ChatGPT. Credit: OpenAI

The feature addresses a longstanding limitation of ChatGPT’s interface: users who wanted to try different approaches had to either overwrite their existing conversation after a certain point by changing a previous prompt or start completely fresh. Branching makes it easy to explore what-if scenarios, and unlike in a human conversation, you can pursue several different approaches from the same starting point.

A 2024 study conducted by researchers from Tsinghua University and Beijing Institute of Technology suggested that linear dialogue interfaces for LLMs poorly serve scenarios involving “multiple layers, and many subtasks—such as brainstorming, structured knowledge learning, and large project analysis.” The study found that linear interaction forces users to “repeatedly compare, modify, and copy previous content,” increasing cognitive load and reducing efficiency.

Software developers have already responded positively to the update, with some comparing the feature to Git, the version control system that lets programmers create separate branches of code to test changes without affecting the main codebase. The comparison makes sense: Both allow you to experiment with different approaches while preserving your original work.
