College student’s “time travel” AI experiment accidentally outputs real 1834 history

A hobbyist developer building AI language models that speak Victorian-era English “just for fun” got an unexpected history lesson this week when his latest creation mentioned real protests from 1834 London—events the developer didn’t know had actually happened until he Googled them.

“I was interested to see if a protest had actually occurred in 1834 London and it really did happen,” wrote Reddit user Hayk Grigorian, who is a computer science student at Muhlenberg College in Pennsylvania.

For the past month, Grigorian has been developing what he calls TimeCapsuleLLM, a small AI language model (like a pint-sized distant cousin to ChatGPT) which has been trained entirely on texts from 1800–1875 London. Grigorian wants to capture an authentic Victorian voice in the AI model’s outputs. As a result, the AI model ends up spitting out text that’s heavy with biblical references and period-appropriate rhetorical excess.

Grigorian’s project joins a growing body of research into what some call “Historical Large Language Models” (HLLMs), a term generally applied to models built on a larger base model than the small one Grigorian is using. Similar projects include MonadGPT, which was trained on 11,000 texts from 1400 to 1700 CE and can discuss topics using 17th-century knowledge frameworks, and XunziALLM, which generates classical Chinese poetry following ancient formal rules. These models offer researchers a chance to interact with the linguistic patterns of past eras.

According to Grigorian, TimeCapsuleLLM’s most intriguing recent output emerged from a simple test. When he prompted it with “It was the year of our Lord 1834,” the AI model—which is trained to continue text from wherever a user leaves off—generated the following:

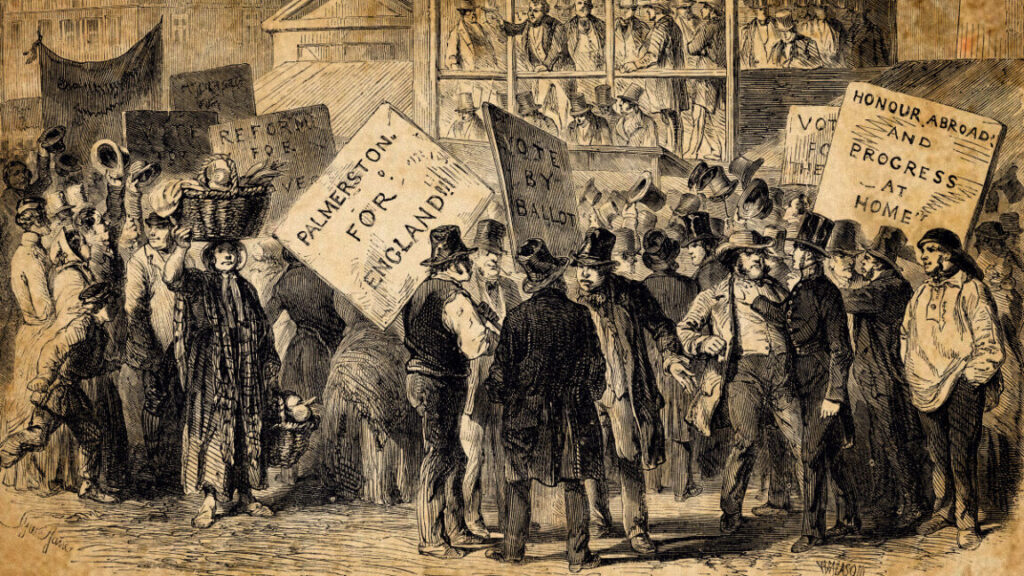

It was the year of our Lord 1834 and the streets of London were filled with protest and petition. The cause, as many re counted, was not bound in the way of private, but having taken up the same day in the day of Lord Palmerston, the public will receive a short statement of the difficulties under which the day of law has reached us. It is a matter of deep regret, that the present events in the history of the world are clear, and consequently will be’known. It is not true that the very men who first settled in the Gospel at Jerusalem should have so extensive and so interesting a record of the prosperity and prosperity

Curious about the accuracy, Grigorian did some fact-checking. “The output also brought up Lord Palmerston,” he wrote, “and after a google search I learned that his actions resulted in the 1834 protests.”
