ChatGPT users hate GPT-5’s “overworked secretary” energy, miss their GPT-4o buddy

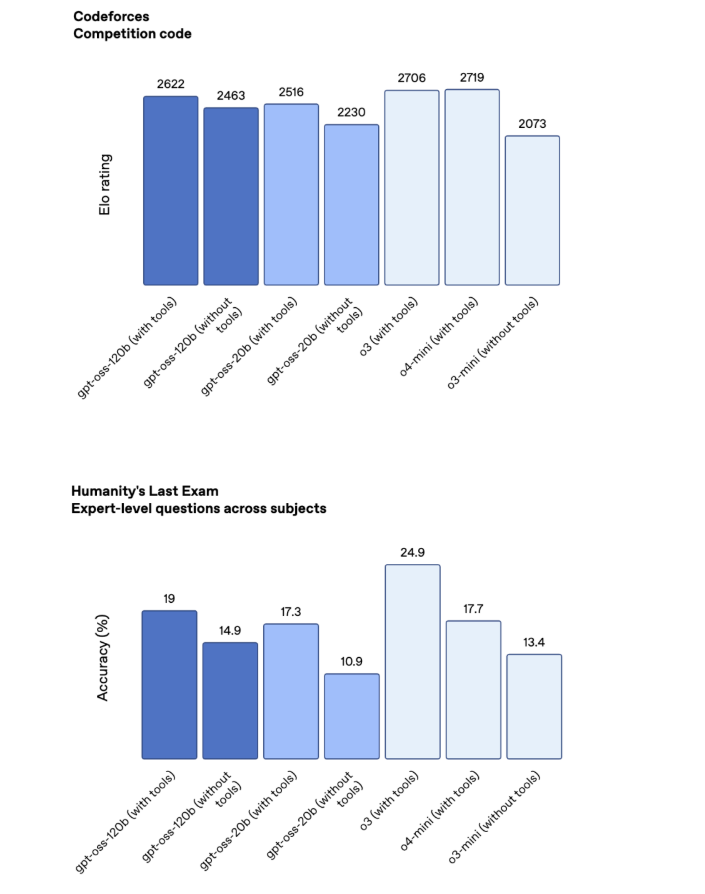

Others are irked by how quickly they run up against usage limits on the free tier, which pushes them toward the Plus ($20) and Pro ($200) subscriptions. But running generative AI is hugely expensive, and OpenAI is hemorrhaging cash. It wouldn’t be surprising if the wide rollout of GPT-5 is aimed at increasing revenue. At the same time, OpenAI can point to AI evaluations that show GPT-5 is more intelligent than its predecessor.

RIP your AI buddy

OpenAI built ChatGPT to be a tool people want to use. It’s a fine line to walk: OpenAI has occasionally made its flagship AI too friendly and complimentary. Several months ago, the company had to roll back a change that turned the bot into a sycophantic mess that would suck up to the user at every opportunity. That was a bridge too far, certainly, but many of the company’s users liked the generally friendly tone of the chatbot. Those users tuned the AI with custom prompts and built it into a personal companion. They’ve lost that with GPT-5.

Naturally, ChatGPT users have turned to AI to express their frustration. Credit: /u/Responsible_Cow2236

There are reasons to be wary of this kind of parasocial attachment to artificial intelligence. As companies tune these systems to increase engagement, they prioritize outputs that make people feel good. The resulting interactions can reinforce delusions, eventually leading to serious mental health episodes and dangerous medical beliefs. It can be hard to understand for those of us who don’t spend our days having casual conversations with ChatGPT, but the Internet is teeming with folks who build their emotional lives around AI.

Is GPT-5 safer? Early impressions from frequent chatters decry the bot’s more corporate, less effusively creative tone. In short, a significant number of people don’t like the outputs as much. GPT-5 could be a more able analyst and worker, but it isn’t the digital companion people have come to expect, and in some cases, love. That might be good in the long term, both for users’ mental health and OpenAI’s bottom line, but there’s going to be an adjustment period for fans of GPT-4o.

Chatters who are unhappy with the more straightforward tone of GPT-5 can always go elsewhere. Elon Musk’s xAI has shown it is happy to push the envelope with Grok, featuring Taylor Swift nudes and AI waifus. Of course, Ars does not recommend you do that.
