Apple says it has “a big week ahead.” Here’s what we expect to see.

it’s what’s on the inside that counts

Apple is taking an “ain’t broke/don’t fix” approach to most of its gadgets.

Apple’s 2018-era design for the then-Intel-powered MacBook Air. The M1 Air used largely the same design, and we expect Apple’s lower-cost MacBook to look pretty similar. Credit: Valentina Palladino

Aside from the AirTag 2, it’s been a quiet year for Apple hardware so far. But that’s poised to change next week, as the company is hosting a “special experience” on March 4.

The use of the word “experience,” rather than “event” or “presentation,” implies that Apple’s typical presentation format won’t apply here. CEO Tim Cook more or less confirmed this when he posted that the company had “a big week ahead,” starting on Monday. Apple is most likely planning multiple days of product launches announced via press releases on its Newsroom site, with Wednesday’s “experience” serving as a capper and a hands-on session for the media.

Apple has used a similar strategy before, spacing out relatively low-key refreshes over several days to generate sustained interest rather than dropping everything in a single 30- to 60-minute string of pre-recorded videos.

Reporting on what, exactly, Apple plans to announce has consistently centered on a small handful of specific devices, but with the exception of the iPhone 17 series, the M5 Vision Pro, and the Apple Watch, most of Apple’s major products have gone long enough without an update that anything is possible. Here’s what we consider to be the most likely, and a few other notes besides.

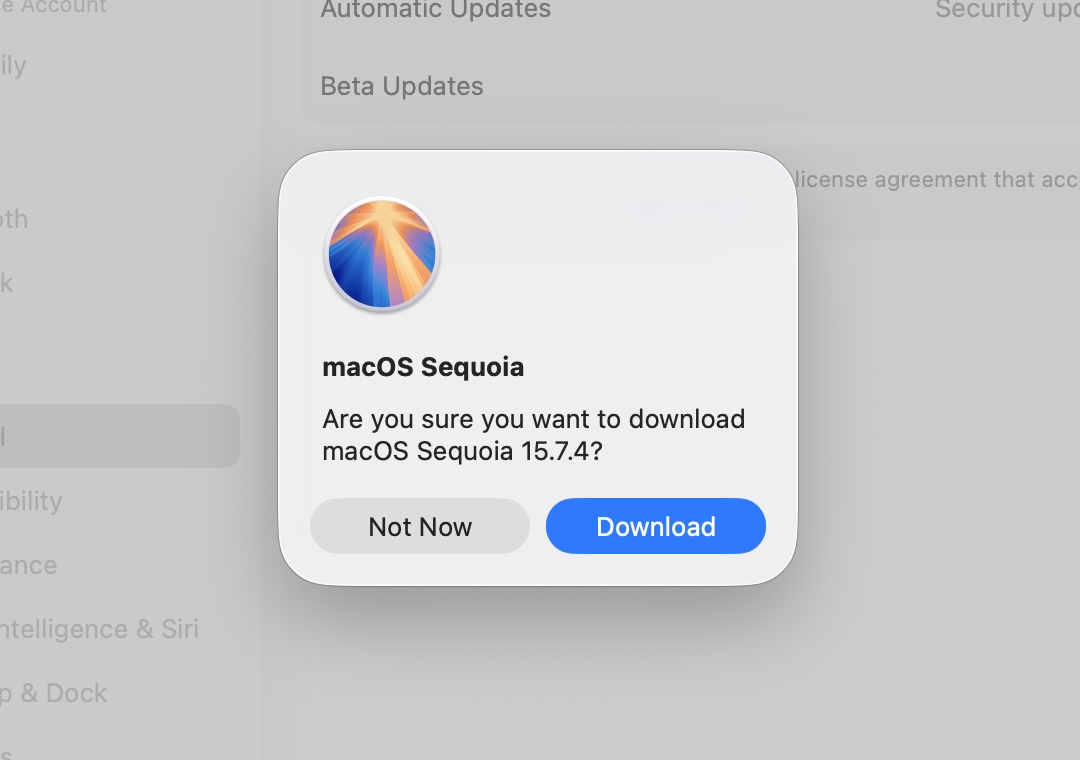

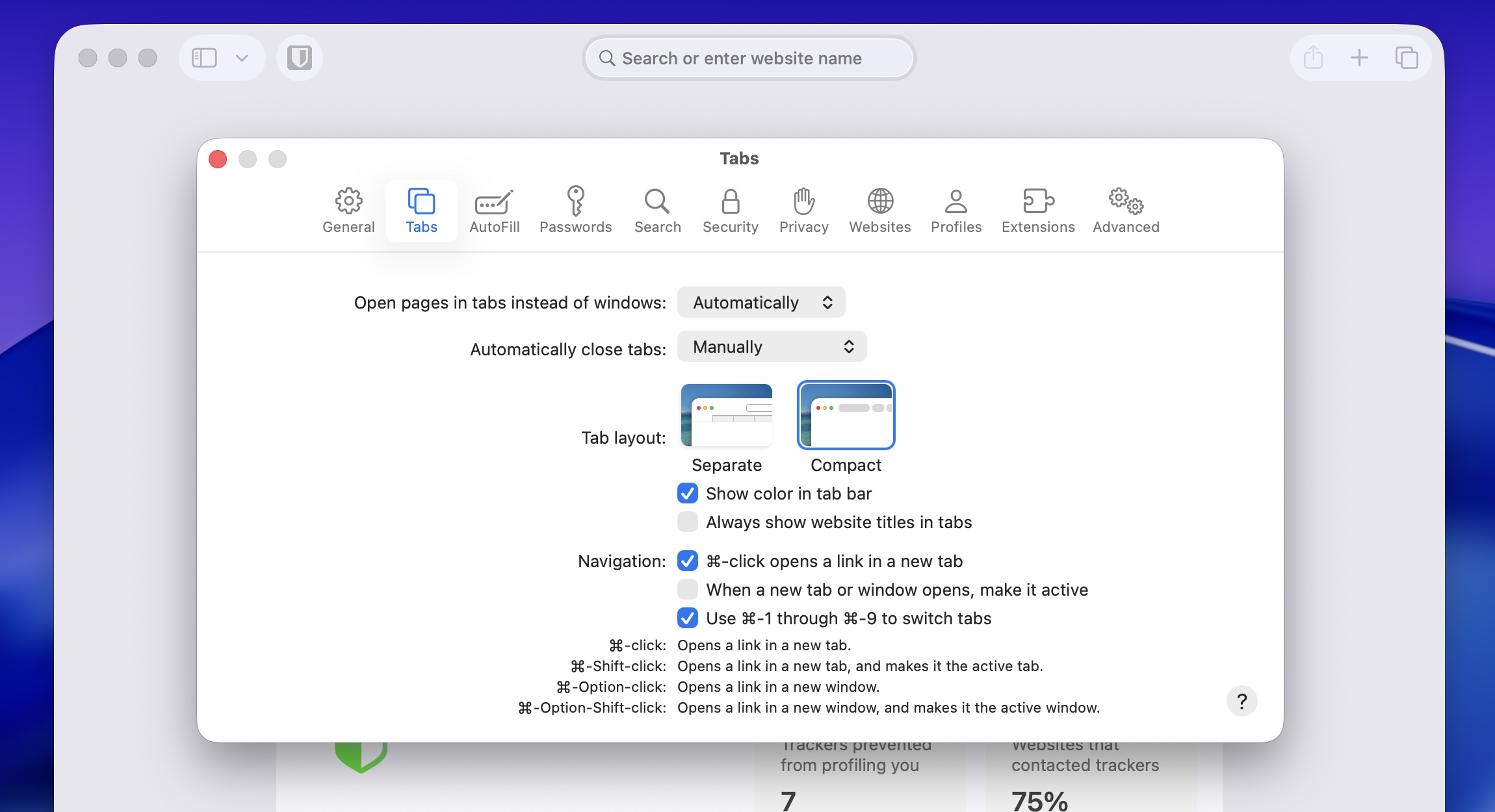

The long-awaited “budget” MacBook

Most rumors and leaks agree that Apple is preparing to launch a new MacBook priced well below the MacBook Air, in a style similar to the $349 iPad or the iPhone 16e. Commonly cited specs include a 13-inch-ish screen and an Apple A18 Pro chip, which debuted in the iPhone 16 Pro in 2024 and is typically packaged with 8GB of RAM. The laptop is also said to be coming in multiple colors, taking a page from the iMac and the basic iPad.

Rumors of a “cheap” MacBook purpose-built for cost-conscious buyers have circulated since the late 2000s, if not before. But if any such machine has existed in Apple’s labs, it has never made it to stores, and Apple’s laptops have reliably started at around $1,000 for over 20 years.

In the two years since removing the M1 MacBook Air from its online store, Apple has used it as a sort of trial balloon. Since early 2024, the laptop has been available in the US only through Walmart, with a basic 8GB of RAM and 256GB of storage. It has been priced in the same $600 to $700 range as midrange Windows laptops and higher-end Chromebooks, and it has apparently done well enough to merit a true successor.

I expect Apple to follow the same pattern it used when it launched the $329 iPad in 2017 and the iPhone SE in 2016, essentially reusing the 2020-era MacBook Air’s design and other components to the greatest degree possible.

These are already parts that Apple and its suppliers have a lot of experience manufacturing, and they’ve been around long enough that they’re probably about as inexpensive as they’re going to get. They’re also proven components that meet Apple’s usual standards for materials and build quality. If that leaves the new MacBook slightly out of step with the rest of Apple’s laptop designs, that’s a compromise the company has been willing to make in the past.

Some of the details of this system will probably be a surprise, but we can expect Apple to create some intentional distance between this MacBook and the MacBook Air, the same as it does for the low-end iPad and iPhone. The processor will be one limitation; the potential 8GB RAM ceiling, limited upgrade options, fewer and less-capable ports, and limited external display support may be others.

This thing is likely destined to be an email, browsing, and casual phone-camera-photo-editing machine for people who prefer a traditional clamshell laptop to an iPad. The $999-and-up MacBook Air will continue to be Apple’s default do-anything laptop, and the MacBook Pro will continue to occupy the “do-anything, but faster” position.

The $349 iPad

Apple’s basic $349 iPad could get an Apple Intelligence update, thanks to a processor and RAM bump. Credit: Andrew Cunningham

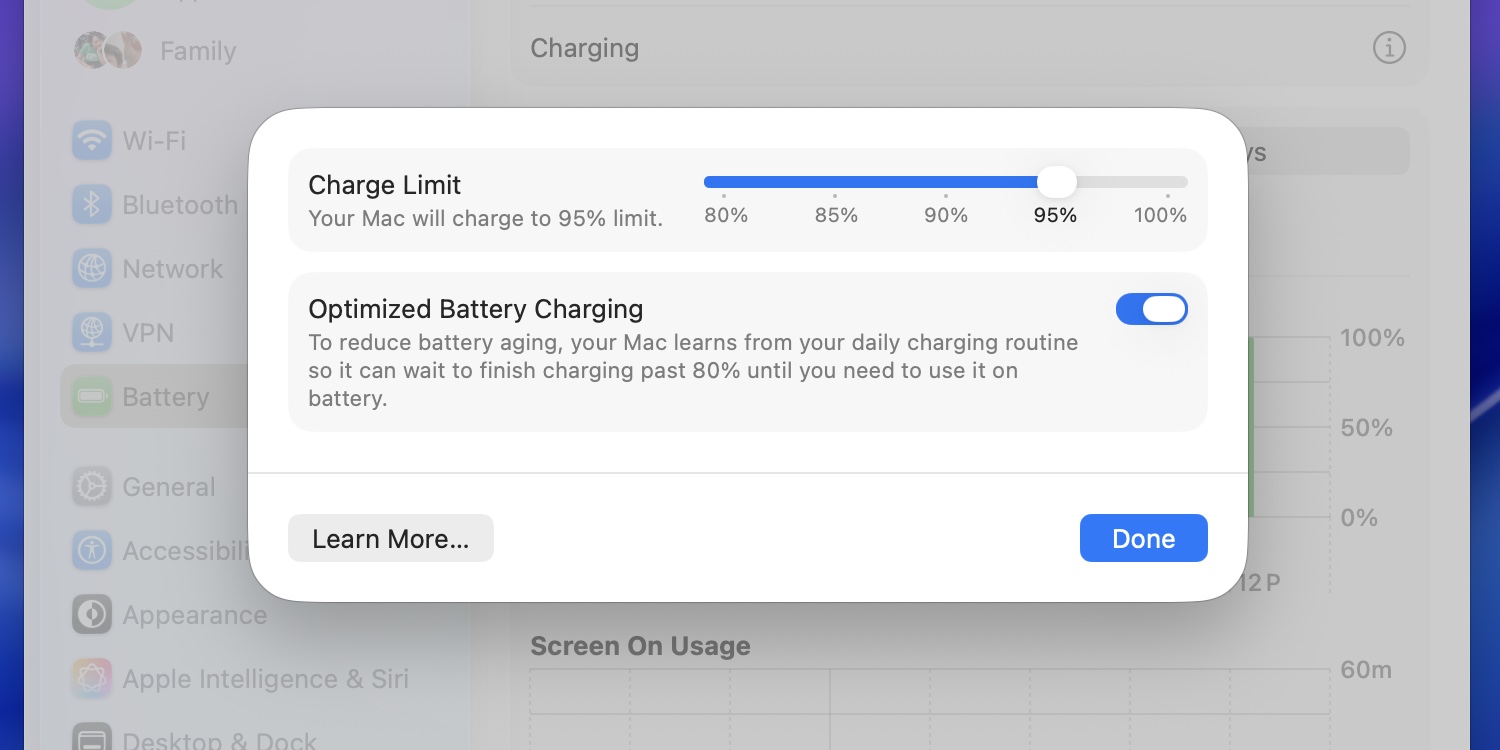

Speaking of the Apple A18 series, Apple is apparently planning a refresh of its $349 base-model iPad using an A18 or possibly an A19 chip. Assuming it comes with 8GB of RAM, up from 6GB in the current Apple A16-powered iPad, either chip would help it clear the bar for Apple Intelligence support.

Apple doesn’t always update its basic iPad every year; in 2024, for instance, it got a price drop rather than a hardware refresh. But the A16 iPad is currently the only thing in the entire iPhone/iPad/Mac lineup without support for Apple Intelligence, a bundle of features that Apple markets pretty heavily despite their functional unevenness. That marketing campaign is likely to intensify when Apple finally releases its new Google Gemini-powered Siri update at some point this year.

Even if you don’t care about Apple Intelligence, a basic iPad with 8GB of RAM will be a win for most users, since you can use that extra RAM for all kinds of things that have nothing to do with AI. It’s the same amount of memory Apple has shipped with the iPad Air since the M1 model, and with several generations of iPad Pro. Even attached to a slower processor, this should still improve the multitasking and productivity experience on the tablet.

The iPhone 17e

Apple used to let the old iPhone SE languish for at least a couple of years between updates, but it’s apparently taking a different tack with the “e” iPhones.

The main attraction of this refresh is a new chip, reportedly an upgrade from the Apple A18 to the A19. The 17e is also said to be picking up MagSafe charging support, making it compatible with Apple-made and third-party accessories that magnetically clamp to the back of other iPhones.

Other than that, the rumor mill suggests that the 17e will stick with its notched screen rather than a Dynamic Island, and we’d be surprised to see it move beyond its basic one-lens camera. Assuming Apple sticks with the same $599 starting price, though, there will still be some awkward overlap among the 17e, the iPhone 16, and the regular iPhone 17.

The iPad Air

Do you like the current iPad Air with the Apple M3? Or the last one with the Apple M2?

Then you’re in luck, because a next-generation iPad Air is likely to continue in the same vein, picking up a new M4 chip but not changing much else. If you’re holding out for something more exciting, like improved screen technology, you’ll likely be disappointed.

There’s no word on whether the M4 iPad Air will get any other internal upgrades, like more RAM or more storage in the base model. Either or both of those could spice up an otherwise straightforward update.

Other possibilities

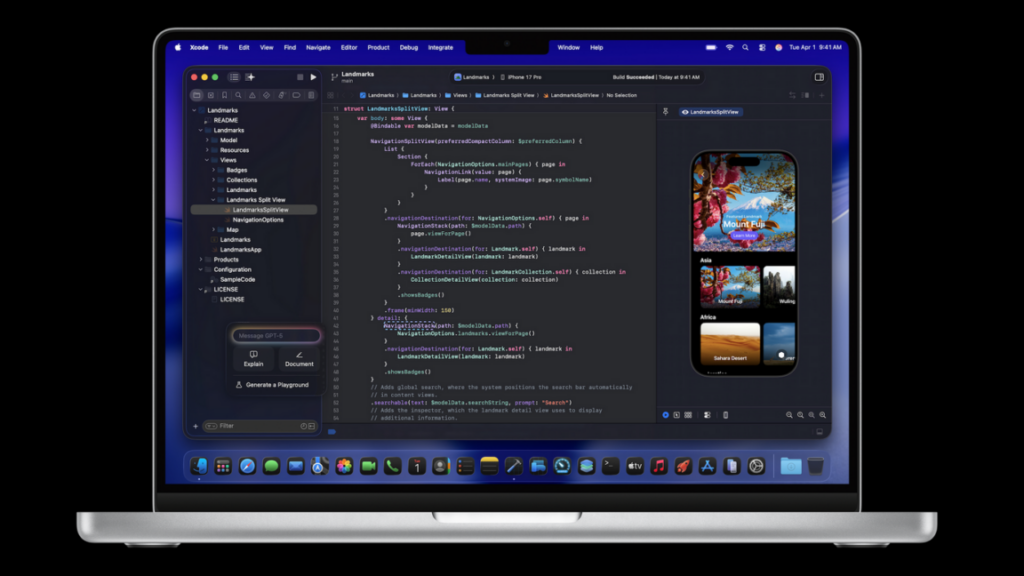

Apple could update the remaining M4-family MacBook Pros (pictured) with M5-family replacements. Credit: Andrew Cunningham

Apple could choose to refresh almost any of its Macs next week. Only the low-end MacBook Pro has an M5 chip, and it has been at least a year since the rest of the lineup was last updated. No refresh would come as a true surprise, with the possible exception of the Mac Pro, which Apple has allegedly put “on the back burner” (again).

Higher-end MacBook Pros with M5 Pro and M5 Max processors would be the most interesting updates, since they would be the first Macs with the higher-end members of the M5 family. But if you’re not desperate for an upgrade, it might be better to wait a while longer. These M5 models are said to keep the design Apple has used for the MacBook Pro for the last five years, and a more significant redesign with OLED touchscreens and the Mac’s first Dynamic Island could be on the horizon.

M5 updates for the 13- and 15-inch MacBook Air, the iMac, the Mac mini, and the Mac Studio could happen, too; none of these computers is said to be getting any kind of significant design overhaul this generation. I would, however, be surprised if Apple chose to refresh these Macs all at once. Updating some models now and holding others back until later in the spring, or even until the Worldwide Developers Conference in June, would be more in keeping with Apple’s past practice.

As for other devices, reports have circulated for months about an imminent update for the Apple TV box, last refreshed in 2022. It has yet to materialize and is not mentioned on any shortlist for next week’s announcements, but an update is well overdue, and a new chip like the A18 or A19 would be necessary if Apple wanted to start bringing Apple Intelligence features to tvOS.

The common theme of all these refreshes is that the changes will happen primarily on the inside rather than the outside. What’s inside a device often matters more than how it looks, and these kinds of chip-only updates usually succeed in keeping Apple’s hardware feeling fresh. Just don’t expect many interesting new things to look at.

Andrew is a Senior Technology Reporter at Ars Technica, with a focus on consumer tech including computer hardware and in-depth reviews of operating systems like Windows and macOS. Andrew lives in Philadelphia and co-hosts a weekly book podcast called Overdue.
