Unpacking Passkeys Pwned: Possibly the most specious research in decades

Researchers take note: When the endpoint is compromised, all bets are off.

Don’t believe everything you read—especially when it’s part of a marketing pitch designed to sell security services.

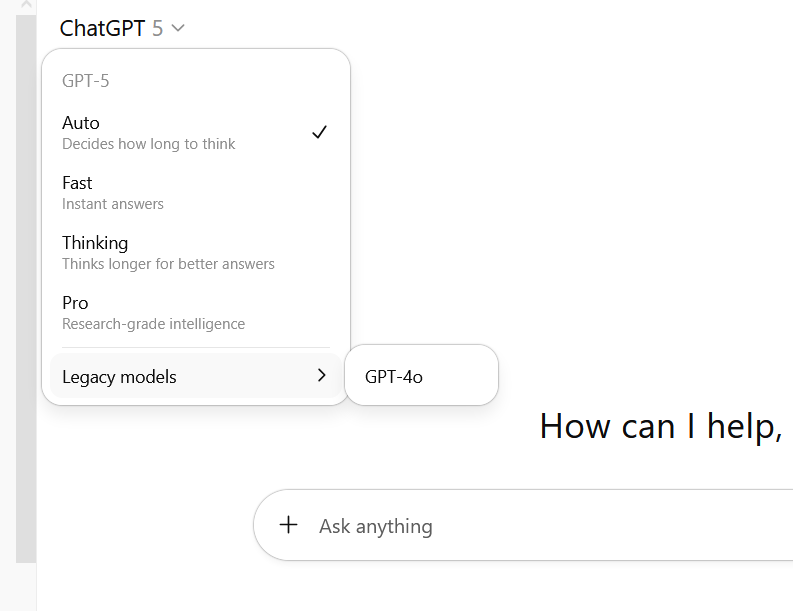

The latest example of the runaway hype that can come from such pitches is research published today by SquareX, a startup selling services for securing browsers and other client-side applications. It claims, without basis, to have found a “major passkey vulnerability” that undermines the lofty security promises made by Apple, Google, Microsoft, and thousands of other companies that have enthusiastically embraced passkeys.

Ahoy, face-palm ahead

“Passkeys Pwned,” the attack described in the research, was demonstrated earlier this month in a Defcon presentation. It relies on a malicious browser extension, installed in an earlier social engineering attack, that hijacks the process for creating a passkey for use on Gmail, Microsoft 365, or any of the other thousands of sites that now use the alternative form of authentication.

Behind the scenes, the extension allows a keypair to be created and binds it to the legitimate gmail.com domain, but the keypair is created by the malware and controlled by the attacker. With that, the adversary has access to cloud apps that organizations use for their most sensitive operations.

“This discovery breaks the myth that passkeys cannot be stolen, demonstrating that ‘passkey stealing’ is not only possible, but as trivial as traditional credential stealing,” SquareX researchers wrote in a draft version of Thursday’s research paper sent to me. “This serves as a wake up call that while passkeys appear more secure, much of this perception stems from a new technology that has not yet gone through decades of security research and trial by fire.”

In fact, this claim is the thing that’s untested. More on that later. For now, here’s a recap of passkeys.

FIDO recap

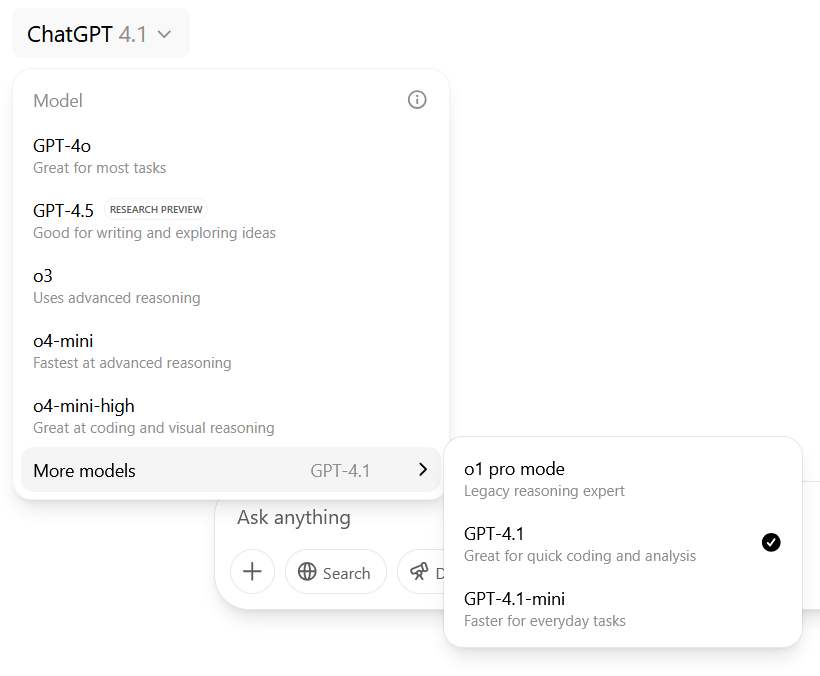

Passkeys are a core part of the FIDO specifications drafted by the FIDO (Fast IDentity Online) Alliance, a coalition of hundreds of companies around the world. A passkey is a public-private cryptographic keypair that uses ES256 or one of several other time-tested cryptographic algorithms. During the registration process, a unique keypair is made for, and cryptographically bound to, each website the user enrolls with. The website stores the public key. The private key remains solely on the user’s authentication device, which can be a smartphone, dedicated security key, or other device.

When the user logs in, the website sends the user a pseudo-random string of data known as a challenge. The authentication device then uses the private key bound to the website domain to cryptographically sign the challenge. The browser then sends the signed challenge back to the website. The site then uses the user’s public key to verify that the challenge was signed by the private key. If the signature is valid, the user is logged in. The entire process is generally as quick as, if not quicker than, logging in to the site with a password.
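The registration and login steps described above can be sketched in a few lines of Python. This is a simplified model, not the WebAuthn wire format. The `Authenticator` and `Website` classes and their methods are hypothetical names, and Lamport one-time signatures (built from SHA-256) stand in for ES256 only so the sketch runs on the standard library. A Lamport key should sign a single message, so the example performs just one login.

```python
import hashlib
import secrets


def _h(data):
    return hashlib.sha256(data).digest()


def _digest_bits(message):
    """Return the 256 bits of SHA-256(message), most significant bit first."""
    digest = _h(message)
    return [(digest[i // 8] >> (7 - i % 8)) & 1 for i in range(256)]


def generate_keypair():
    """Lamport keypair (a stand-in for ES256): random secrets and their hashes."""
    private = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    public = [(_h(zero), _h(one)) for zero, one in private]
    return private, public


def sign(private, message):
    """Reveal one secret per digest bit. Each key must sign one message only."""
    return [private[i][bit] for i, bit in enumerate(_digest_bits(message))]


def verify(public, message, signature):
    bits = _digest_bits(message)
    return len(signature) == 256 and all(
        _h(part) == public[i][bit]
        for i, (part, bit) in enumerate(zip(signature, bits))
    )


class Authenticator:
    """Hypothetical authenticator: private keys never leave this object."""

    def __init__(self):
        self._private_keys = {}  # domain -> private key

    def register(self, domain):
        private, public = generate_keypair()
        self._private_keys[domain] = private
        return public  # only the public key is handed to the website

    def sign_challenge(self, domain, challenge):
        # Simplification: sign domain + challenge rather than WebAuthn's
        # authenticator data and client data hash.
        return sign(self._private_keys[domain], domain.encode() + challenge)


class Website:
    """Hypothetical relying party that stores one public key per user."""

    def __init__(self, domain):
        self.domain = domain
        self.public_keys = {}  # username -> public key

    def enroll(self, username, public_key):
        self.public_keys[username] = public_key

    def new_challenge(self):
        return secrets.token_bytes(32)

    def check_login(self, username, challenge, signature):
        public = self.public_keys[username]
        return verify(public, self.domain.encode() + challenge, signature)


authenticator = Authenticator()
site = Website("gmail.com")
site.enroll("alice", authenticator.register("gmail.com"))

challenge = site.new_challenge()
signature = authenticator.sign_challenge("gmail.com", challenge)
login_ok = site.check_login("alice", challenge, signature)
# The same signature presented against a different challenge must fail.
replay_ok = site.check_login("alice", site.new_challenge(), signature)
print(login_ok, replay_ok)
```

Running the sketch prints `True False`: the signature over the fresh challenge verifies, while the same signature checked against a different challenge does not.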

As I’ve noted before, passkeys still have a long way to go before they’re ready for many users. That’s mainly because passkeys don’t always interoperate well between different platforms. What’s more, they’re so new that no service yet offers accounts that can be logged in to only with a passkey. Instead, services require a password to be registered as a fallback. And as long as attackers can still phish or steal a user’s password, much of the benefit of passkeys is undermined.

That said, passkeys provide an authentication alternative that’s by far the most resistant to date to the types of account takeovers that have vexed online services and their users for decades. Unlike passwords, passkey keypairs can’t be phished. If a user gets redirected to a fake Gmail page, the passkey won’t work since it’s bound to the real gmail.com domain. Passkeys can’t be divulged in phone calls or text messages sent by attackers masquerading as trusted IT personnel. They can’t be sniffed over the wire. They can’t be leaked in database breaches. To date, there have been no vulnerabilities reported in the FIDO spec.
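A rough sketch of why a look-alike page gets nothing: the browser, not the page, tells the authenticator which domain is asking, and the authenticator only looks up credentials stored under that domain. The class and function names below are hypothetical, the key is reduced to an opaque label, the phishing hostname is invented, and real WebAuthn matching of origins against registered domains has more rules than this exact-match lookup.

```python
from urllib.parse import urlsplit


class Authenticator:
    """Hypothetical authenticator mapping each domain to its credential."""

    def __init__(self):
        self._credentials = {}

    def register(self, domain, credential):
        self._credentials[domain] = credential

    def find_credential(self, domain):
        # No fuzzy matching: an unknown domain simply has no credential.
        return self._credentials.get(domain)


def browser_requests_passkey(authenticator, page_url):
    """The domain comes from the URL the browser actually loaded,
    not from anything the page claims about itself."""
    domain = urlsplit(page_url).hostname
    return authenticator.find_credential(domain)


authenticator = Authenticator()
authenticator.register("gmail.com", "private-key-for-gmail")

real = browser_requests_passkey(authenticator, "https://gmail.com/signin")
fake = browser_requests_passkey(
    authenticator, "https://gmail.com.login-check.example/signin"
)
print(real, fake)
```

This prints `private-key-for-gmail None`. The look-alike hostname has no credential to offer, however convincing the page looks. The sketch depends on the browser reporting the domain honestly.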

A fundamental misunderstanding of security

SquareX is now claiming all of that has changed because it found a way to hijack the passkey registration process. Those claims are based on a lack of familiarity with the FIDO spec, flawed logic, and a fundamental misunderstanding of security in general.

First, the claim that Passkeys Pwned shows that passkeys can be stolen is flat-out wrong. If the targeted user has already registered a passkey for Gmail, that key will remain safely stored on the authenticator device. The attacker never comes close to stealing it. Using malware to hijack the registration process is something altogether different. If a user already has a passkey registered, Passkeys Pwned will block the login and return an error message that prompts the user to register a new passkey. If the user takes the bait, the new key will be controlled by the attacker. At no time are any passkeys stolen.
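The difference between stealing a key and hijacking registration can be made concrete with a toy model. Everything here is hypothetical: the `KeyPair`, `Site`, and function names are invented, a keypair is just a random secret plus its SHA-256 hash standing in for the public key, and the "extension" is a plain function that bears no relation to SquareX's actual code.

```python
import hashlib
import secrets
from dataclasses import dataclass, field


@dataclass
class KeyPair:
    """Toy keypair: a random secret, with its hash standing in for the public key."""
    private: bytes = field(default_factory=lambda: secrets.token_bytes(32))

    @property
    def public(self):
        return hashlib.sha256(self.private).hexdigest()


class Site:
    """Hypothetical site that records every public key registered for an account."""

    def __init__(self):
        self.registered = set()

    def register(self, public_key):
        self.registered.add(public_key)


def honest_registration(site, authenticator_keys):
    """The user's authenticator makes the keypair and keeps the private half."""
    pair = KeyPair()
    authenticator_keys.append(pair)
    site.register(pair.public)
    return pair


def hijacked_registration(site, attacker_keys):
    """Malicious-extension path: the attacker's code makes the keypair,
    so the user's authenticator is never involved."""
    pair = KeyPair()
    attacker_keys.append(pair)
    site.register(pair.public)
    return pair


site = Site()
user_keys, attacker_keys = [], []

original = honest_registration(site, user_keys)        # before the compromise
planted = hijacked_registration(site, attacker_keys)   # user "re-registers"

attacker_has_original = any(k.private == original.private for k in attacker_keys)
attacker_can_log_in = planted.public in site.registered
print(attacker_has_original, attacker_can_log_in)
```

The output is `False True`: the attacker ends up with a credential the site accepts, yet the original private key never leaves the user's authenticator. Nothing is stolen. A new key is planted, and only because the endpoint was already compromised.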

The research also fails to take into account that the FIDO spec makes clear that passkeys provide no defense against attacks that rely on a compromise of the operating system or the browser running on it. Such attacks fall outside the FIDO threat model.

Section 6 of the spec lists specific “security assumptions” inherent in the passkeys trust model. SA-3 states that “Applications on the user device are able to establish secure channels that provide trustworthy server authentication, and confidentiality and integrity for messages.” SA-4 holds that “the computing environment on the FIDO user device and the… applications involved in a FIDO operation act as trustworthy agents of the user.” WebAuthn, the W3C specification that browsers use to implement passkeys, hints at the same common-sense limitation.

By definition, an attack that relies on a browser infected by malware falls well outside the scope of protections passkeys were designed to provide. If passkeys are weak because they can’t withstand a compromise of the endpoint they run on, so too are protections we take for granted in TLS encryption and end-to-end encryption in messengers such as Signal—not to mention the security of SquareX services themselves. Further discrediting itself, Thursday’s writeup includes a marketing pitch for the SquareX platform.

“In my personal view, this seems like a dubious sales pitch for a commercial product,” Kenn White, a security engineer who works for banking, health care, and defense organizations, wrote in an interview. “If you are social engineered into adding a malicious extension, ALL web trust models are broken. I know that on the conference program committees I participate in, a submission like this would be eliminated in the first round.”

When you’re in a hole, stop digging

I enumerated these criticisms in an interview with SquareX lead developer Shourya Pratap Singh. He held his ground, saying that since Passkeys Pwned binds an attacker-controlled passkey to a legitimate site, “the passkey is effectively stolen.” He also bristled when I told him his research didn’t appear to be well thought out and when I pointed out that the FIDO spec, just like those for TLS, SSH, and others, explicitly excludes attacks relying on trojan infections.

He wrote:

“This research was presented on the DEFCON Main Stage, which means it went through peer review by technical experts before selection. The warnings cited in the FIDO documents read like funny disclaimers, listing numerous conditions and assumptions before concluding that passkeys can be used securely. If we stick with that logic, then no authentication protocol would be considered secure. The purpose of a secure authentication method or protocol is not to remain secure in the face of a fully compromised device, but it should account for realistic client-side risks such as malicious extensions or injected JavaScript.

“Passkeys are being heavily promoted today, but the average user is not aware of these hidden conditions. This research aims to highlight that gap and show why client-side risks need to be part of the conversation around passkeys.”

The Passkeys Pwned research was presented just weeks after a separate security company made, and promptly withdrew, claims that it had devised an attack that bypassed FIDO-based two-factor authentication. In fact, the sites that were attacked offered FIDO as only one means of 2FA and also allowed other, less secure forms. The attack targeted those other forms, not the one specified by FIDO. Had the sites not allowed fallbacks to the weaker 2FA forms, the attack would have failed.

SquareX is right in saying that passkeys haven’t withstood decades of security research the way more traditional forms of authentication have. There very possibly will be vulnerabilities discovered in either the FIDO spec or various implementations of it. For now, though, passkeys remain the best defense against attacks relying on things like credential phishing, password reuse, and database breaches.

Dan Goodin is Senior Security Editor at Ars Technica, where he oversees coverage of malware, computer espionage, botnets, hardware hacking, encryption, and passwords. In his spare time, he enjoys gardening, cooking, and following the independent music scene. Dan is based in San Francisco. Follow him on Mastodon and Bluesky. Contact him on Signal at DanArs.82.
