OpenAI researcher quits over ChatGPT ads, warns of “Facebook” path

On Wednesday, former OpenAI researcher Zoë Hitzig published a guest essay in The New York Times announcing that she had resigned from the company on Monday, the same day OpenAI began testing advertisements inside ChatGPT. Hitzig, an economist and published poet who holds a junior fellowship at the Harvard Society of Fellows, spent two years at OpenAI helping shape how its AI models were built and priced. She wrote that OpenAI’s advertising strategy risks repeating the mistakes that Facebook made a decade ago.

“I once believed I could help the people building A.I. get ahead of the problems it would create,” Hitzig wrote. “This week confirmed my slow realization that OpenAI seems to have stopped asking the questions I’d joined to help answer.”

Hitzig did not call advertising itself immoral. Instead, she argued that the nature of the data at stake makes ChatGPT ads especially risky. Users have shared medical fears, relationship problems, and religious beliefs with the chatbot, she wrote, often “because people believed they were talking to something that had no ulterior agenda.” She called this accumulated record of personal disclosures “an archive of human candor that has no precedent.”

She also drew a direct parallel to Facebook’s early history, noting that the social media company once promised users control over their data and the ability to vote on policy changes. Those pledges eroded over time, Hitzig wrote, and the Federal Trade Commission found that privacy changes Facebook marketed as giving users more control actually did the opposite.

She warned that a similar trajectory could play out with ChatGPT: “I believe the first iteration of ads will probably follow those principles. But I’m worried subsequent iterations won’t, because the company is building an economic engine that creates strong incentives to override its own rules.”

Ads arrive after a week of AI industry sparring

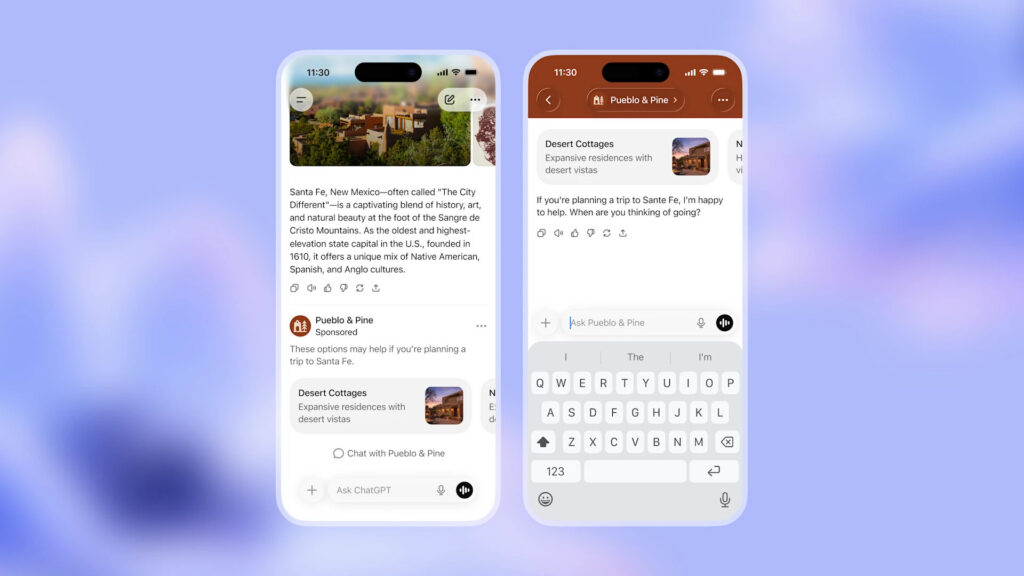

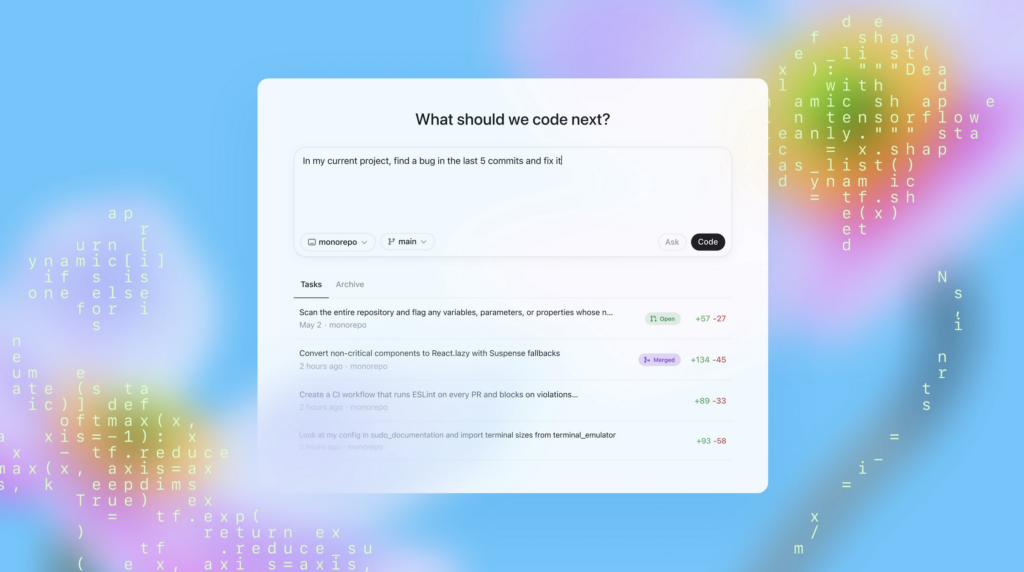

Hitzig’s resignation adds another voice to a growing debate over advertising in AI chatbots. OpenAI announced in January that it would begin testing ads in the US for users on its free and $8-per-month “Go” subscription tiers, while paid Plus, Pro, Business, Enterprise, and Education subscribers would not see ads. The company said ads would appear at the bottom of ChatGPT responses, would be clearly labeled, and would not influence the chatbot’s answers.
