You won’t believe the excuses lawyers have after getting busted for using AI

Amid what one judge called an “epidemic” of fake AI-generated case citations bogging down courts, some common excuses are emerging from lawyers hoping to dodge the most severe sanctions for filings deemed misleading.

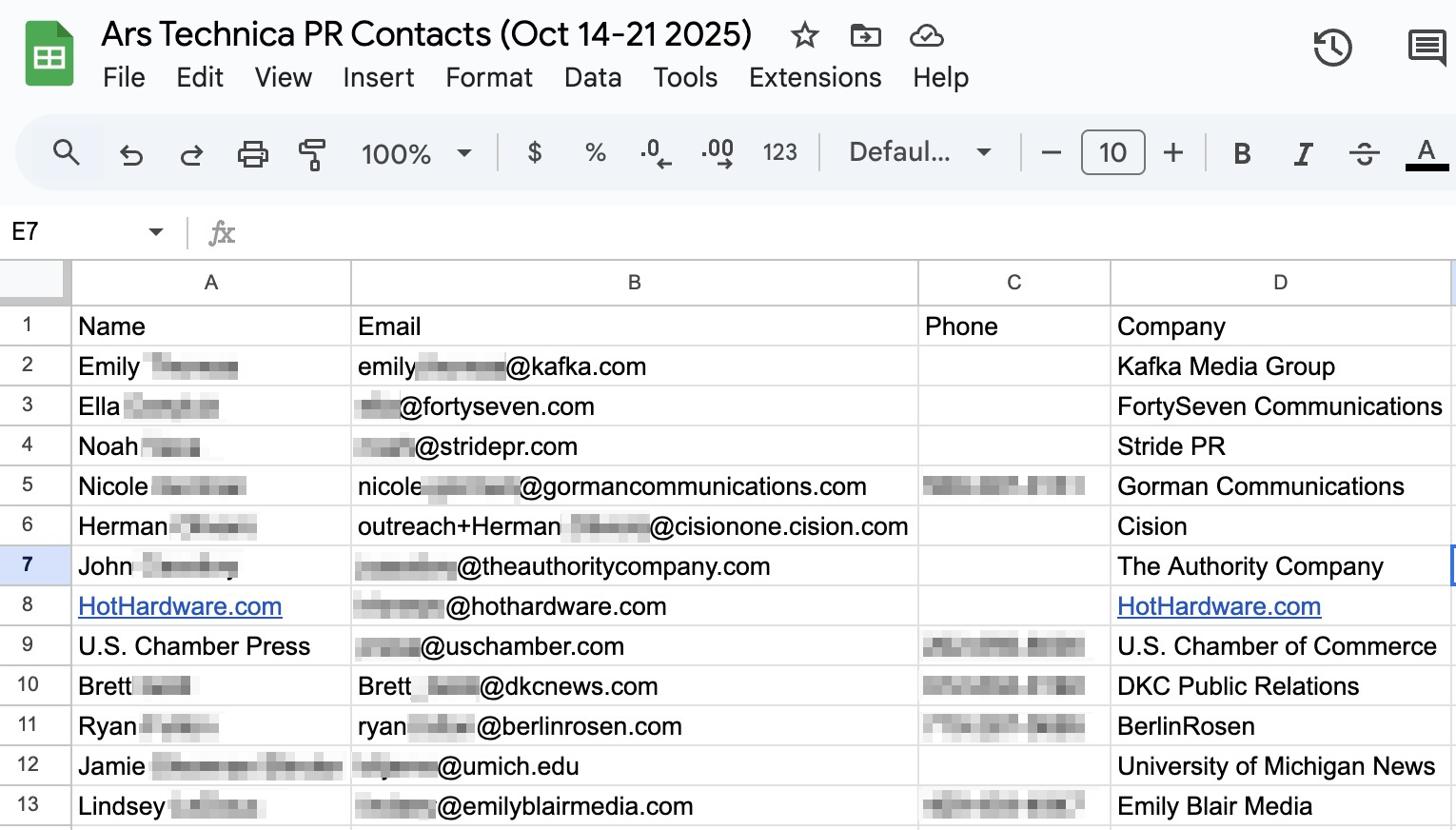

Using a database compiled by French lawyer and AI researcher Damien Charlotin, Ars reviewed 23 cases where lawyers were sanctioned for AI hallucinations. In many, judges noted that the simplest path to avoid or diminish sanctions was to admit that AI was used as soon as it’s detected, act humble, self-report the error to relevant legal associations, and voluntarily take classes on AI and law. But not every lawyer takes the path of least resistance, Ars’ review found, with many instead offering excuses that no judge found credible. Some even lie about their AI use, judges concluded.

Since 2023—when fake AI citations started being publicized—the most popular excuse has been that the lawyer didn’t know AI was used to draft a filing.

Sometimes that means arguing that you didn’t realize you were using AI, as in the case of a California lawyer who got stung by Google’s AI Overviews, which he claimed he took for typical Google search results. Most often, lawyers using this excuse tend to blame an underling, but clients have been blamed, too. A Texas lawyer this month was sanctioned after deflecting so much that the court had to eventually put his client on the stand after he revealed she played a significant role in drafting the aberrant filing.

“Is your client an attorney?” the court asked.

“No, not at all your Honor, just was essentially helping me with the theories of the case,” the lawyer said.

Another popular dodge comes from lawyers who feign ignorance that chatbots are prone to hallucinating facts.

Recent cases suggest this excuse may be mutating into variants. Last month, a sanctioned Oklahoma lawyer admitted that he didn’t expect ChatGPT to add new citations when all he asked the bot to do was “make his writing more persuasive.” And in September, a California lawyer got in a similar bind—and was sanctioned a whopping $10,000, a fine the judge called “conservative.” That lawyer had asked ChatGPT to “enhance” his briefs, “then ran the ‘enhanced’ briefs through other AI platforms to check for errors,” neglecting to ever read the “enhanced” briefs.

Neither of those tired old excuses holds much weight today, especially in courts that have drawn up guidance to address AI hallucinations. But rather than quickly acknowledge their missteps, as courts are begging lawyers to do, several lawyers appear to have gotten desperate. Ars found a number who blamed common tech issues for their fake case citations.

When in doubt, blame hackers?

For an extreme case, look to a New York City civil court, where a lawyer, Innocent Chinweze, first admitted to using Microsoft Copilot to draft an errant filing, then bizarrely pivoted to claim that the AI citations were due to malware found on his computer.

Chinweze said he had created a draft with correct citations but then got hacked, allowing bad actors “unauthorized remote access” to supposedly add the errors in his filing.

The judge was skeptical, describing the excuse as an “incredible and unsupported statement,” particularly since there was no evidence of the prior draft existing. Instead, Chinweze asked to bring in an expert to testify that the hack had occurred, requesting to pause the sanctions proceedings until after the court weighed the expert’s analysis.

The judge, Kimon C. Thermos, didn’t have to weigh this argument, however, because after the court broke for lunch, the lawyer once again “dramatically” changed his position.

“He no longer wished to adjourn for an expert to testify regarding malware or unauthorized access to his computer,” Thermos wrote in an order issuing sanctions. “He retreated” to “his original position that he used Copilot to aid in his research and didn’t realize that it could generate fake cases.”

Possibly more galling to Thermos than the lawyer’s weird malware argument, though, was a document that Chinweze filed on the day of his sanctions hearing. That document included multiple summaries preceded by this text, the judge noted:

Some case metadata and case summaries were written with the help of AI, which can produce inaccuracies. You should read the full case before relying on it for legal research purposes.

Thermos admonished Chinweze for continuing to use AI recklessly. He blasted the filing as “an incoherent document that is eighty-eight pages long, has no structure, contains the full text of most of the cases cited,” and “shows distinct indications that parts of the discussion/analysis of the cited cases were written by artificial intelligence.”

Ultimately, Thermos ordered Chinweze to pay $1,000, the most typical fine lawyers received in the cases Ars reviewed. The judge then took an extra non-monetary step to sanction Chinweze, referring the lawyer to a grievance committee, “given that his misconduct was substantial and seriously implicated his honesty, trustworthiness, and fitness to practice law.”

Ars could not immediately reach Chinweze for comment.

Toggling windows on a laptop is hard

In Alabama, an attorney named James A. Johnson made an “embarrassing mistake,” he said, primarily because toggling windows on a laptop is hard, US District Judge Terry F. Moorer noted in an October order on sanctions.

Johnson explained that he had accidentally used an AI tool that he didn’t realize could hallucinate. It happened while he was “at an out-of-state hospital attending to the care of a family member recovering from surgery.” He rushed to draft the filing, he said, because he got a notice that his client’s conference had suddenly been “moved up on the court’s schedule.”

“Under time pressure and difficult personal circumstance,” Johnson explained, he decided against using Fastcase, a research tool provided by the Alabama State Bar, to research the filing. Working on his laptop, he opted instead to use “a Microsoft Word plug-in called Ghostwriter Legal” because “it appeared automatically in the sidebar of Word while Fastcase required opening a separate browser to access through the Alabama State Bar website.”

To Johnson, it felt “tedious to toggle back and forth between programs on [his] laptop with the touchpad,” and that meant he “unfortunately fell victim to the allure of a new program that was open and available.”

Moorer seemed unimpressed by Johnson’s claim that he understood tools like ChatGPT were unreliable but didn’t expect the same from other AI legal tools—particularly since “information from Ghostwriter Legal made it clear that it used ChatGPT as its default AI program,” Moorer wrote.

The lawyer’s client was similarly horrified, deciding to drop Johnson on the spot, even though that risked “a significant delay of trial.” Moorer noted that Johnson seemed shaken by his client’s abrupt decision, evidenced by “his look of shock, dismay, and display of emotion.”

Moorer further noted that Johnson had been paid using public funds while seemingly letting AI do his homework. “The harm is not inconsequential as public funds for appointed counsel are not a bottomless well and are limited resource,” the judge wrote in justifying a more severe fine.

“It has become clear that basic reprimands and small fines are not sufficient to deter this type of misconduct because if it were, we would not be here,” Moorer concluded.

Ruling that Johnson’s reliance on AI was “tantamount to bad faith,” Moorer imposed a $5,000 fine. The judge also would have “considered potential disqualification, but that was rendered moot” since Johnson’s client had already dismissed him.

Asked for comment, Johnson told Ars that “the court made plainly erroneous findings of fact and the sanctions are on appeal.”

Plagued by login issues

As a lawyer in Georgia tells it, sometimes fake AI citations may be filed because a lawyer accidentally filed a rough draft instead of the final version.

Other lawyers claim they turn to AI as needed when they have trouble accessing legal tools like Westlaw or LexisNexis.

For example, in Iowa, a lawyer told an appeals court that she regretted relying on “secondary AI-driven research tools” after experiencing “login issues with her Westlaw subscription.” Although the court was “sympathetic to issues with technology, such as login issues,” the lawyer was sanctioned, primarily because she only admitted to using AI after the court ordered her to explain her mistakes. In her case, however, she got to choose between paying a minimal $150 fine or attending “two hours of legal ethics training particular to AI.”

Less sympathetic was a lawyer who got caught lying about the AI tool she blamed for inaccuracies, a Louisiana case suggested. In that case, a judge demanded to see the research history after a lawyer claimed that AI hallucinations came from “using Westlaw Precision, an AI-assisted research tool, rather than Westlaw’s standalone legal database.”

It turned out that the lawyer had outsourced the research, relying on a “currently suspended” lawyer’s AI citations, and had only “assumed” the lawyer’s mistakes were from Westlaw’s AI tool. It’s unclear what tool was actually used by the suspended lawyer, who likely lost access to a Westlaw login, but the judge ordered a $1,000 penalty after the lawyer who signed the filing “agreed that Westlaw did not generate the fabricated citations.”

Judge warned of “serial hallucinators”

Another lawyer, William T. Panichi in Illinois, has been sanctioned at least three times, Ars’ review found.

In response to his initial penalties ordered in July, he admitted to being tempted by AI while he was “between research software.”

In that case, the court was frustrated to find that the lawyer had contradicted himself, and it ordered more severe sanctions as a result.

Panichi “simultaneously admitted to using AI to generate the briefs, not doing any of his own independent research, and even that he ‘barely did any personal work [him]self on this appeal,’” the court order said, while also defending charging a higher fee—supposedly because this case “was out of the ordinary in terms of time spent” and his office “did some exceptional work” getting information.

The court deemed this AI misuse so bad that Panichi was ordered to disgorge a “payment of $6,925.62 that he received” in addition to a $1,000 penalty.

“If I’m lucky enough to be able to continue practicing before the appellate court, I’m not going to do it again,” Panichi told the court in July, just before getting hit with two more rounds of sanctions in August.

Panichi did not immediately respond to Ars’ request for comment.

When AI-generated hallucinations are found, penalties are often paid to the court, the other parties’ lawyers, or both, depending on whose time and resources were wasted fact-checking fake cases.

Lawyers seem more likely to argue against paying sanctions to the other parties’ attorneys, hoping to keep sanctions as low as possible. One lawyer even argued that “it only takes 7.6 seconds, not hours, to type citations into LexisNexis or Westlaw,” while seemingly neglecting the fact that she did not take those precious seconds to check her own citations.

The judge in the case, Nancy Miller, was clear that “such statements display an astounding lack of awareness of counsel’s obligations,” noting that “the responsibility for correcting erroneous and fake citations never shifts to opposing counsel or the court, even if they are the first to notice the errors.”

“The duty to mitigate the harms caused by such errors remains with the signor,” Miller said. “The sooner such errors are properly corrected, either by withdrawing or amending and supplementing the offending pleadings, the less time is wasted by everyone involved, and fewer costs are incurred.”

Texas US District Judge Marina Garcia Marmolejo agreed, explaining that even more time is wasted determining how other judges have responded to fake AI-generated citations.

“At one of the busiest court dockets in the nation, there are scant resources to spare ferreting out erroneous AI citations in the first place, let alone surveying the burgeoning caselaw on this subject,” she said.

At least one Florida court was “shocked, shocked” to find that a lawyer who had misused AI was refusing to pay what the other party’s attorneys said they were owed. The lawyer in that case, James Martin Paul, asked to pay less than a quarter of the fees and costs owed, arguing that Charlotin’s database showed he might otherwise owe penalties that “would be the largest sanctions paid out for the use of AI generative case law to date.”

But caving to Paul’s arguments “would only benefit serial hallucinators,” the Florida court found. Ultimately, Paul was sanctioned more than $85,000 for what the court said was “far more egregious” conduct than other offenders in the database, chastising him for “repeated, abusive, bad-faith conduct that cannot be recognized as legitimate legal practice and must be deterred.”

Paul did not immediately respond to Ars’ request for comment.

Michael B. Slade, a US bankruptcy judge in Illinois, seems to be done weighing excuses, calling on all lawyers to stop taking AI shortcuts that are burdening courts.

“At this point, to be blunt, any lawyer unaware that using generative AI platforms to do legal research is playing with fire is living in a cloud,” Slade wrote.
