Modder injects AI dialogue into 2002’s Animal Crossing using memory hack

But discovering the addresses was only half the problem. When you talk to a villager in Animal Crossing, the game normally displays dialogue instantly. Calling an AI model over the Internet takes several seconds. Willison examined the code and found Fonseca’s solution: a watch_dialogue() function that polls memory 10 times per second. When it detects a conversation starting, it immediately writes placeholder text: three dots with hidden pause commands between them, followed by a “Press A to continue” prompt.

“So the user gets a ‘press A to continue’ button and hopefully the LLM has finished by the time they press that button,” Willison noted in a Hacker News comment. While players watch dots appear and reach for the A button, the mod races to get a response from the AI model and translate it into the game’s dialog format.
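The stall-and-swap idea can be sketched in a few lines of Python. This is an illustration under stated assumptions, not Fonseca's code: `FakeGame` stands in for the emulator's memory, the `<Pause>` and `<PressA>` tags are made-up readable markup, and `generate_reply` is a hypothetical callable wrapping the slow model request. Only the name `watch_dialogue()` and the 10-polls-per-second rate come from the description above.

```python
import time
from concurrent.futures import ThreadPoolExecutor

POLL_INTERVAL = 0.1  # seconds between polls: 10 per second

# Hypothetical readable markup for "three dots with pauses, then Press A".
PLACEHOLDER = ".<Pause>.<Pause>.<PressA>"


class FakeGame:
    """Stand-in for emulator memory (assumption: the real mod talks to Dolphin)."""

    def __init__(self):
        self.dialogue_active = False
        self.writes = []  # every text written to the dialogue box, in order

    def write_text(self, text):
        self.writes.append(text)


def watch_dialogue(game, generate_reply, max_polls, sleep=time.sleep):
    """Poll for a new conversation, stall with a placeholder, then swap in the reply."""
    was_active = False
    pending = None
    with ThreadPoolExecutor(max_workers=1) as pool:
        for _ in range(max_polls):
            active = game.dialogue_active
            if active and not was_active:
                # A conversation just started: show the placeholder right away
                # and start the slow model call in the background.
                game.write_text(PLACEHOLDER)
                pending = pool.submit(generate_reply)
            if pending is not None and pending.done():
                game.write_text(pending.result())
                pending = None
            was_active = active
            sleep(POLL_INTERVAL)
        if pending is not None:
            # Polling budget ran out first: wait for the reply and write it.
            game.write_text(pending.result())
```

Running the model call on a background thread keeps the polling loop responsive, so the placeholder lands on the first poll that sees the conversation rather than after the network round trip.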

Learning the game’s secret language

Simply writing text to memory froze the game. Animal Crossing uses an encoded format with control codes that manage everything from text color to character emotions. A special prefix byte (0x7F) signals commands rather than characters. Without the proper end-of-conversation control code, the game waits forever.

“Think of it like HTML,” Fonseca explains. “Your browser doesn’t just display words; it interprets tags … to make text bold.” The decompilation community had documented these codes, allowing Fonseca to build encoder and decoder tools that translate between a human-readable format and the GameCube’s expected byte sequences.
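To make the HTML analogy concrete, here is a minimal encoder and decoder sketch. Only the 0x7F prefix byte comes from the article. The command numbers and tag names below are invented for illustration, the sketch assumes commands take no arguments, and it uses ASCII for ordinary characters, which may not match the game's real character table.

```python
import re

PREFIX = 0x7F  # marks the next byte as a command rather than a character

# Hypothetical command numbers, for illustration only. The real values are in
# the decompilation community's documentation.
COMMANDS = {"Pause": 0x01, "PressA": 0x02, "End": 0x03}
NAMES = {code: name for name, code in COMMANDS.items()}

TAG = re.compile(r"<(\w+)>")


def encode(markup: str) -> bytes:
    """Turn readable markup such as 'Hi<Pause>!' into prefixed command bytes."""
    # Always close the conversation: without an end code the game waits forever.
    if not markup.endswith("<End>"):
        markup += "<End>"
    out = bytearray()
    pos = 0
    for match in TAG.finditer(markup):
        out += markup[pos:match.start()].encode("ascii")  # plain text before the tag
        out += bytes([PREFIX, COMMANDS[match.group(1)]])  # the tag as two bytes
        pos = match.end()
    out += markup[pos:].encode("ascii")
    return bytes(out)


def decode(data: bytes) -> str:
    """Reverse of encode: rebuild readable markup from the byte sequence."""
    parts = []
    i = 0
    while i < len(data):
        if data[i] == PREFIX:
            parts.append(f"<{NAMES[data[i + 1]]}>")
            i += 2
        else:
            parts.append(chr(data[i]))
            i += 1
    return "".join(parts)
```

An unknown tag raises a `KeyError` in this sketch, which is preferable to silently writing bytes the game cannot interpret.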

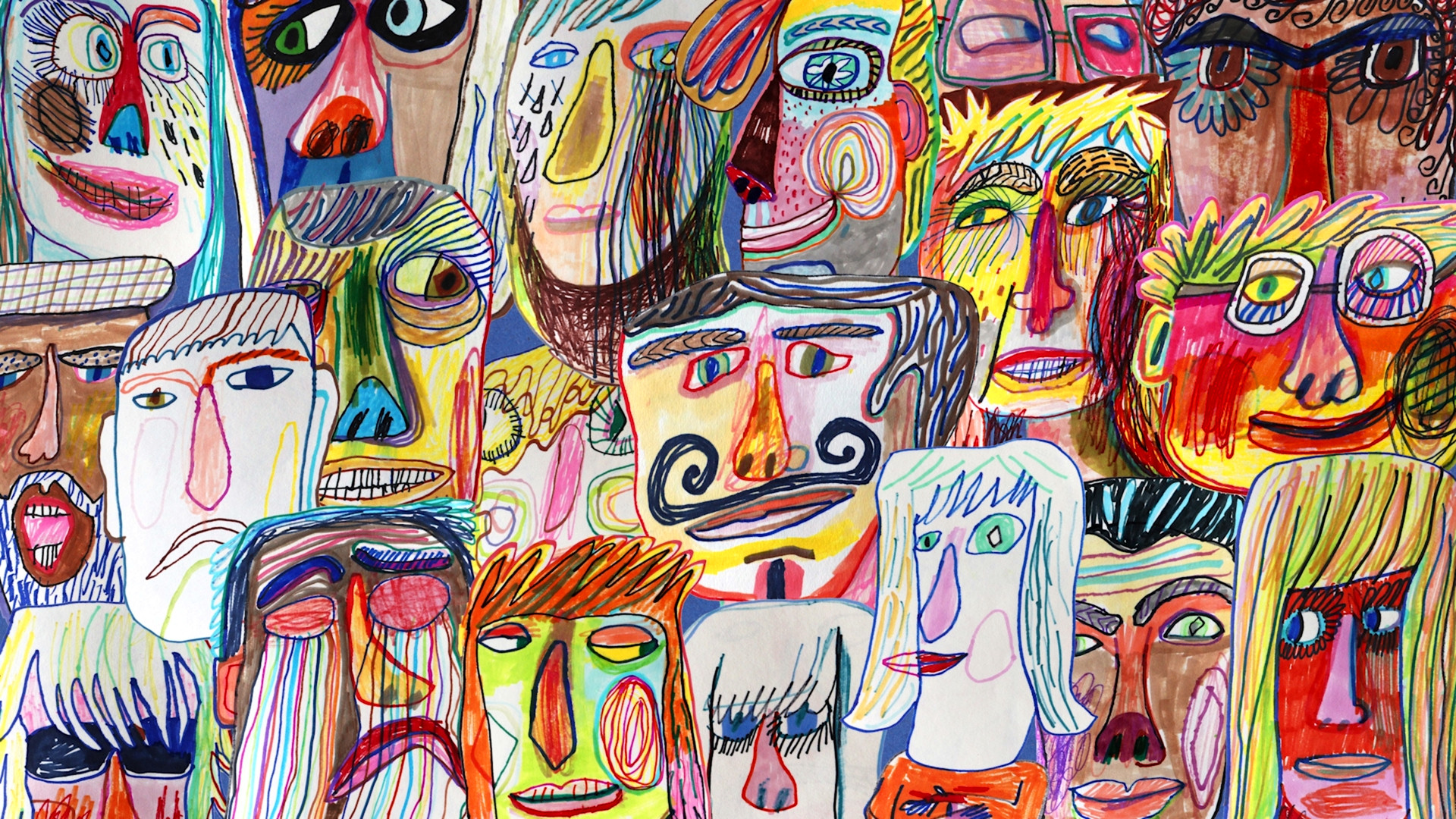

A screenshot of LLM-powered dialog injected into Animal Crossing for the GameCube. Credit: Joshua Fonseca

Initially, he tried using a single AI model to handle both creative writing and technical formatting. “The results were a mess,” he notes. “The AI was trying to be a creative writer and a technical programmer simultaneously and was bad at both.”

The solution: split the work between two models. A Writer AI creates dialogue using character sheets scraped from the Animal Crossing fan wiki. A Director AI then adds technical elements, including pauses, color changes, character expressions, and sound effects.
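The division of labor might look something like the following sketch. The function name, prompt wording, and the `Model` type are assumptions made for illustration. Each model is represented as a plain callable so that either a Gemini or an OpenAI client could be wrapped behind it. The actual prompts in the mod are not given in the article.

```python
from typing import Callable

Model = Callable[[str], str]  # hypothetical interface: prompt in, text out


def make_dialogue(villager: str, character_sheet: str,
                  writer: Model, director: Model) -> str:
    """Two-stage pipeline: the Writer drafts plain text, the Director adds tags."""
    # Stage 1: creative writing only, with no formatting instructions.
    writer_prompt = (
        f"You are {villager}. Character sheet:\n{character_sheet}\n"
        "Write one short line of dialogue in plain text."
    )
    plain_line = writer(writer_prompt)

    # Stage 2: technical markup only, leaving the words untouched.
    director_prompt = (
        "Add pause, color, expression, and sound-effect tags to this line "
        "without changing its words:\n" + plain_line
    )
    return director(director_prompt)
```

Keeping each prompt focused on a single job is the point of the split: the Writer never sees control codes, and the Director never has to invent dialogue.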

The code is available on GitHub, though Fonseca warns it contains known bugs and has only been tested on macOS. The mod requires Python 3.8+, API keys for either Google Gemini or OpenAI, and Dolphin emulator. Have fun sticking it to the man—or the raccoon, as the case may be.
