OpenAI adds GPT-4.1 to ChatGPT amid complaints over confusing model lineup

The release comes just two weeks after OpenAI made GPT-4 unavailable in ChatGPT on April 30. That earlier model, which launched in March 2023, once sparked widespread hype about AI capabilities. Compared to that hyperbolic launch, GPT-4.1’s rollout has been a fairly understated affair—probably because it’s tricky to convey the subtle differences between all of the available OpenAI models.

As if 4.1’s launch wasn’t confusing enough, the release also roughly coincides with OpenAI’s July 2025 deadline for retiring the GPT-4.5 Preview from the API, a model one AI expert called a “lemon.” Developers must migrate to other options, OpenAI says, although GPT-4.5 will remain available in ChatGPT for now.

A confusing addition to OpenAI’s model lineup

In February, OpenAI CEO Sam Altman acknowledged on X his company’s confusing AI model naming practices, writing, “We realize how complicated our model and product offerings have gotten.” He promised that a forthcoming “GPT-5” model would consolidate the o-series and GPT-series models into a unified branding structure. But the addition of GPT-4.1 to ChatGPT appears to contradict that simplification goal.

So, if you use ChatGPT, which model should you use? If you’re a developer using the models through the API, the consideration is more of a trade-off between capability, speed, and cost. But in ChatGPT, your choice might be limited more by personal taste in behavioral style and what you’d like to accomplish. Some of the “more capable” models have lower usage limits as well because they cost more for OpenAI to run.

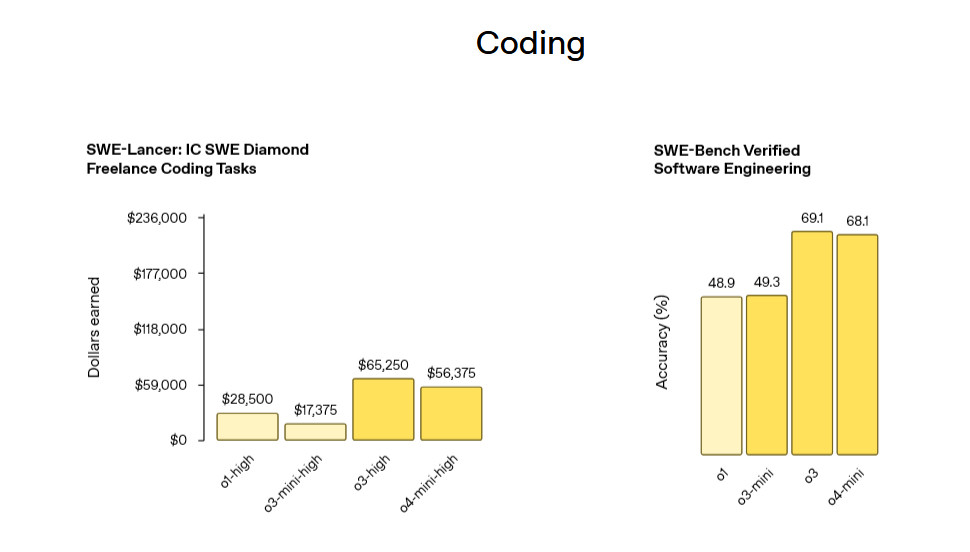

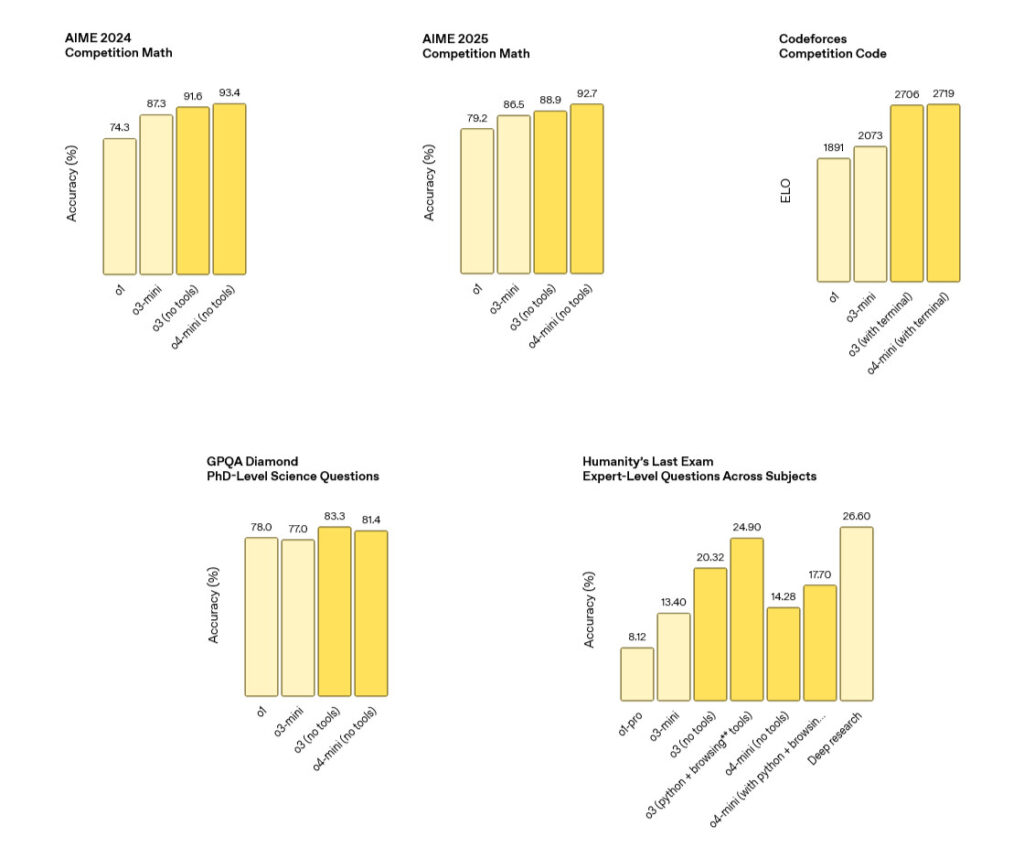

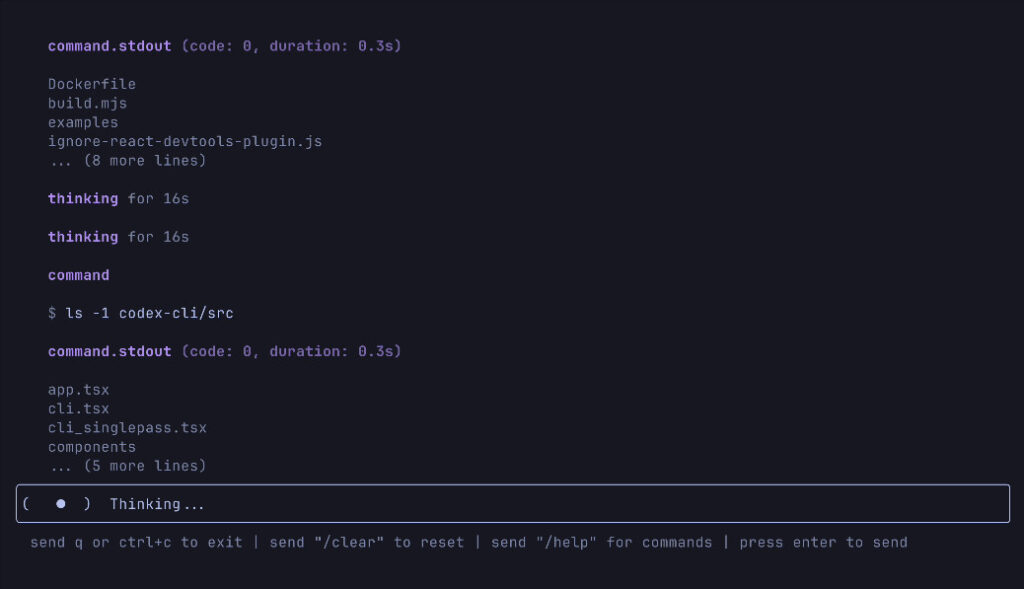

For now, OpenAI is keeping GPT-4o as the default ChatGPT model, likely due to its general versatility, balance between speed and capability, and personable style (conditioned using reinforcement learning and a specialized system prompt). The simulated reasoning models like o3 and o4-mini-high are slower to execute but can consider analytical-style problems more systematically and perform comprehensive web research that sometimes feels genuinely useful when it surfaces relevant (non-confabulated) web links. Compared to those, OpenAI is largely positioning GPT-4.1 as a speedier AI model for coding assistance.

Just remember that all of the AI models are prone to confabulations, meaning that they tend to make up authoritative-sounding information when they encounter gaps in their trained “knowledge.” So you’ll need to double-check all of the outputs with other sources of information if you’re hoping to use these AI models to assist with an important task.
