Nvidia’s 50-series cards drop support for PhysX, impacting older games

Nvidia’s PhysX offerings to developers didn’t always generate warm feelings. As part of its broader GameWorks package, PhysX was cited as one of the reasons The Witcher 3 ran at notably sub-optimal levels at launch. Protagonist Geralt’s hair, rendered in PhysX-powered HairWorks, was a burden on some chipsets.

PhysX started appearing in general game engines, like Unity 5, and was eventually open-sourced, first in limited computer and mobile form, then more broadly. As an application wrapped up in Nvidia’s 32-bit CUDA API and platform, the PhysX engine had a built-in shelf life. Now the expiration date is known, and it is conditional on buying into Nvidia’s 50-series video cards—whenever they approach reasonable human prices.

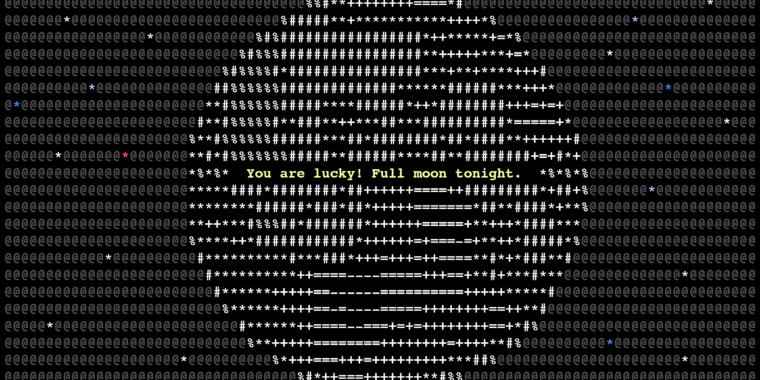

See that smoke? It’s from Sweden, originally. Credit: Gearbox/Take 2

The real dynamic particles were the friends we made…

Nvidia noted in mid-January that 32-bit applications cannot be developed or debugged on the latest versions of its CUDA toolkit. Those applications will still run on cards released before the 50 series. Technically, you could also keep an older card installed in your system for compatibility, which is real dedication to early-2010s-era particle physics.

Technically, a 64-bit game could still support PhysX on Nvidia’s newest GPUs, but the heyday of PhysX, as a stand-alone technology switched on in game settings, tended to coincide with the 32-bit computing era.

If you load up a 32-bit game now with PhysX enabled (or forced in a config file) and a 50-series Nvidia GPU installed, there’s a good chance the physics work will be passed to the CPU instead of the GPU, likely bottlenecking the game and steeply lowering frame rates. Of course, turning off PhysX entirely has always raised frame rates above even the levels seen with native GPU support.

Demanding Borderlands 2 keep using PhysX made it so it “runs terrible,” noted one Redditor, even if the dust clouds and flapping cloth strips looked interesting. Other games with PhysX baked in, as listed by ResetEra completists, include Metro 2033, Assassin’s Creed IV: Black Flag, and the 2013 Star Trek game.

Commenters on Reddit and ResetEra note that many of the games listed had performance issues with PhysX long before Nvidia forced the feature to be either turned off or run on the CPU. For some games, however, PhysX enabled destructible environments, “dynamic bank notes” and “posters” (in the Arkham games), fluid simulations, and base gameplay physics.

Anyone who works in, or cares about, game preservation has always had their work cut out for them. But it’s a particularly tough challenge to see certain aspects of a game’s operation lost to the forward march of the CUDA platform, something that’s harder to explain than a scratched CD or Windows compatibility.
