Platforms bend over backward to help DHS censor ICE critics, advocates say

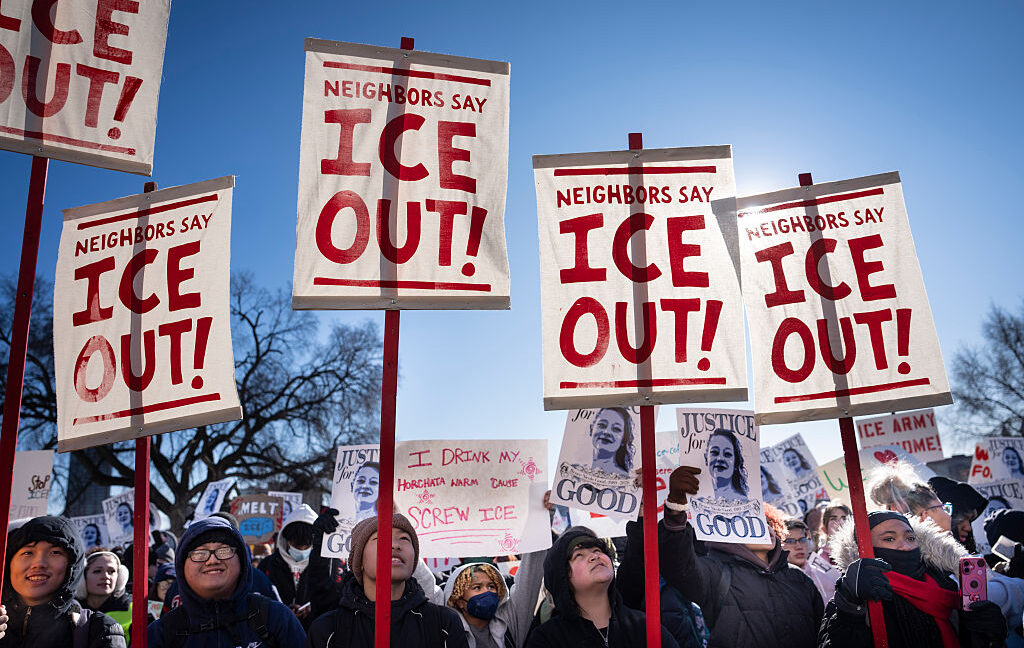

Pressure is mounting on tech companies to shield users from unlawful government requests that advocates say are making it harder to reliably share information about Immigration and Customs Enforcement (ICE) online.

Alleging that ICE officers are being doxed or otherwise endangered, Trump officials have spent the last year targeting an unknown number of users and platforms with demands to censor content. Early lawsuits show that platforms have caved, even though experts say they could refuse these demands without a court order.

In a lawsuit filed on Wednesday, the Foundation for Individual Rights and Expression (FIRE) accused Attorney General Pam Bondi and Department of Homeland Security Secretary Kristi Noem of coercing tech companies into removing a wide range of content “to control what the public can see, hear, or say about ICE operations.”

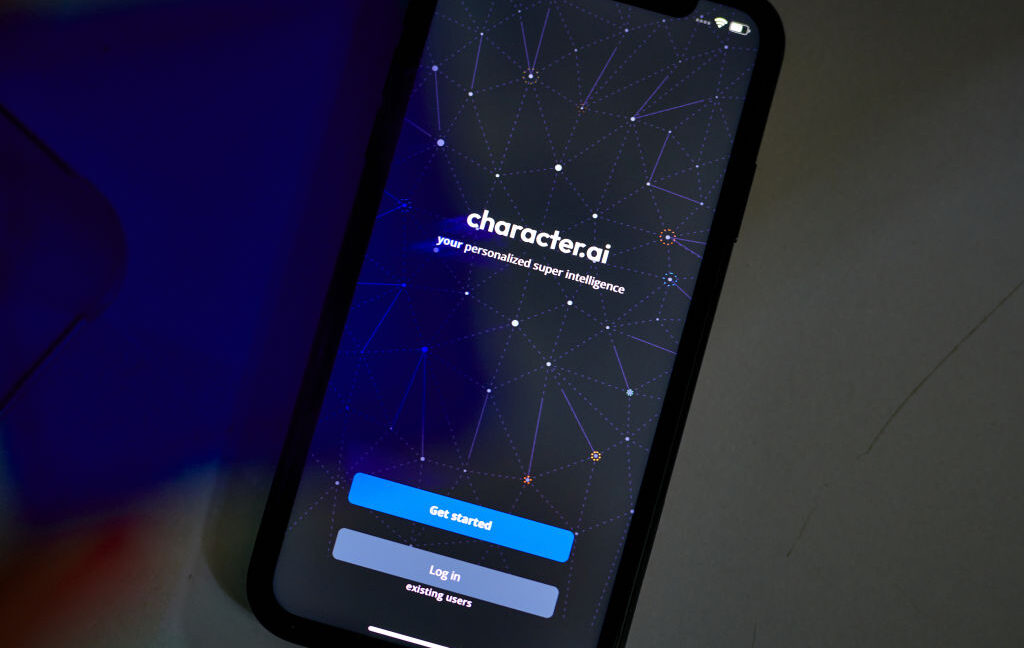

It’s the second lawsuit alleging that Bondi and DHS officials are using regulatory power to pressure private platforms to suppress speech protected by the First Amendment. It follows a complaint from the developer of an app called ICEBlock, which Apple removed from the App Store in October. Officials aren’t rushing to resolve that case—last month, they requested more time to respond—so it may remain unclear until March what defense they plan to offer for the takedown demands.

That leaves community members who monitor ICE in a precarious situation, as critical resources could disappear at the department’s request with no warning.

FIRE says people have legitimate reasons to share information about ICE. Some communities focus on helping people avoid dangerous ICE activity, while others aim to hold the government accountable and raise public awareness of how ICE operates. Unless there’s proof of incitement to violence or a true threat, such expression is protected.

Despite the high bar for censoring online speech, lawsuits trace an escalating pattern of DHS targeting websites, app stores, and platforms, many of which have been willing to remove content the government dislikes.

Officials have ordered ICE-monitoring apps to be removed from app stores and even threatened to sanction CNN for simply reporting on the existence of one such app. Officials have also demanded that Meta delete at least one Chicago-based Facebook group with 100,000 members and made multiple unsuccessful attempts to unmask anonymous users behind other Facebook groups. Even encrypted apps like Signal don’t feel safe from officials’ seeming overreach. FBI Director Kash Patel recently said he has opened an investigation into Signal chats used by Minnesota residents to track ICE activity, NBC News reported.

As DHS censorship threats increase, platforms have done little to shield users, advocates say. Not only have they sometimes failed to reject unlawful orders that simply provided “a bare mention of ‘officer safety/doxing’” as justification, but in one case, Google complied with a subpoena that left a critical section blank, the Electronic Frontier Foundation (EFF) reported.

For users, it’s increasingly difficult to trust that platforms won’t betray their own policies when faced with government intimidation, advocates say. Sometimes platforms notify users before complying with government requests, giving users a chance to challenge potentially unconstitutional demands. But in other cases, users learn about the requests only as platforms comply with them—even when those platforms have promised that would never happen.

Government emails with platforms may be exposed

Platforms could face backlash from users if lawsuits expose their communications with the government, a possibility in the coming months. Last fall, the EFF sued after DOJ, DHS, ICE, and Customs and Border Protection failed to respond to Freedom of Information Act requests seeking emails between the government and platforms about takedown demands. Other lawsuits may surface emails in discovery. In the coming weeks, a judge will set a schedule for EFF’s litigation.

“The nature and content of the Defendants’ communications with these technology companies” is “critical for determining whether they crossed the line from governmental cajoling to unconstitutional coercion,” EFF’s complaint said.

EFF Senior Staff Attorney Mario Trujillo told Ars that the EFF is confident it can win the fight to expose government demands, but like most FOIA lawsuits, the case is expected to move slowly. That’s unfortunate, he said, because ICE activity is escalating, and delays in addressing these concerns could irreparably harm speech at a pivotal moment.

Like users, platforms are seemingly victims, too, FIRE senior attorney Colin McDonnell told Ars.

They’ve been forced to override their own editorial judgment while navigating implicit threats from the government, he said.

“If Attorney General Bondi demands that they remove speech, the platform is going to feel like they have to comply; they don’t have a choice,” McDonnell said.

But platforms do have a choice and could be doing more to protect users, the EFF has said. Platforms could even serve as a first line of defense, requiring officials to get a court order before complying with any requests.

Platforms may now have good reason to push back against government requests and to give users the tools to do the same. Trujillo noted that while courts have been slow to address the ICEBlock removal and FOIA lawsuits, the government withdrew requests to unmask Facebook users soon after litigation began.

“That’s like an acknowledgement that the Trump administration, when actually challenged in court, wasn’t even willing to defend itself,” Trujillo said.

Platforms could view that as evidence that government pressure only works when platforms fail to put up a bare-minimum fight, Trujillo said.

Platforms “bend over backward” to appease DHS

An open letter from the EFF and the American Civil Liberties Union (ACLU) documented two instances of tech companies complying with government demands without first notifying users.

The letter called out Meta for unmasking at least one user without prior notice, which the groups noted “potentially” occurred due to a “technical glitch.”

More troubling than buggy notifications, however, is the possibility that platforms may be routinely delaying notice until it’s too late.

After Google “received an ICE subpoena for user data and fulfilled it on the same day that it notified the user,” the company admitted that “sometimes when Google misses its response deadline, it complies with the subpoena and provides notice to a user at the same time to minimize the delay for an overdue production,” the letter said.

“This is a worrying admission that violates [Google’s] clear promise to users, especially because there is no legal consequence to missing the government’s response deadline,” the letter said.

Platforms face no sanctions for refusing to comply with government demands that have not been court-ordered, the letter noted. That’s why the EFF and ACLU have urged companies to use their “immense resources” to shield users who may not be able to drop everything and fight unconstitutional data requests.

In their letter, the groups asked companies to insist on court intervention before complying with a DHS subpoena. They should also resist DHS “gag orders” that ask platforms to hand over data without notifying users.

Instead, they should commit to giving users “as much notice as possible when they are the target of a subpoena,” as well as a copy of the subpoena. Ideally, platforms would also link users to legal aid resources and take up legal fights on behalf of vulnerable users, advocates suggested.

That’s not what’s happening so far. Trujillo told Ars that it feels like “companies have bent over backward to appease the Trump administration.”

The tide could turn this year if courts side with app makers behind crowdsourcing apps like ICEBlock and Eyes Up, who are suing to end the alleged government coercion. FIRE’s McDonnell, who represents the creator of Eyes Up, told Ars that platforms may feel more comfortable exercising their own editorial judgment moving forward if a court declares they were coerced into removing content.

DHS can’t use doxing to dodge First Amendment

FIRE’s lawsuit accuses Bondi and Noem of coercing Meta to disable a Facebook group with 100,000 members called “ICE Sightings–Chicagoland.”

The popularity of that group surged during “Operation Midway Blitz,” when hundreds of agents arrested more than 4,500 people over weeks of raids that used tear gas in neighborhoods and caused car crashes and other violence. Arrests included US citizens and immigrants of lawful status, which “gave Chicagoans reason to fear being injured or arrested due to their proximity to ICE raids, no matter their immigration status,” FIRE’s complaint said.

Kassandra Rosado, a lifelong Chicagoan and US citizen of Mexican descent, started the Facebook group and served as admin, moderating content with other volunteers. She prohibited “hate speech or bullying” and “instructed group members not to post anything threatening, hateful, or that promoted violence or illegal conduct.”

Facebook only ever flagged five posts that supposedly violated community guidelines, but in warnings, the company reassured Rosado that “groups aren’t penalized when members or visitors break the rules without admin approval.”

Rosado had no reason to suspect that her group was in danger of removal. When Facebook disabled her group, it told Rosado the group violated community standards “multiple times.” But her complaint noted that, confusingly, “Facebook policies don’t provide for disabling groups if a few members post ostensibly prohibited content; they call for removing groups when the group moderator repeatedly either creates prohibited content or affirmatively ‘approves’ such content.”

Facebook’s decision came after a right-wing influencer, Laura Loomer, tagged Noem and Bondi in a social media post alleging that the group was “getting people killed.” Within two days, Bondi bragged that she had gotten the group disabled while claiming that it “was being used to dox and target [ICE] agents in Chicago.”

McDonnell told Ars it seems clear that Bondi selectively uses the term “doxing” when people post images from ICE arrests. He pointed to “ICE’s own social media accounts,” which share favorable opinions of ICE alongside videos and photos of ICE arrests that Bondi doesn’t consider doxing.

“Rosado’s creation of Facebook groups to send and receive information about where and how ICE carries out its duties in public, to share photographs and videos of ICE carrying out its duties in public, and to exchange opinions about and criticism of ICE’s tactics in carrying out its duties, is speech protected by the First Amendment,” FIRE argued.

The same goes for speech managed by Mark Hodges, a US citizen who resides in Indiana. He created an app called Eyes Up to serve as an archive of ICE videos. Apple removed Eyes Up from the App Store around the same time that it removed ICEBlock.

“It is just videos of what government employees did in public carrying out their duties,” McDonnell said. “It’s nothing even close to threatening or doxing or any of these other theories that the government has used to justify suppressing speech.”

Bondi bragged that she had gotten ICEBlock banned, and FIRE’s complaint confirmed that Hodges’ company received the same notification that ICEBlock’s developer got after Bondi’s victory lap. The notice said that Apple received “information” from “law enforcement” claiming that the apps had violated Apple guidelines against “defamatory, discriminatory, or mean-spirited content.”

Apple did not reach the same conclusion when it independently reviewed Eyes Up prior to government meddling, FIRE’s complaint said. Notably, the app remains available in Google Play, and Rosado now manages a new Facebook group with similar content but somewhat tighter restrictions on who can join. Neither activity has required urgent intervention from either tech giants or the government.

McDonnell told Ars that it’s harmful for DHS to water down the meaning of doxing when pushing platforms to remove content critical of ICE.

“When most of us hear the word ‘doxing,’ we think of something that’s threatening, posting private information along with home addresses or places of work,” McDonnell said. “And it seems like the government is expanding that definition to encompass just sharing, even if there’s no threats, nothing violent. Just sharing information about what our government is doing.”

Expanding the definition and then using that term to justify suppressing speech is concerning, he said, especially since the First Amendment includes no exception for “doxing,” even if DHS ever were to provide evidence of it.

To suppress speech, officials must show that groups are inciting violence or making true threats. FIRE has alleged that the government has not met “the extraordinary justifications required for a prior restraint” on speech and is instead using vague doxing threats to discriminate against speech based on viewpoint. The plaintiffs are seeking a permanent injunction barring officials from coercing tech companies into censoring ICE posts.

If plaintiffs win, the censorship threats could subside, and tech companies may feel safe reinstating apps and Facebook groups, advocates told Ars. That could potentially revive archives documenting thousands of ICE incidents and reconnect webs of ICE watchers who lost access to valued feeds.

Until courts possibly end threats of censorship, the most cautious community members are moving local ICE-watch efforts to group chats and listservs that are harder for the government to disrupt, Trujillo told Ars.
