Nintendo raises planned Switch 2 accessory prices amid tariff “uncertainty”

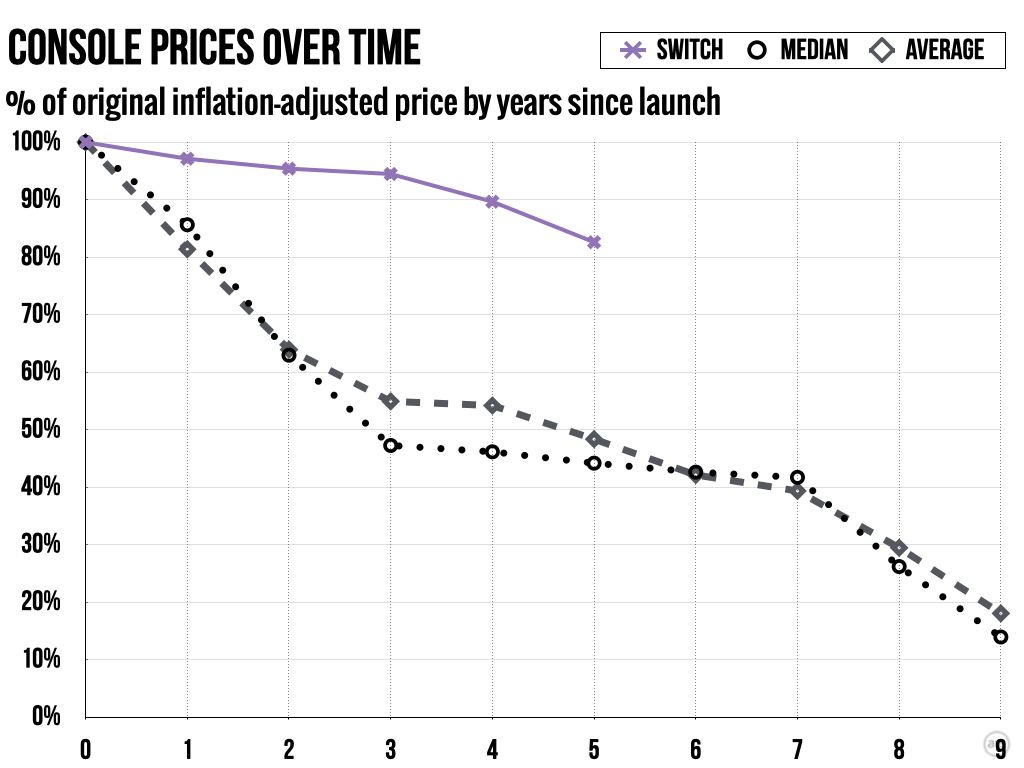

The Switch 2 hardware will still retail for its initially announced $449.99, alongside a $499.99 bundle that includes a digital download of Mario Kart World. Nintendo revealed Thursday, though, that the Mario Kart bundle will only be produced “through Fall 2025” and will only be available “while supplies last.” Mario Kart World will retail for $79.99 on its own, while Donkey Kong Bananza will launch in July at a $69.99 MSRP.

Most industry analysts expected Nintendo to hold the price of the Switch 2 hardware steady, even as Trump’s wide-ranging tariffs threatened to raise the company’s costs for systems built in China and Vietnam. “I believe it is now too late for Nintendo to drive up the price further, if that ever was an option in the first place,” Kantan Games’ Serkan Toto told GamesIndustry.biz. “As far as tariffs go, Nintendo was looking at a black box all the way until April 2, just like everybody else. As a hardware manufacturer, Nintendo most likely ran simulations to get to a price that would make them tariff-proof as much as possible.”

But that pricing calculus might not hold forever. “If the tariffs persist, I think a price increase in 2026 might be on the table,” Ampere Analysis’ Piers Harding-Rolls told GameSpot. “Nintendo will be treading very carefully considering the importance of the US market.”

Since the Switch 2 launch details were announced earlier this month, Nintendo’s official promotional livestreams have been inundated with messages begging the company to “DROP THE PRICE.”

Nintendo raises planned Switch 2 accessory prices amid tariff “uncertainty” Read More »