Adobe to automatically move subscribers to pricier, AI-focused tier in June

Subscribers to Adobe’s multi-app subscription plan, Creative Cloud All Apps, will be charged more starting on June 17 to accommodate new generative AI features.

Adobe’s announcement, spotted by MakeUseOf, says the change will affect North American subscribers to the Creative Cloud All Apps plan, which Adobe is renaming Creative Cloud Pro. Starting on June 17, Adobe will automatically renew Creative Cloud All Apps subscribers into the Creative Cloud Pro subscription, which will be $70 per month for individuals who commit to an annual plan, up from $60 for Creative Cloud All Apps. Annual plans for students and teachers are moving from $35/month to $40/month, and annual teams pricing will go from $90/month to $100/month. Monthly (non-annual) subscriptions are also increasing, from $90/month to $105/month.

Further, as of June 17, Adobe will give single-app subscribers just 25 generative AI credits instead of the current 500, in an apparent attempt to push generative AI users toward more expensive subscriptions.

Current subscribers can opt to move down to a new multi-app plan called Creative Cloud Standard, which is $55/month for annual subscribers and $82.49/month for monthly subscribers. However, this tier limits access to mobile and web app features, and subscribers can’t use premium generative AI features.

Creative Cloud Standard won’t be available to new subscribers, meaning the only option for new customers who need access to many Adobe apps will be the new AI-heavy Creative Cloud Pro plan.

Adobe’s announcement explained the higher prices by saying that the subscription tier “includes all the core applications and new AI capabilities that power the way people create today, and its price reflects that innovation, as well as our ongoing commitment to deliver the future of creative tools.”

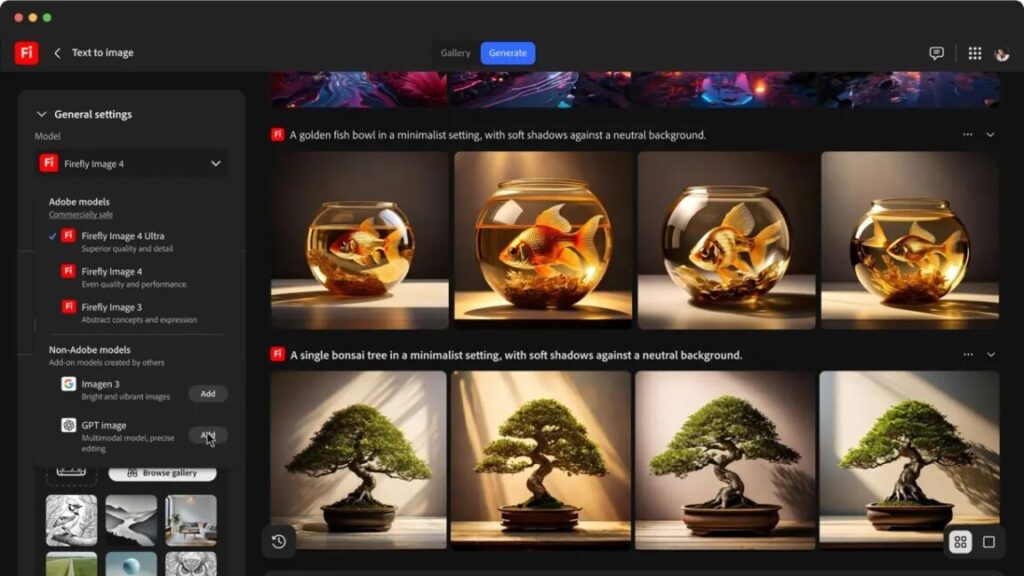

Like today’s Creative Cloud All Apps plan, Creative Cloud Pro will include Photoshop, Illustrator, Premiere Pro, Lightroom, and access to Adobe’s web and mobile apps. AI features include unlimited use of image and vector features in Adobe apps, including Generative Fill in Photoshop, Generative Remove in Lightroom, Generative Shape Fill in Illustrator, and 4K video generation with Generative Extend in Premiere Pro.
