Record scratch—Google’s Lyria 3 AI music model is coming to Gemini today

Sour notes

AI-generated music is not a new phenomenon. Several companies offer models that ingest and homogenize human-created music, and the resulting tracks can sound remarkably “real,” if a bit overproduced. Streaming services have already been inundated with phony AI artists, some of which have gathered thousands of listeners who may not even realize they’re grooving to the musical equivalent of a blender set to purée.

Still, you have to seek out tools like that, and Google is bringing similar capabilities to the Gemini app. Because Gemini is one of the most popular AI platforms, we’re probably about to see a lot more AI music on the Internet. Google says tracks generated with Lyria 3 will have an audio version of Google’s SynthID embedded within. That means you’ll always be able to check if a piece of audio was created with Google’s AI by uploading it to Gemini, similar to the way you can check images and videos for SynthID tags.

Google also says it has sought to create a music AI that respects copyright and partner agreements. If you name a specific artist in your prompt, Gemini won’t attempt to copy that artist’s sound. Instead, it’s trained to take that as “broad creative inspiration.” Google also notes that this process is not foolproof, though, and some generated tracks might imitate an artist too closely. In those cases, Google invites users to report the shared content.

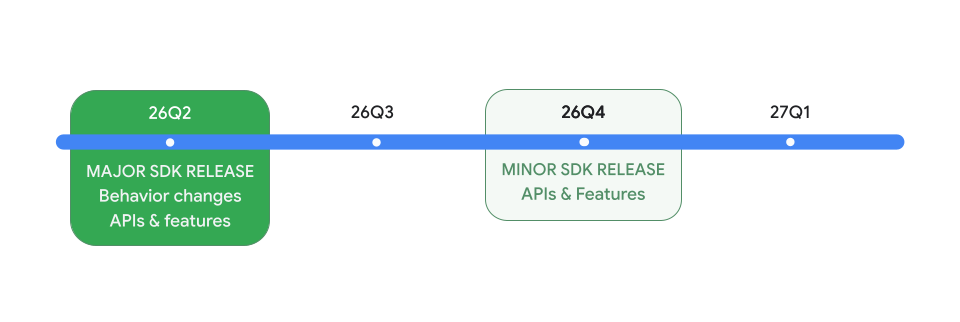

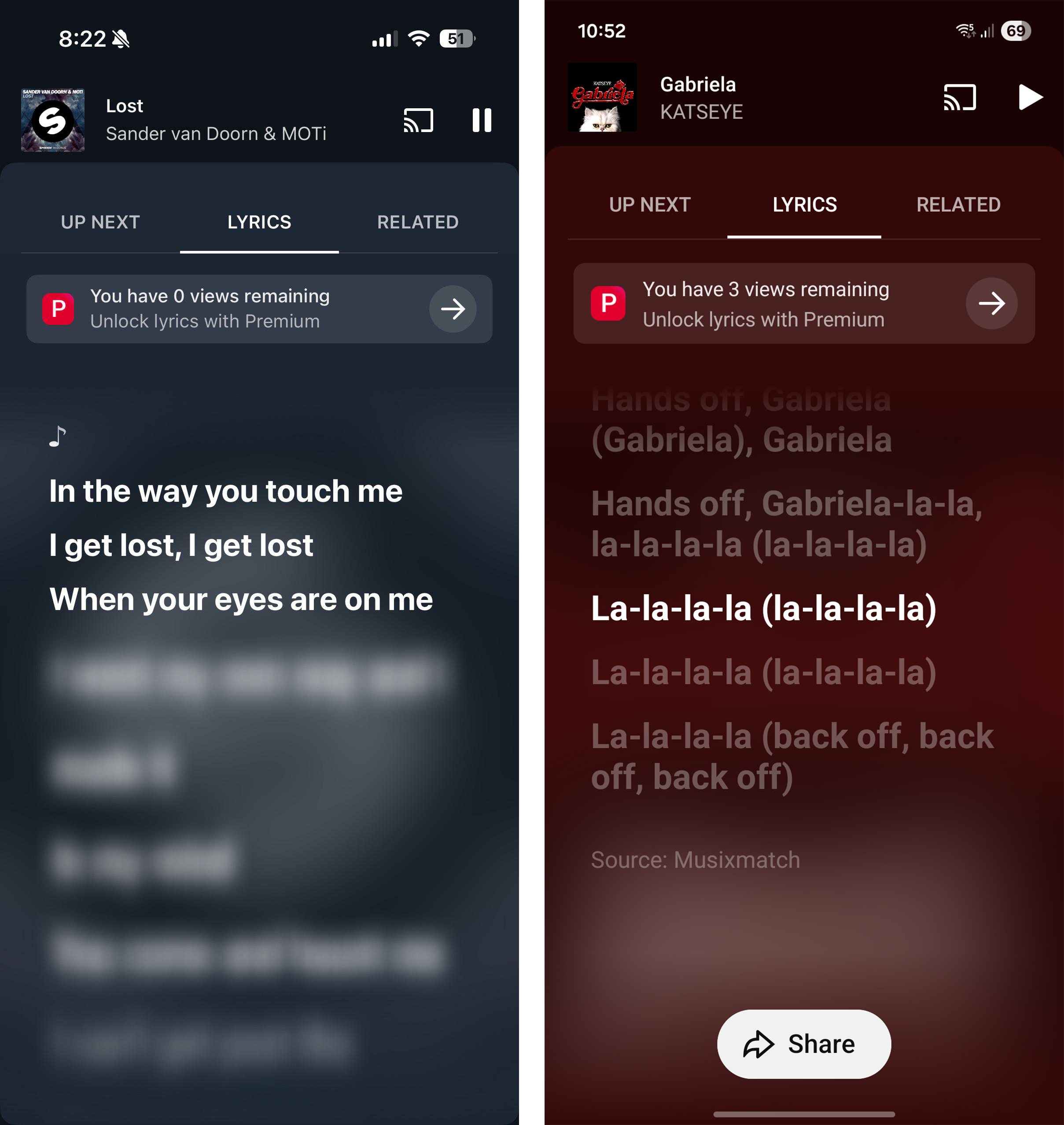

Lyria 3 is going live in the Gemini web interface today and should be available in the mobile app within a few days. It works in English, German, Spanish, French, Hindi, Japanese, Korean, and Portuguese, and Google plans to add more languages soon. While all users will have some access to music generation, those with AI Pro and AI Ultra subscriptions will have higher usage limits, though the specifics are unclear.
