OpenAI releases GPT-5.2 after “code red” Google threat alert

On Thursday, OpenAI released GPT-5.2, its newest family of AI models for ChatGPT, in three versions called Instant, Thinking, and Pro. The release follows CEO Sam Altman’s internal “code red” memo earlier this month, which directed company resources toward improving ChatGPT in response to competitive pressure from Google’s Gemini 3 AI model.

“We designed 5.2 to unlock even more economic value for people,” Fidji Simo, OpenAI’s CEO of Applications, said during a press briefing with journalists on Thursday. “It’s better at creating spreadsheets, building presentations, writing code, perceiving images, understanding long context, using tools and then linking complex, multi-step projects.”

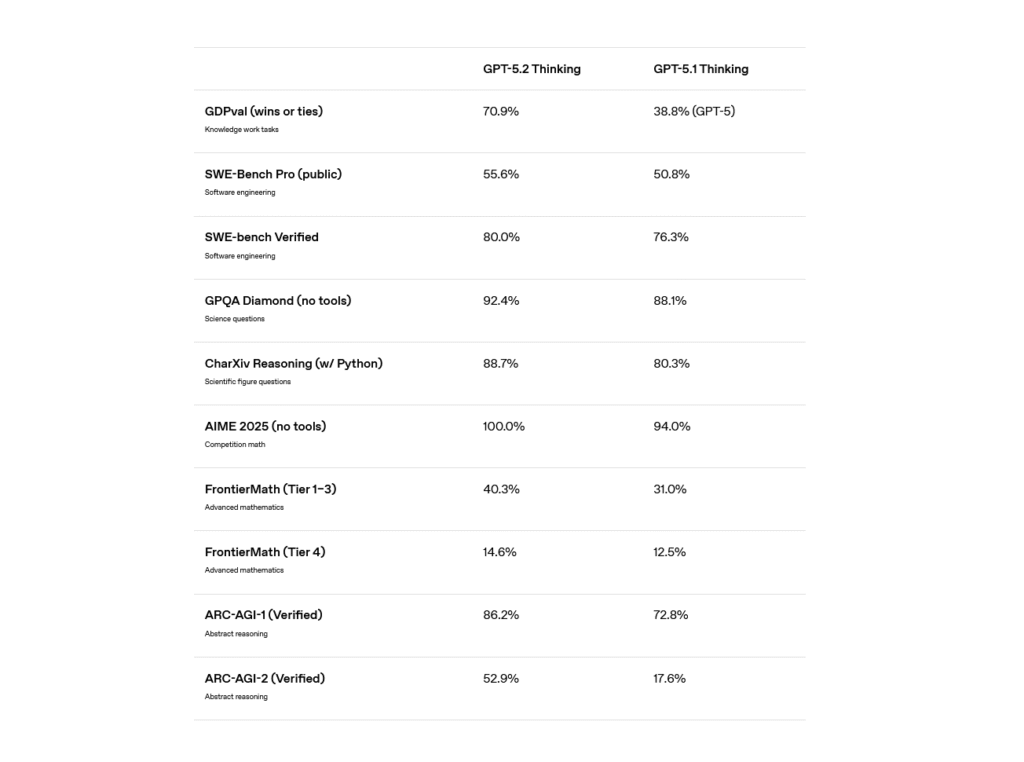

As with previous versions of GPT-5, the three model tiers serve different purposes: Instant handles faster tasks like writing and translation; Thinking spits out simulated reasoning “thinking” text in an attempt to tackle more complex work like coding and math; and Pro spits out even more simulated reasoning text with the goal of delivering the highest-accuracy performance for difficult problems.

A chart of GPT-5.2 Thinking benchmark results comparing it to its predecessor, taken from OpenAI’s website. Credit: OpenAI

GPT-5.2 features a 400,000-token context window, allowing it to process hundreds of documents at once, and a knowledge cutoff date of August 31, 2025.

GPT-5.2 is rolling out to paid ChatGPT subscribers starting Thursday, with API access available to developers. Pricing in the API runs $1.75 per million input tokens for the standard model, a 40 percent increase over GPT-5.1. OpenAI says the older GPT-5.1 will remain available in ChatGPT for paid users for three months under a legacy models dropdown.
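To put those figures in perspective, here is a rough back-of-the-envelope sketch in Python. It uses only the numbers reported above (the $1.75 input price, the 40 percent increase, and the 400,000-token context window). The function names are made up for illustration, and the math covers input tokens only, since output pricing is not given here.

```python
# Figures reported in the article.
INPUT_PRICE_PER_MILLION = 1.75    # USD per million input tokens, standard model
INCREASE_OVER_PREVIOUS = 0.40     # 40 percent increase over GPT-5.1
CONTEXT_WINDOW_TOKENS = 400_000   # maximum context size in tokens


def input_cost(tokens: int, price_per_million: float = INPUT_PRICE_PER_MILLION) -> float:
    """Return the USD cost of sending `tokens` input tokens (hypothetical helper)."""
    return tokens / 1_000_000 * price_per_million


def implied_previous_price(current_price: float, increase: float) -> float:
    """Back out the earlier price implied by a fractional increase (hypothetical helper)."""
    return current_price / (1 + increase)


# Cost of filling the entire context window once, input side only.
full_context_cost = input_cost(CONTEXT_WINDOW_TOKENS)

# Input price for GPT-5.1 implied by the stated 40 percent increase.
previous_price = implied_previous_price(INPUT_PRICE_PER_MILLION, INCREASE_OVER_PREVIOUS)

print(f"One full 400,000-token prompt: ${full_context_cost:.2f}")
print(f"Implied GPT-5.1 input price: ${previous_price:.2f} per million tokens")
```

By this estimate, a single prompt that fills the whole context window costs well under a dollar in input fees, though real bills depend on output tokens and on any caching or batch discounts, none of which are modeled here.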

Playing catch-up with Google

The release follows a tricky month for OpenAI. In early December, Altman issued an internal “code red” directive after Google’s Gemini 3 model topped multiple AI benchmarks and gained market share. The memo called for delaying other initiatives, including advertising plans for ChatGPT, to focus on improving the chatbot’s core experience.

The stakes for OpenAI are substantial. The company has made commitments totaling $1.4 trillion for AI infrastructure buildouts over the next several years, bets it made when it had a more obvious technology lead among AI companies. Google’s Gemini app now has more than 650 million monthly active users, while OpenAI reports 800 million weekly active users for ChatGPT.
