Meet the 2025 Ig Nobel Prize winners

The annual award ceremony features miniature operas, scientific demos, and the 24/7 lectures.

The Ig Nobel Prizes honor “achievements that first make people laugh and then make them think.” Credit: Aurich Lawson / Getty Images

Does alcohol enhance one’s foreign language fluency? Do West African lizards have a preferred pizza topping? And can painting cows with zebra stripes help repel biting flies? These and other unusual research questions were honored tonight in a virtual ceremony to announce the 2025 recipients of the annual Ig Nobel Prizes. Yes, it’s that time of year again, when the serious and the silly converge—for science.

Established in 1991, the Ig Nobels are a good-natured parody of the Nobel Prizes; they honor “achievements that first make people laugh and then make them think.” The unapologetically campy awards ceremony features miniature operas, scientific demos, and the 24/7 lectures, in which experts must explain their work twice: first in 24 seconds and then in just seven words.

Acceptance speeches are limited to 60 seconds. And as the motto implies, the research being honored might seem ridiculous at first glance, but that doesn’t mean it’s devoid of scientific merit. In the weeks following the ceremony, the winners will also give free public talks, which will be posted on the Improbable Research website.

Without further ado, here are the winners of the 2025 Ig Nobel Prizes.

Biology

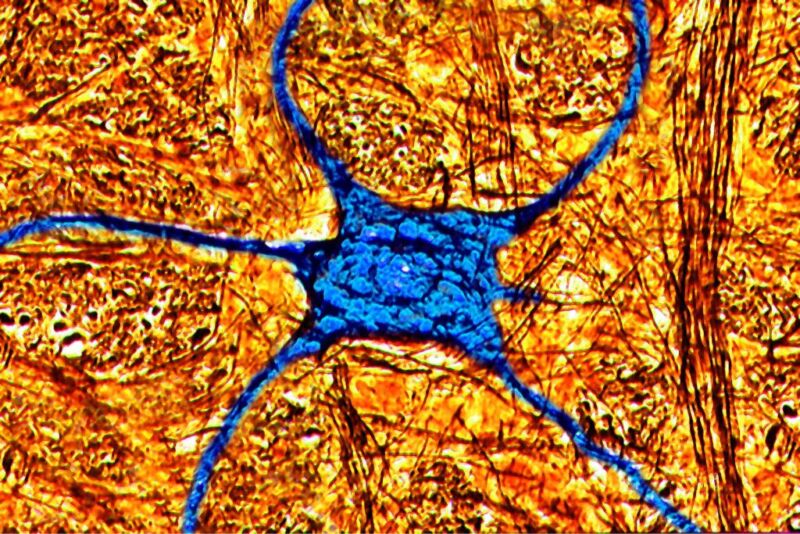

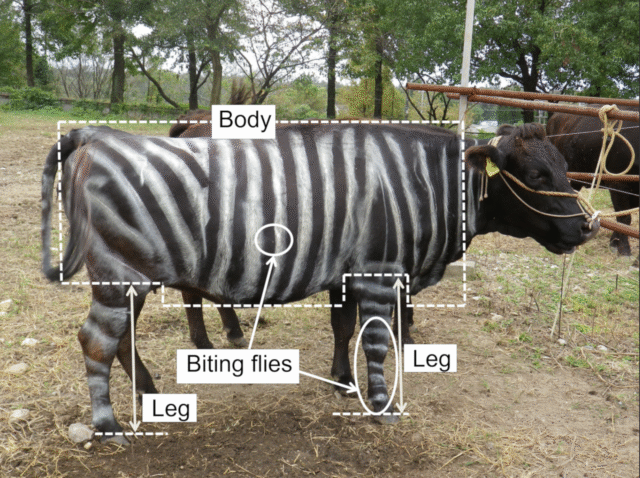

Credit: Tomoki Kojima et al., 2019

Citation: Tomoki Kojima, Kazato Oishi, Yasushi Matsubara, Yuki Uchiyama, Yoshihiko Fukushima, Naoto Aoki, Say Sato, Tatsuaki Masuda, Junichi Ueda, Hiroyuki Hirooka, and Katsutoshi Kino, for their experiments to learn whether cows painted with zebra-like striping can avoid being bitten by flies.

Any dairy farmer can tell you that biting flies are a pestilent scourge for cattle herds, which is why one so often sees cows throwing their heads, stamping their feet, flicking their tails, and twitching their skin in a desperate attempt to shake off the nasty creatures. There’s an economic cost as well, since the flies cause the cattle to graze and feed less, bed down for shorter times, and start bunching together, which increases heat stress and risks injury to the animals. The result is lower milk yields from dairy cows and lower beef yields from feedlot cattle.

You know who isn’t much bothered by biting flies? The zebra. Scientists have long debated the function of the zebra’s distinctive black-and-white striped pattern. Is it for camouflage? Confusing potential predators? Or is it to repel those pesky flies? Tomoki Kojima et al. decided to put that last hypothesis to the test, painting zebra stripes on six pregnant Japanese black cows at the Aichi Agricultural Research Center in Japan. They used water-borne lacquers that washed away after a few days, so the cows could take turns being in three different groups: zebra stripes, black stripes only, or no stripes (as a control).

The results: the zebra stripes significantly decreased both the number of biting flies on the cattle and the animals’ fly-repelling behaviors compared to cows with black stripes or no stripes. The one exception was skin twitching, perhaps because it is the least energy-intensive of those behaviors. Why does it work? The authors suggest it might have something to do with brightness modulation or polarized light confusing the insects’ motion detection system, which they use to control their approach when landing on a surface. But that’s a topic for further study.

Chemistry

Credit: Andrevan/CC BY-SA 2.5

Citation: Rotem Naftalovich, Daniel Naftalovich, and Frank Greenway, for experiments to test whether eating Teflon [a form of plastic more formally called “polytetrafluoroethylene”] is a good way to increase food volume and hence satiety without increasing calorie content.

Diet sodas and other zero-calorie drinks are a mainstay of the modern diet, thanks to the development of artificial sweeteners whose molecules can’t be metabolized by the human body. The authors of this paper are intrigued by the notion of zero-calorie foods, which they believe could be achieved by increasing the satisfying volume and mass of food without increasing the calories. And they have just the additive for that purpose: polytetrafluoroethylene (PTFE), more commonly known as Teflon.

Yes, the stuff they use on nonstick cookware. They insist that Teflon is inert, heat-resistant, impervious to stomach acid, tasteless, cost-effective, and available in handy powder form for easy mixing into food. They recommend a ratio of three parts food to one part Teflon powder.

The authors understand that to the average layperson, this is going to sound like a phenomenally bad idea—no thank you, I would prefer not to have powdered Teflon added to my food. So they spend many paragraphs citing all the scientific studies on the safety of Teflon—it didn’t hurt rats in feeding trials!—as well as the many applications for which it is already being used. These include Teflon-coated stirring rods used in labs and coatings on medical devices like bladder catheters and gynecological implants, as well as the catheters used for in vitro fertilization. And guys, you’ll be happy to know that Teflon doesn’t seem to affect sperm motility or viability. I suspect this will still be a hard sell in the consumer marketplace.

Physics

Credit: Simone Frau

Citation: Giacomo Bartolucci, Daniel Maria Busiello, Matteo Ciarchi, Alberto Corticelli, Ivan Di Terlizzi, Fabrizio Olmeda, Davide Revignas, and Vincenzo Maria Schimmenti, for discoveries about the physics of pasta sauce, especially the phase transition that can lead to clumping, which can be a cause of unpleasantness.

“Pasta alla cacio e pepe” is a simple dish: just tonnarelli pasta, pecorino cheese, and pepper. But its simplicity is deceptive. The dish is notoriously challenging to make because it’s so easy for the sauce to form unappetizing clumps, with a texture more akin to stringy mozzarella than to something smooth and creamy. As we reported in April, Italian physicists came to the rescue with a foolproof recipe based on their many scientific experiments, according to a paper published in the journal Physics of Fluids. The trick: using corn starch for the cheese and pepper sauce instead of relying on however much starch leaches into the boiling water as the pasta is cooked.

Traditionally, the chef will extract part of the water and starch solution—which is cooled to a suitable temperature to avoid clumping as the cheese proteins “denaturate”—and mix it with the cheese to make the sauce, adding the pepper last, right before serving. But the authors note that temperature is not the only factor that can lead to this dreaded “mozzarella phase.” If one tries to mix cheese and water without any starch, the clumping is more pronounced. There is less clumping with water containing a little starch, like water in which pasta has been cooked. And when one mixes the cheese with pasta water “risottata”—i.e., collected and heated in a pan so enough water evaporates that there is a higher concentration of starch—there is almost no clumping.

The authors found that the correct starch ratio is between 2 and 3 percent of the cheese weight. Below that, you get the clumping phase separation; above that, the sauce becomes stiff and unappetizing as it cools. Pasta water alone contains too little starch. Using pasta water “risottata” may concentrate the starch, but it gives the chef less control over the precise amount. So the authors recommend simply dissolving 4 grams of powdered potato or corn starch in 40 grams of water, heating it gently until it thickens, and combining that gel with the cheese. They also recommend toasting the black pepper briefly before adding it to the mixture to enhance its flavors and aromas.
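For readers who want to scale the recipe, the starch window is simple arithmetic. The Python sketch below is only an illustration: the function names are hypothetical and do not come from the paper, and the only numbers taken from the text above are the 2 and 3 percent bounds and the 4-gram starch figure.

```python
# Hypothetical helpers illustrating the 2-3 percent starch window.
# The bounds come from the article; everything else is an assumption.

LOW_PCT = 2   # minimum starch, as a percent of cheese weight
HIGH_PCT = 3  # maximum starch, as a percent of cheese weight


def starch_range_grams(cheese_grams: float) -> tuple[float, float]:
    """Return the (min, max) starch in grams for a given cheese weight."""
    return cheese_grams * LOW_PCT / 100, cheese_grams * HIGH_PCT / 100


def cheese_range_grams(starch_grams: float) -> tuple[float, float]:
    """Return the (min, max) cheese in grams that a starch amount suits."""
    # More cheese is allowed at the low percentage, less at the high one.
    return starch_grams * 100 / HIGH_PCT, starch_grams * 100 / LOW_PCT


if __name__ == "__main__":
    low, high = starch_range_grams(200)
    print(f"200 g of cheese calls for {low:.1f} to {high:.1f} g of starch")

    low, high = cheese_range_grams(4)
    print(f"4 g of starch suits {low:.0f} to {high:.0f} g of cheese")
```

By this arithmetic, the 4 grams of starch mentioned above sits inside the window for roughly 133 to 200 grams of cheese.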

Engineering Design

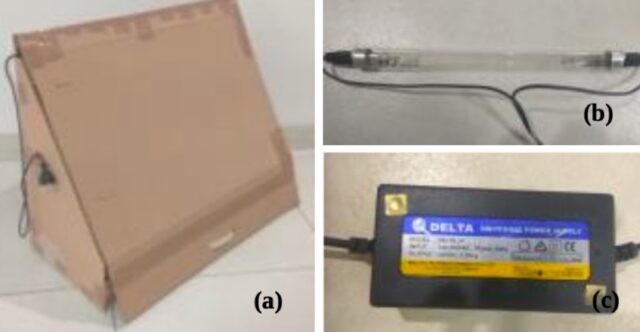

Credit: Vikash Kumar and Sarthak Mittal

Citation: Vikash Kumar and Sarthak Mittal, for analyzing, from an engineering design perspective, “how foul-smelling shoes affects the good experience of using a shoe-rack.”

Shoe odor is a universal problem, even in India, according to the authors of this paper, who hail from Shiv Nadar University (SNU) in Uttar Pradesh. All that heat and humidity means people perspire profusely when engaging in even moderate physical activity. Add in a lack of proper ventilation and washing, and shoes become a breeding ground for odor-causing bacteria such as Kytococcus sedentarius. Most Indians make use of shoe racks to store their footwear, and the odors can become quite intense in that closed environment.

Yet nobody has really studied the “smelly shoe” problem when it comes to shoe racks. Enter Kumar and Mittal, who conducted a pilot study with the help of 149 first-year SNU students. More than half reported feeling uncomfortable about their own or someone else’s smelly shoes, and 90 percent kept their shoes in a shoe rack. Common methods to combat the odor included washing the shoes and drying them in the sun, using spray deodorant, or sprinkling the shoes with an antibacterial powder. The students were unaware of many current odor-combating products on the market, such as tea tree and coconut oil solutions, thyme oil, or isopropyl alcohol.

Clearly, there is an opportunity to make a killing in the odor-resistant shoe rack market. So naturally Kumar and Mittal decided to design their own version. They opted to use bacteria-killing UV rays (via a UV-C tube light) as their built-in “odor eater,” testing their device on the shoes of several SNU athletes, “which had a very strong noticeable odor.” They concluded that an exposure time of two to three minutes was sufficient to kill the bacteria and get rid of the odor.

Aviation

Credit: Public domain

Citation: Francisco Sánchez, Mariana Melcón, Carmi Korine, and Berry Pinshow, for studying whether ingesting alcohol can impair bats’ ability to fly and also their ability to echolocate.

Nature is rife with naturally occurring ethanol, particularly from ripening fruit, and that fruit in turn is consumed by various microorganisms and animal species. There are rare instances of mammals, birds, and even insects consuming fruit rich in ethanol and becoming intoxicated, making those creatures more vulnerable to potential predators or more accident-prone due to lessened motor coordination. Sánchez et al. decided to look specifically at the effects of ethanol on Egyptian fruit bats, which have been shown to avoid high-ethanol fruit. The authors wondered if this might be because the bats wanted to avoid becoming inebriated.

They conducted their experiments on adult male fruit bats kept in an outdoor cage that served as a long flight corridor. The bats were given liquid food with varying amounts of ethanol and then released in the corridor, with the authors timing how long it took each bat to fly from one end to the other. A second experiment followed the same basic protocol, but this time the authors recorded the bats’ echolocation calls with an ultrasonic microphone. The results: The bats that received liquid food with the highest ethanol content took longer to fly the length of the corridor, evidence of impaired flight ability. The quality of those bats’ echolocation was also adversely affected, putting them at a higher risk of colliding with obstacles mid-flight.

Psychology

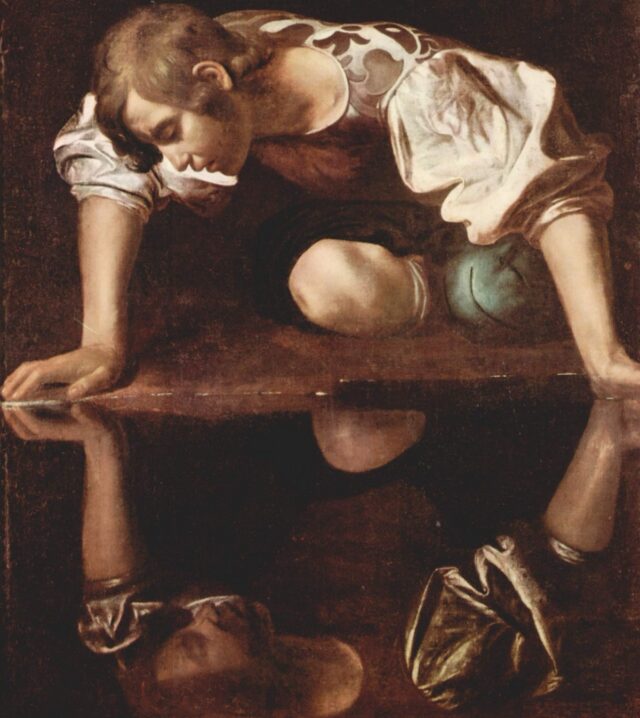

Credit: Public domain

Citation: Marcin Zajenkowski and Gilles Gignac, for investigating what happens when you tell narcissists—or anyone else—that they are intelligent.

Not all narcissists are created equal. There are vulnerable narcissists, who tend to be socially withdrawn, have low self-esteem, and are prone to negative emotions. And then there are grandiose narcissists, who exhibit social boldness and high self-esteem and are more likely to overestimate their own intelligence. The prevailing view is that this overconfidence stems from narcissism. The authors wanted to explore whether this effect might also work in reverse, i.e., that believing one has superior intelligence due to positive external feedback can lead to at least a temporary state of narcissism.

Zajenkowski and Gignac recruited 361 participants from Poland who were asked to rate their level of intelligence compared to other people, complete the Polish version of the Narcissistic Personality Inventory, and take an IQ test to compare their perceptions of their own intelligence with an objective measurement. The participants were then randomly assigned to one of two groups. One group received positive feedback, telling them they did indeed have a higher IQ than most people, while the other received negative feedback.

The results confirmed most of the researchers’ hypotheses. In general, participants gave lower estimates of their relative intelligence after completing the IQ test, which provided an objective check of sorts. But the type of feedback they received had a measurable impact. Positive feedback enhanced their feelings of uniqueness (a key aspect of grandiose narcissism). Those who received negative feedback rated their own intelligence as being lower, and that negative feedback had a larger effect than positive feedback. The authors concluded that external feedback helped shape the subjects’ perception of their own intelligence, regardless of the accuracy of that feedback.

Nutrition

Credit: Daniele Dendi et al., 2022

Citation: Daniele Dendi, Gabriel H. Segniagbeto, Roger Meek, and Luca Luiselli, for studying the extent to which a certain kind of lizard chooses to eat certain kinds of pizza.

Move over, Pizza Rat, here come the Pizza Lizards—rainbow lizards, to be precise. This is a species common to urban and suburban West Africa. The lizards primarily live off insects and arthropods, but their proximity to humans has led to some developing a more omnivorous approach to their foraging. Bread is a particular favorite. Case in point: One fine sunny day at a Togo seaside resort, the authors noticed a rainbow lizard stealing a tourist’s slice of four-cheese pizza and happily chowing down.

Naturally, they wanted to know if this was an isolated incident or whether the local rainbow lizards routinely feasted on pizza slices. And did the lizards have a preferred topping? Inquiring minds need to know. So they monitored the behavior of nine particular lizards, giving them the choice between a plate of four-cheese pizza and a plate of “four seasons” pizza, spaced about 10 meters apart.

It only took 15 minutes for the lizards to find the pizza and eat it, sometimes fighting over the remaining slices. But they only ate the four-cheese pizza. For the authors, this suggests there might be some form of chemical cues that attract them to the cheesy pizzas, or perhaps it’s easier for them to digest. I’d love to see how the lizards react to the widely derided Canadian bacon and pineapple pizza.

Pediatrics

Citation: Julie Mennella and Gary Beauchamp, for studying what a nursing baby experiences when the baby’s mother eats garlic.

Mennella and Beauchamp designed their experiment to investigate two questions: whether the consumption of garlic altered the odor of a mother’s breast milk, and if so, whether those changes affected the behavior of nursing infants. (Garlic was chosen because it is known to produce off flavors in dairy cow milk and affect human body odor.) They recruited eight women who were exclusively breastfeeding their infants, taking samples of their breast milk over a period when the participants abstained from eating sulfurous foods (garlic, onion, asparagus), and more samples after the mothers consumed either a garlic capsule or a placebo.

The results: Mothers who ingested the garlic capsules produced milk with a perceptibly more intense odor, as evaluated by several adult panelists brought in to sniff the breast milk samples. The strong odor peaked at two hours after ingestion and decreased thereafter, which is consistent with prior research on cows that ingested highly odorous feeds. As for the infants, those whose mothers ingested garlic stayed attached to the breast for longer periods and sucked more when the milk smelled like garlic. This could be relevant to ongoing efforts to determine whether sensory experiences during breastfeeding can influence how readily infants accept new foods upon weaning, and perhaps even their later food preferences.

Literature

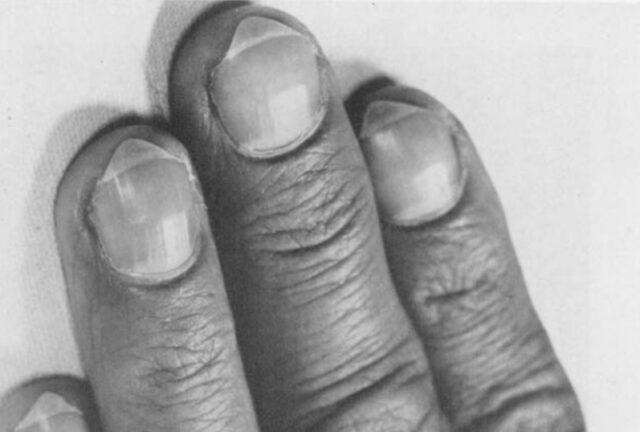

Credit: William B. Bean

Citation: The late Dr. William B. Bean, for persistently recording and analyzing the rate of growth of one of his fingernails over a period of 35 years.

If you’re surprised to see a study on fingernail growth rates under the Literature category, it will all make sense once you read the flowery prose stylings of Dr. Bean. He really did keep detailed records of how fast his fingernails grew for 35 years, claiming in his final report that “the nail provides a slowly moving keratin kymograph that measures age on the inexorable abscissa of time.” He sprinkles his observations with ponderous references to medieval astrology, James Boswell, and Moby Dick, with a dash of curmudgeonly asides bemoaning the sterile modern medical teaching methods that permeate “the teeming mass of hope and pain, technical virtuosity, and depersonalization called a ‘health center.’”

So what did our pedantic doctor discover in those 35 years, not just studying his own nails, but meticulously reviewing all the available scientific literature? Well, for starters, the rate of fingernail growth diminishes as one ages; Bean noted that his growth rates remained steady early on, but “slowed down a trifle” over the last five years of his project. Nails grow faster in children than adults. A warm environment can also accelerate growth, as does biting one’s fingernails—perhaps, he suggests, because the biting stimulates blood flow to the area. And he debunks the folklore of hair and nails growing even after death: it’s just the retraction and contraction of the skin post-mortem that makes it seem like the nails are growing.

Peace

Citation: Fritz Renner, Inge Kersbergen, Matt Field, and Jessica Werthmann, for showing that drinking alcohol sometimes improves a person’s ability to speak in a foreign language.

Alcohol is well-known to have detrimental effects on what’s known in psychological circles as “executive functioning,” impacting things like working memory and inhibitory control. But there’s a widespread belief among bilingual people that a little bit of alcohol actually improves one’s fluency in a foreign language, which also relies on executive functioning. So wouldn’t being intoxicated actually have an adverse effect on foreign language fluency? Renner et al. decided to investigate further.

They recruited 50 native German-speaking undergrad psychology students at Maastricht University in the Netherlands who were also fluent in Dutch. They were randomly divided into two groups. One group received an alcoholic drink (vodka with bitter lemon), and the other received water. Those in the alcohol group consumed enough to be slightly intoxicated after 15 minutes. Every participant then engaged in a discussion in Dutch with a native Dutch speaker. Afterward, they were asked to rate their own skill at Dutch, with the Dutch speakers offering independent observer ratings.

The researchers were surprised to find that intoxication improved the participants’ Dutch fluency, based on the independent observer reports. (Self-evaluations were largely unaffected by intoxication levels.) One can’t simply attribute this to so-called “Dutch courage,” i.e., increased confidence associated with intoxication. Rather, the authors suggest that intoxication lowers language anxiety, thereby increasing one’s foreign language proficiency, although further research would be needed to support that hypothesis.

Jennifer is a senior writer at Ars Technica with a particular focus on where science meets culture, covering everything from physics and related interdisciplinary topics to her favorite films and TV series. Jennifer lives in Baltimore with her spouse, physicist Sean M. Carroll, and their two cats, Ariel and Caliban.
