eBay bans illicit automated shopping amid rapid rise of AI agents

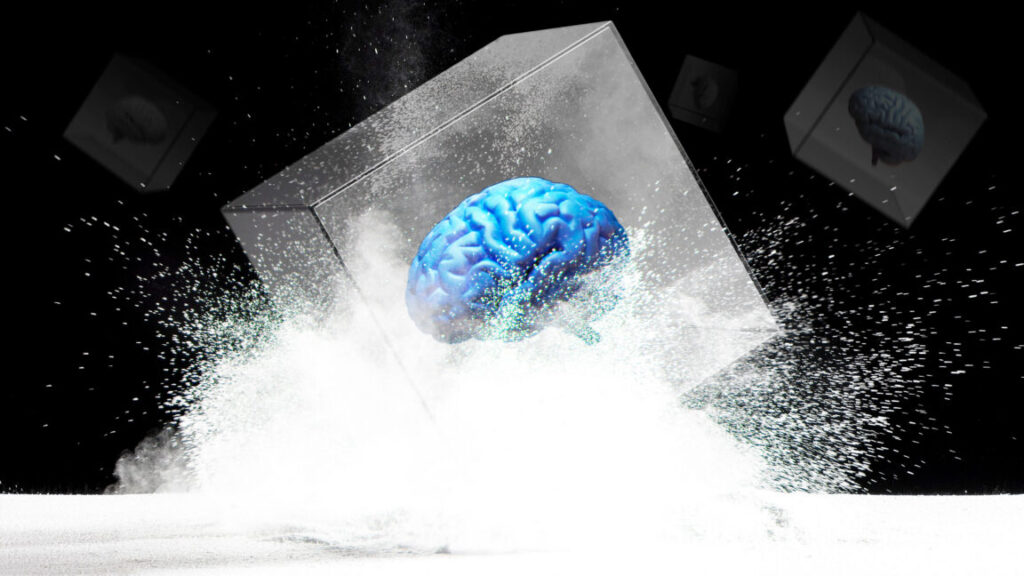

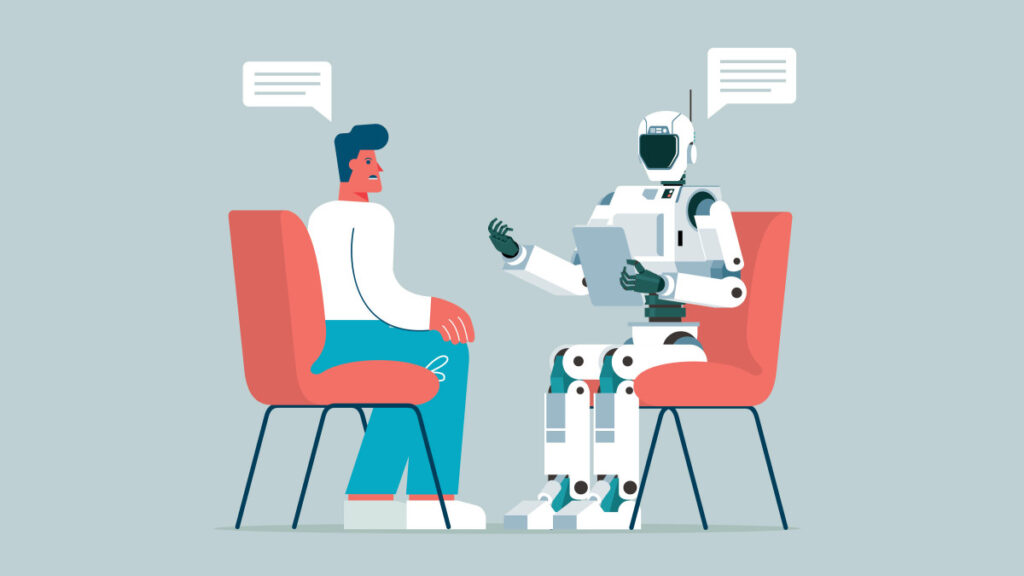

On Tuesday, eBay updated its User Agreement to explicitly ban third-party “buy for me” agents and AI chatbots from interacting with its platform without permission, a change first spotted by Value Added Resource. On its face, a one-line terms of service update doesn’t seem like major news, but what it implies is more significant: The change reflects the rapid emergence of what some are calling “agentic commerce,” a new category of AI tools designed to browse, compare, and purchase products on behalf of users.

eBay’s updated terms, which go into effect on February 20, 2026, specifically prohibit users from employing “buy-for-me agents, LLM-driven bots, or any end-to-end flow that attempts to place orders without human review” to access eBay’s services without the site’s permission. The previous version of the agreement contained a general prohibition on robots, spiders, scrapers, and automated data gathering tools but did not mention AI agents or LLMs by name.

At first glance, the phrase “agentic commerce” may sound like aspirational marketing jargon, but the tools are already here, and people are apparently using them. Although they fit loosely under one label, these tools come in many forms.

OpenAI first added shopping features to ChatGPT Search in April 2025, allowing users to browse product recommendations. By September, the company had launched Instant Checkout, which lets users purchase items from Etsy and Shopify merchants directly within the chat interface. (In November, eBay CEO Jamie Iannone suggested the company might join OpenAI’s Instant Checkout program in the future.)
