MCP: The new “USB-C for AI” that’s bringing fierce rivals together

Model context protocol standardizes how AI uses data sources, supported by OpenAI and Anthropic.

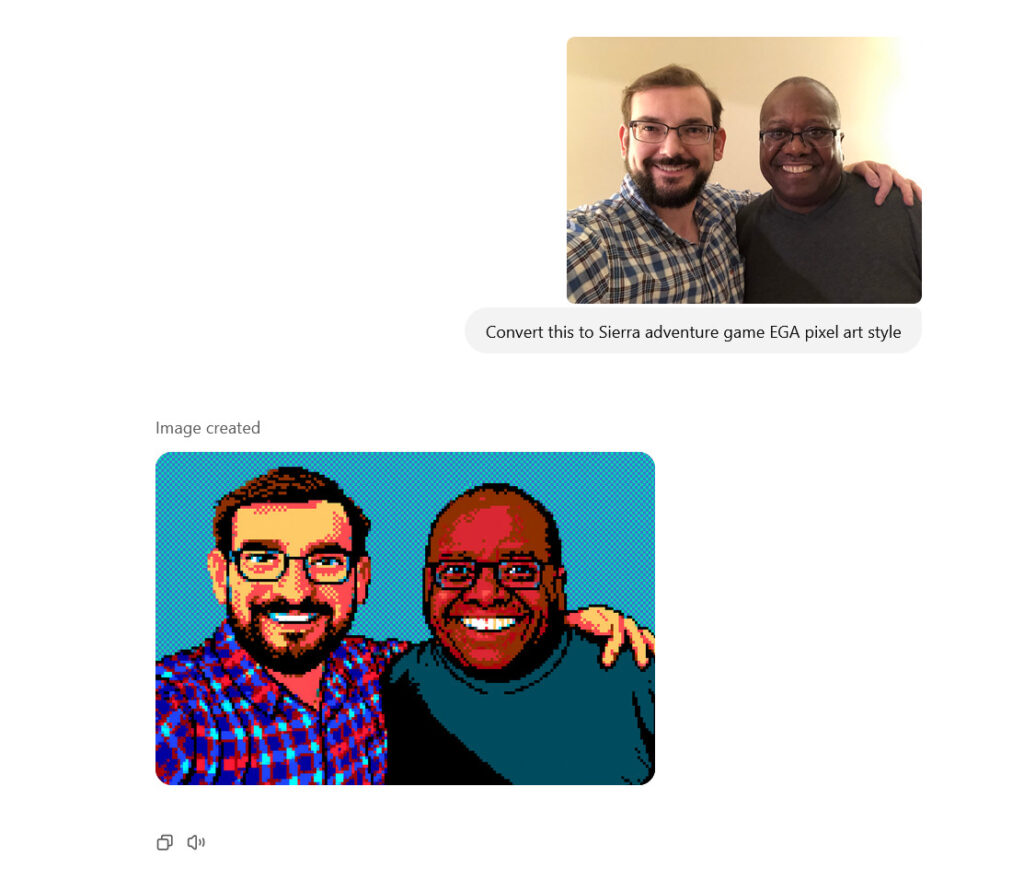

What does it take to get OpenAI and Anthropic—two competitors in the AI assistant market—to get along? Despite a fundamental difference in direction that led Anthropic’s founders to quit OpenAI in 2020 and later create the Claude AI assistant, a shared technical hurdle has now brought them together: How to easily connect their AI models to external data sources.

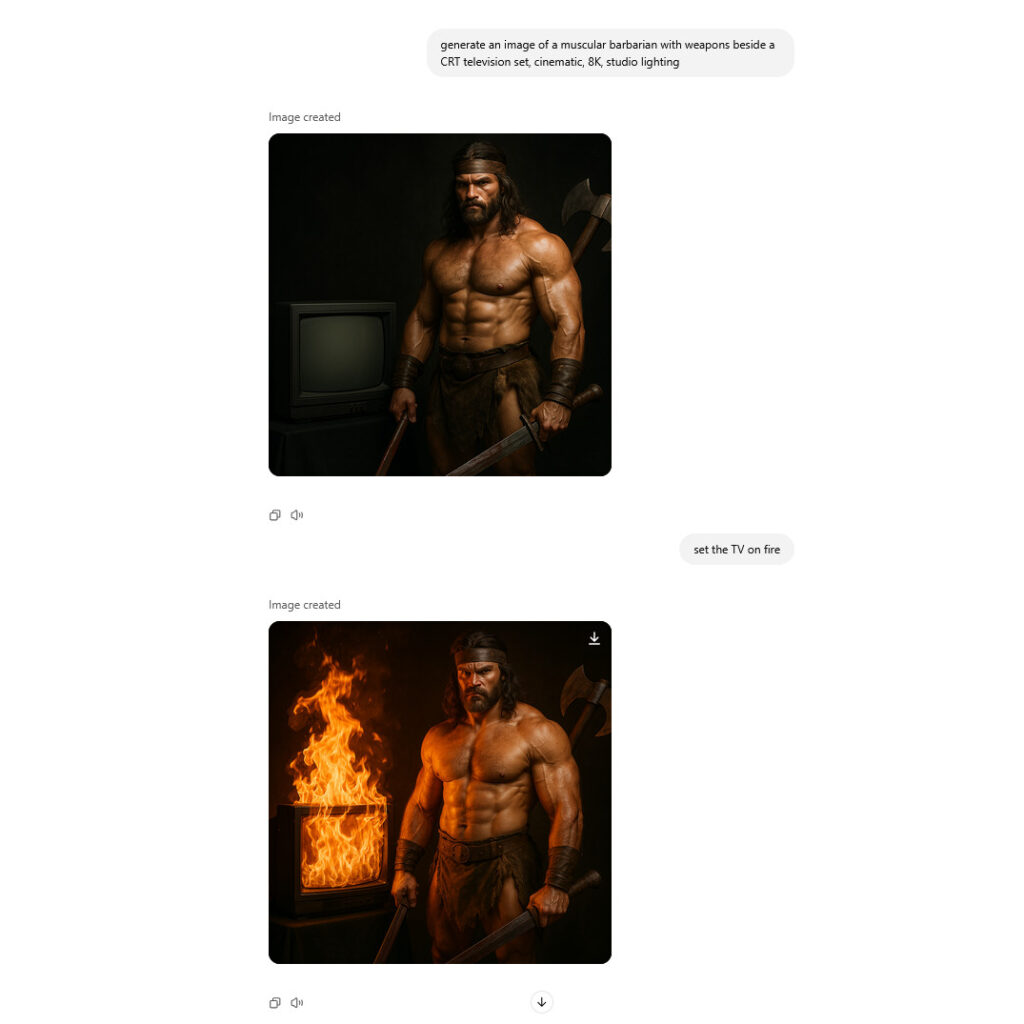

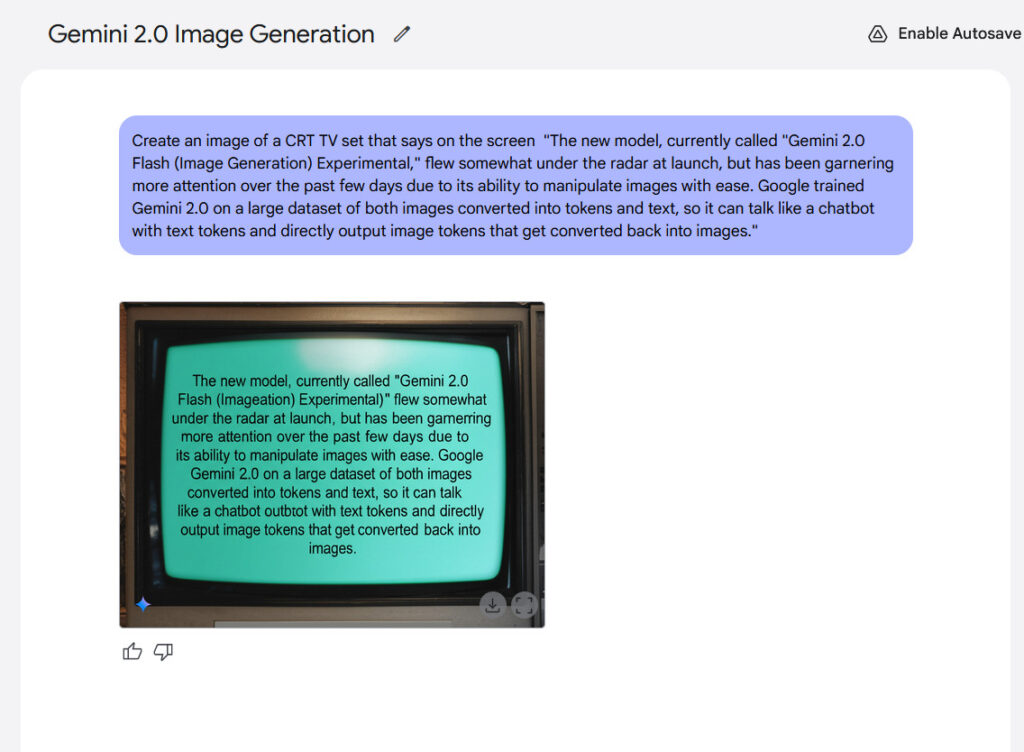

The solution comes from Anthropic, which developed and released an open specification called Model Context Protocol (MCP) in November 2024. MCP establishes a royalty-free protocol that allows AI models to connect with outside data sources and services without requiring unique integrations for each service.

“Think of MCP as a USB-C port for AI applications,” wrote Anthropic in MCP’s documentation. The analogy is imperfect, but it represents the idea that, similar to how USB-C unified various cables and ports (with admittedly a debatable level of success), MCP aims to standardize how AI models connect to the infoscape around them.

So far, MCP has also garnered interest from multiple tech companies in a rare show of cross-platform collaboration. For example, Microsoft has integrated MCP into its Azure OpenAI service, and as we mentioned above, Anthropic competitor OpenAI is on board. Last week, OpenAI acknowledged MCP in its Agents API documentation, with vocal support from the boss upstairs.

“People love MCP and we are excited to add support across our products,” wrote OpenAI CEO Sam Altman on X last Wednesday.

MCP has also rapidly begun to gain community support in recent months. For example, browsing a list of over 300 open source servers shared on GitHub reveals growing interest in standardizing AI-to-tool connections. The collection spans diverse domains, including database connectors like PostgreSQL, MySQL, and vector databases; development tools that integrate with Git repositories and code editors; file system access for various storage platforms; knowledge retrieval systems for documents and websites; and specialized tools for finance, health care, and creative applications.

Other notable examples include servers that connect AI models to home automation systems, real-time weather data, e-commerce platforms, and music streaming services. Some implementations allow AI assistants to interact with gaming engines, 3D modeling software, and IoT devices.

What is “context” anyway?

To fully appreciate why a universal AI standard for external data sources is useful, you’ll need to understand what “context” means in the AI field.

With current AI model architecture, what an AI model “knows” about the world is baked into its neural network in a largely unchangeable form. It is placed there by an initial procedure called “pre-training,” which calculates statistical relationships across vast quantities of input data (“training data,” such as books, articles, and images) and stores them in the network as numerical values called “weights.” Later, a process called “fine-tuning” might adjust those weights to alter behavior (such as through reinforcement learning like RLHF) or provide examples of new concepts.

Typically, the training phase is very expensive computationally and happens either only once in the case of a base model, or infrequently with periodic model updates and fine-tunings. That means AI models only have internal neural network representations of events prior to a “cutoff date” when the training dataset was finalized.

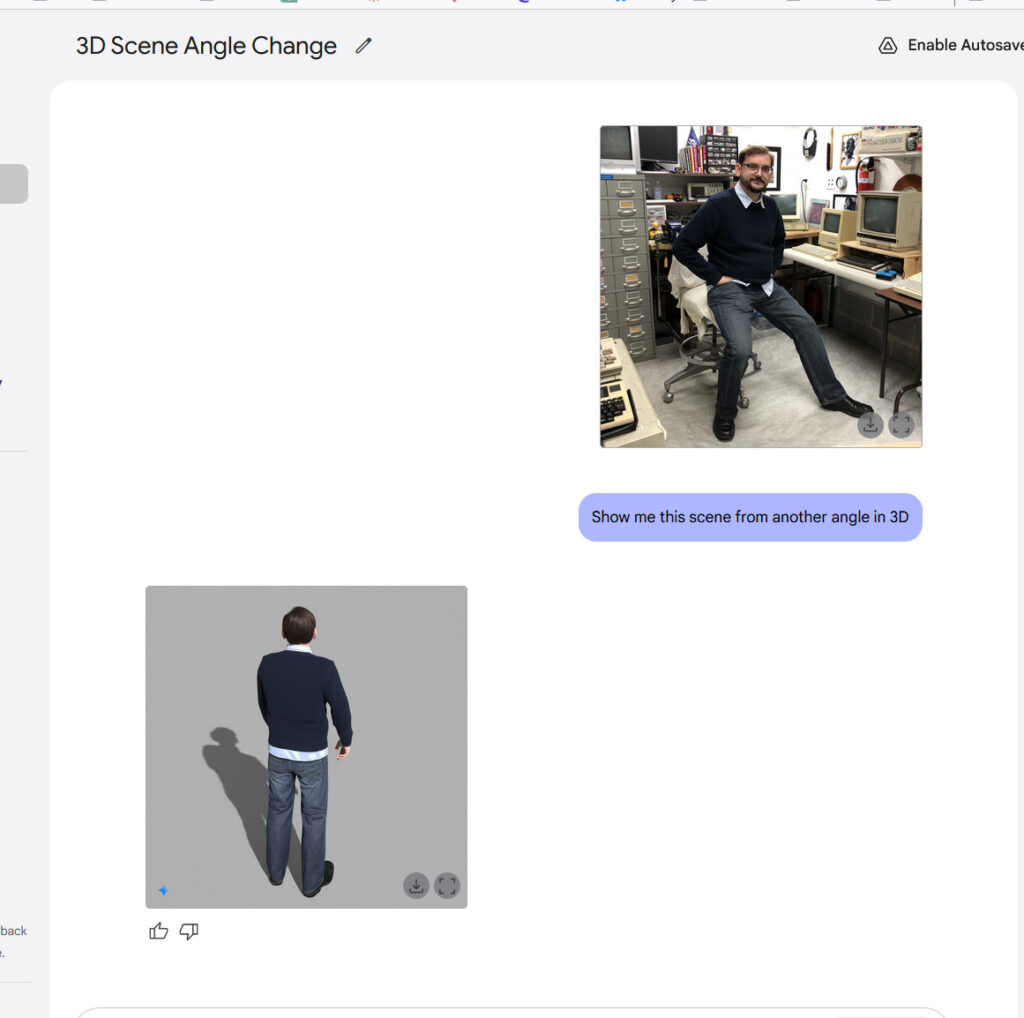

After that, the AI model is run in a kind of read-only mode called “inference,” where users feed inputs into the neural network to produce outputs, which are called “predictions.” They’re called predictions because the systems are tuned to predict the most likely next token (a chunk of data, such as portions of a word) in a user-provided sequence.
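To make “predict the most likely next token” concrete, here is a toy sketch. It is not how a neural network works internally: it simply counts which word follows which in a tiny sample text (a hypothetical stand-in for training data) and treats whole words as tokens.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """'Training': count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    words = text.split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, token):
    """'Inference': return the most frequent follower of `token`, or None if unseen."""
    followers = counts.get(token)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical training text; a real model sees vastly more data.
model = train_bigrams("the cat sat on the mat and the cat slept")
prediction = predict_next(model, "the")
```

Here “cat” follows “the” twice and “mat” only once, so the prediction for “the” is “cat.” The counts are fixed after training, which mirrors the read-only nature of inference described above.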

In the AI field, context is the user-provided sequence: all the data fed into an AI model that guides the model to produce a response output. This context includes the user’s input (the “prompt”), the running conversation history (in the case of chatbots), and any external information sources pulled into the conversation, including a “system prompt” that defines model behavior and “memory” systems that recall portions of past conversations. The limit on the amount of context a model can ingest at once is often called a “context window,” “context length,” or “context limit,” depending on personal preference.
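As a rough illustration of how those pieces add up, the sketch below assembles a context from a system prompt, external information, conversation history, and the user’s prompt, then checks it against a context window. The function names are hypothetical, and splitting on whitespace is only a stand-in for a real tokenizer, which breaks text into sub-word chunks.

```python
def assemble_context(system_prompt, external, history, prompt):
    """Join every source of context into the single sequence the model sees."""
    return "\n".join([system_prompt, *external, *history, prompt])


def count_tokens(context):
    """Crude token count: one token per whitespace-separated word (an assumption)."""
    return len(context.split())


def fits_window(context, window):
    """True if the whole context fits inside the model's context window."""
    return count_tokens(context) <= window


context = assemble_context(
    system_prompt="You are a support agent.",
    external=["Order 12345 shipped March 30."],
    history=["User: Hi"],
    prompt="Where is my order?",
)
token_count = count_tokens(context)
```

In this toy case the four pieces total 16 tokens, so the context fits a 16-token window but not a 15-token one. Everything pulled in from outside competes for the same limited space as the conversation itself.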

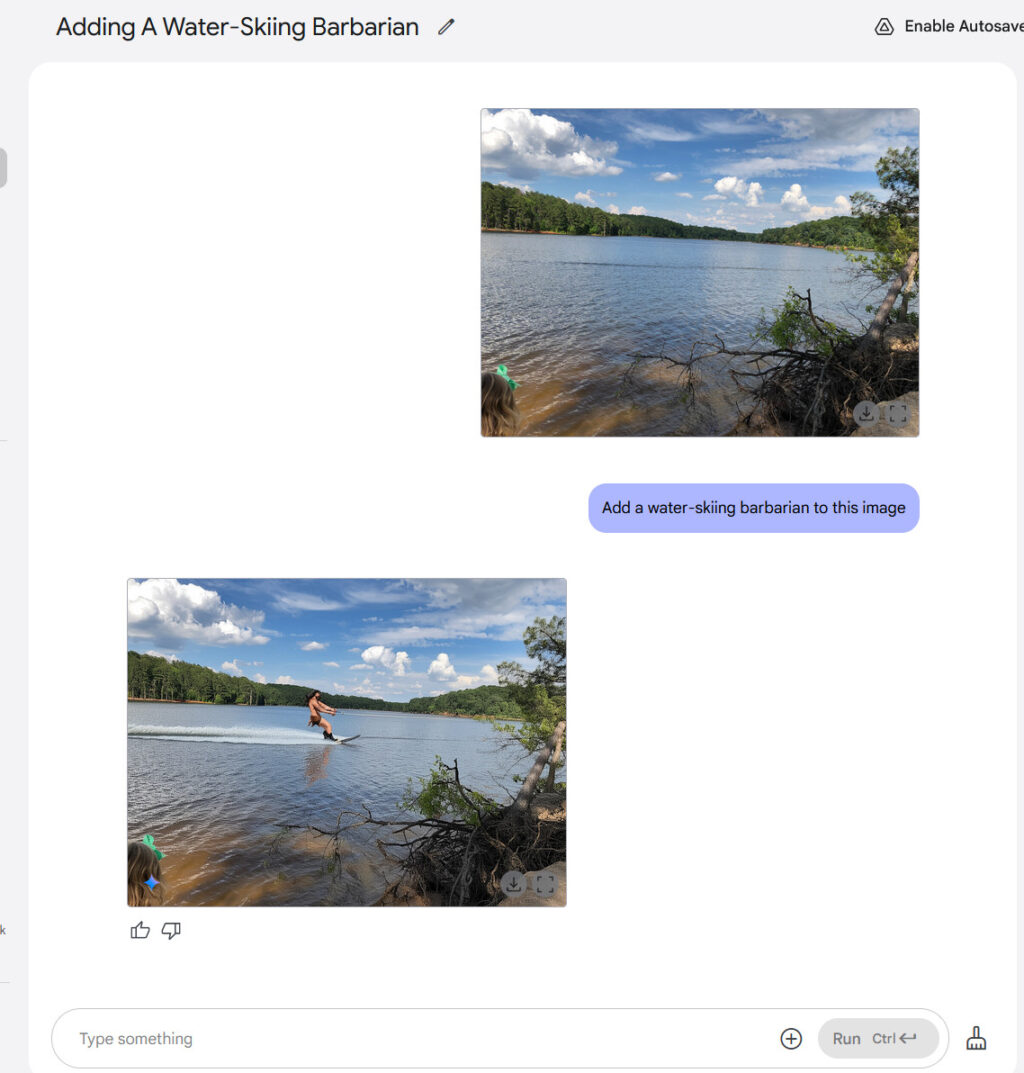

While the prompt provides important information for the model to operate upon, accessing external information sources has traditionally been cumbersome. Before MCP, AI assistants like ChatGPT and Claude could access external data (a process often called retrieval augmented generation, or RAG), but doing so required custom integrations for each service—plugins, APIs, and proprietary connectors that didn’t work across different AI models. Each new data source demanded unique code, creating maintenance challenges and compatibility issues.

MCP addresses these problems by providing a standardized method or set of rules (a “protocol”) that allows any supporting AI model framework to connect with external tools and information sources.

How does MCP work?

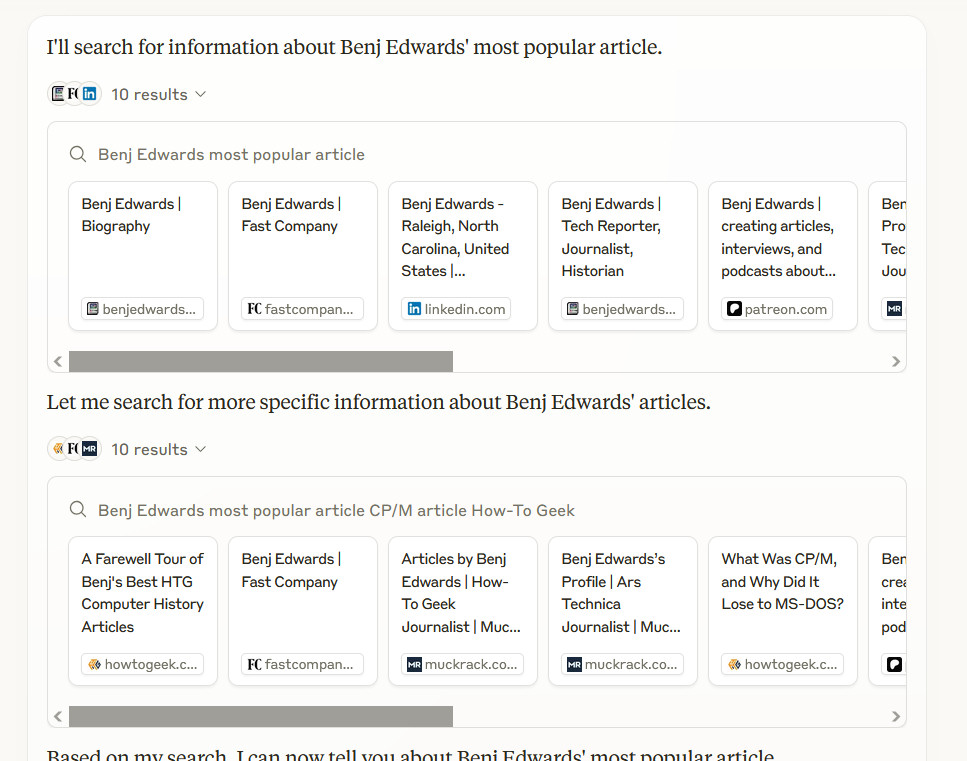

To make the connections behind the scenes between AI models and data sources, MCP uses a client-server model. An AI model (or its host application) acts as an MCP client that connects to one or more MCP servers. Each server provides access to a specific resource or capability, such as a database, search engine, or file system. When the AI needs information beyond its training data, it sends a request to the appropriate server, which performs the action and returns the result.

To illustrate how the client-server model works in practice, consider a customer support chatbot using MCP that could check shipping details in real time from a company database. “What’s the status of order #12345?” would trigger the AI to query an order database MCP server, which would look up the information and pass it back to the model. The model could then incorporate that data into its response: “Your order shipped on March 30 and should arrive April 2.”
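The order lookup can be sketched as a single request and response. MCP messages are JSON-RPC 2.0, and tool invocations use a `tools/call` method; the tool name `get_order_status`, the `order_id` argument, and the in-memory order table are hypothetical, and the message layout is simplified, so consult the specification for the exact schema.

```python
import json

# Hypothetical in-memory stand-in for the company's order database.
ORDERS = {"12345": {"shipped": "March 30", "arrives": "April 2"}}


def handle_request(raw):
    """Play the role of the order-database server for one tool-call request."""
    request = json.loads(raw)
    order_id = request["params"]["arguments"]["order_id"]
    order = ORDERS[order_id]
    text = f"Order {order_id} shipped on {order['shipped']}, arriving {order['arrives']}."
    response = {
        "jsonrpc": "2.0",
        "id": request["id"],  # echo the request id so the client can match the reply
        "result": {"content": [{"type": "text", "text": text}]},
    }
    return json.dumps(response)


# Client side: the host application turns the model's tool choice into a request.
request = json.dumps({
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "get_order_status", "arguments": {"order_id": "12345"}},
})
reply = json.loads(handle_request(request))
tool_text = reply["result"]["content"][0]["text"]
```

The returned text would then be added to the model’s context, which is how the shipping dates end up in the chatbot’s answer.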

Beyond specific use cases like customer support, the potential scope is very broad. Early developers have already built MCP servers for services like Google Drive, Slack, GitHub, and Postgres databases. This means AI assistants could potentially search documents in a company Drive, review recent Slack messages, examine code in a repository, or analyze data in a database—all through a standard interface.

From a technical implementation perspective, Anthropic designed the standard for flexibility, with servers running in two main modes: Some MCP servers operate locally on the same machine as the client (communicating via standard input-output streams), while others run remotely and stream responses over HTTP. In both cases, the model works with a list of available tools and calls them as needed.
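A minimal sketch of the local mode might look like the following, assuming one JSON-RPC message per line on the input and output streams. The `echo` tool and the registry layout are hypothetical, and the sketch leaves out parts of a real session, such as the initialization handshake and the input schema that accompanies each tool listing.

```python
import io
import json

# Hypothetical tool registry: name -> (description, function taking an arguments dict).
TOOLS = {
    "echo": ("Return the text it was given.", lambda args: args["text"]),
}


def dispatch(request):
    """Answer one JSON-RPC request with a tool list, a tool result, or an error."""
    if request["method"] == "tools/list":
        result = {"tools": [{"name": n, "description": d} for n, (d, _) in TOOLS.items()]}
    elif request["method"] == "tools/call":
        _, func = TOOLS[request["params"]["name"]]
        output = func(request["params"]["arguments"])
        result = {"content": [{"type": "text", "text": output}]}
    else:
        # -32601 is the standard JSON-RPC "method not found" code.
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32601, "message": "Method not found"}}
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


def serve(stdin, stdout):
    """Local mode: read one JSON message per line and write one reply per line."""
    for line in stdin:
        if line.strip():
            stdout.write(json.dumps(dispatch(json.loads(line))) + "\n")


# In-memory streams stand in for the real stdin/stdout of a server process.
incoming = io.StringIO(
    json.dumps({"jsonrpc": "2.0", "id": 1, "method": "tools/list"}) + "\n"
    + json.dumps({"jsonrpc": "2.0", "id": 2, "method": "tools/call",
                  "params": {"name": "echo", "arguments": {"text": "hi"}}}) + "\n"
)
outgoing = io.StringIO()
serve(incoming, outgoing)
replies = [json.loads(line) for line in outgoing.getvalue().splitlines()]
```

The first reply is the list of available tools and the second is the result of calling one of them, which matches the list-then-call pattern described above. A remote server would exchange the same kinds of messages over HTTP instead of local streams.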

A work in progress

Despite the growing ecosystem around MCP, the protocol remains an early-stage project. The announcements of support from major companies are promising first steps, but they are still limited in number, and MCP’s future as an industry standard may depend on broader acceptance. Even so, the number of MCP servers seems to be growing at a rapid pace.

Regardless of its ultimate adoption rate, MCP may have some interesting second-order effects. For example, it has the potential to reduce vendor lock-in. Because the protocol is model-agnostic, a company could switch from one AI provider to another while keeping the same tools and data connections intact.
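The lock-in argument can be sketched in a few lines, under heavy simplification. Both provider classes below are hypothetical stand-ins for different AI vendors, and plain functions stand in for MCP servers; the point is only that the tool registry is untouched when the provider changes.

```python
from typing import Callable, Protocol


class Provider(Protocol):
    """Anything that can choose a tool name for a prompt (a hypothetical interface)."""
    def pick_tool(self, prompt: str, tool_names: list[str]) -> str: ...


class VendorA:
    """Stand-in for one AI provider: substring match against the prompt."""
    def pick_tool(self, prompt, tool_names):
        return next((n for n in tool_names if n in prompt.lower()), tool_names[0])


class VendorB:
    """Stand-in for a rival provider: word-by-word match against the prompt."""
    def pick_tool(self, prompt, tool_names):
        words = set(prompt.lower().split())
        return next((n for n in tool_names if n in words), tool_names[0])


def answer(provider: Provider, tools: dict[str, Callable[[str], str]], prompt: str) -> str:
    """Let the provider choose a tool, then run it through the shared registry."""
    name = provider.pick_tool(prompt, list(tools))
    return tools[name](prompt)


# The same tool registry serves both vendors; the lambdas are placeholders.
TOOLS = {
    "weather": lambda prompt: "Sunny",
    "orders": lambda prompt: "Shipped",
}
result_a = answer(VendorA(), TOOLS, "Check my orders please")
result_b = answer(VendorB(), TOOLS, "Check my orders please")
```

Swapping `VendorA` for `VendorB` changes how the tool is chosen but requires no change to `TOOLS`, which is the property that would let a company change AI providers without rebuilding its integrations.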

MCP may also allow a shift toward smaller and more efficient AI systems that can interact more fluidly with external resources without the need for customized fine-tuning. Rather than building increasingly massive models with all knowledge baked in, companies may instead be able to use smaller models with large context windows.

For now, the future of MCP is wide open. Anthropic maintains MCP as an open source initiative on GitHub, where interested developers can either contribute to the code or find specifications about how it works. Anthropic has also provided extensive documentation about how to connect Claude to various services. OpenAI maintains its own API documentation for MCP on its website.
