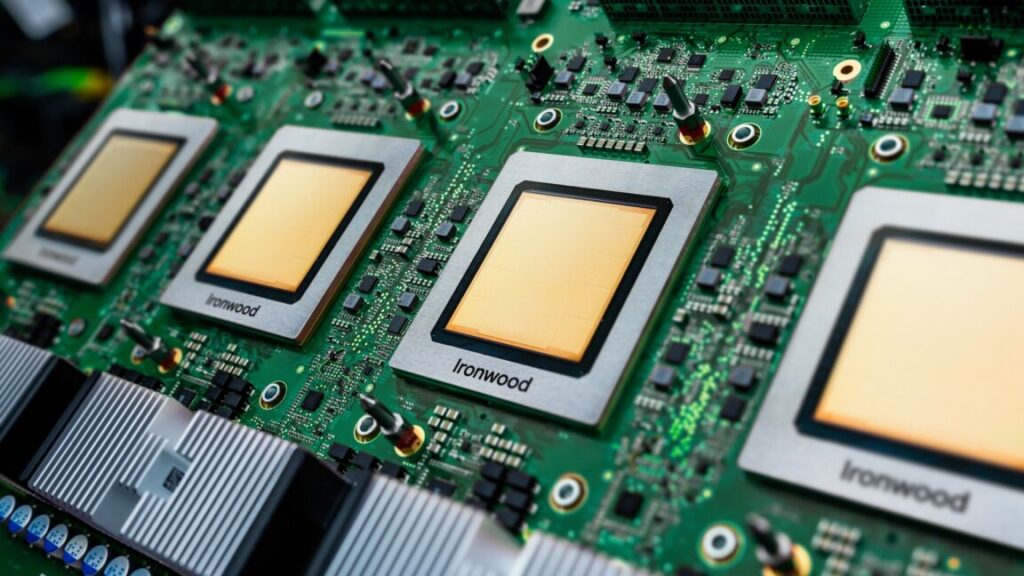

Google tells employees it must double capacity every 6 months to meet AI demand

While AI bubble talk fills the air these days, with fears of overinvestment that could pop at any time, something of a contradiction is brewing on the ground: Companies like Google and OpenAI can barely build infrastructure fast enough to fill their AI needs.

During an all-hands meeting earlier this month, Google’s AI infrastructure head Amin Vahdat told employees that the company must double its serving capacity every six months to meet demand for artificial intelligence services, CNBC reports. The comments offer a rare look at what Google executives are telling the company’s own employees. Vahdat, a vice president at Google Cloud, presented slides showing that the company needs to scale “the next 1000x in 4-5 years.”
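The two figures Vahdat cited are roughly consistent with each other, which a quick back-of-the-envelope check can show. The sketch below assumes clean exponential growth with a fixed six-month doubling period; the function names are illustrative and do not come from Google or CNBC.

```python
import math

# Assumption: capacity doubles exactly once every six months, with no gaps.
DOUBLING_PERIOD_YEARS = 0.5


def growth_factor(years, doubling_period=DOUBLING_PERIOD_YEARS):
    """Total capacity multiple after `years` of steady doubling."""
    return 2 ** (years / doubling_period)


def years_to_reach(target_factor, doubling_period=DOUBLING_PERIOD_YEARS):
    """Years of steady doubling needed to reach `target_factor`."""
    return math.log2(target_factor) * doubling_period


for years in (4, 5):
    print(f"{years} years -> {growth_factor(years):,.0f}x")

print(f"1000x takes about {years_to_reach(1000):.2f} years")
```

Under that assumption, eight doublings over four years yield 256x and ten doublings over five years yield 1,024x, so the "1000x" target sits at the five-year end of the stated range.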

While a thousandfold increase in compute capacity sounds ambitious by itself, Vahdat noted some key constraints: Google needs to be able to deliver this increase in capability, compute, and storage networking “for essentially the same cost and increasingly, the same power, the same energy level,” he told employees during the meeting. “It won’t be easy but through collaboration and co-design, we’re going to get there.”

It’s unclear how much of this “demand” represents organic user interest in AI capabilities versus the company integrating AI features into existing services like Search, Gmail, and Workspace. But whether or not people are using the features voluntarily, Google isn’t the only tech company struggling to keep up with a growing base of customers using AI services.

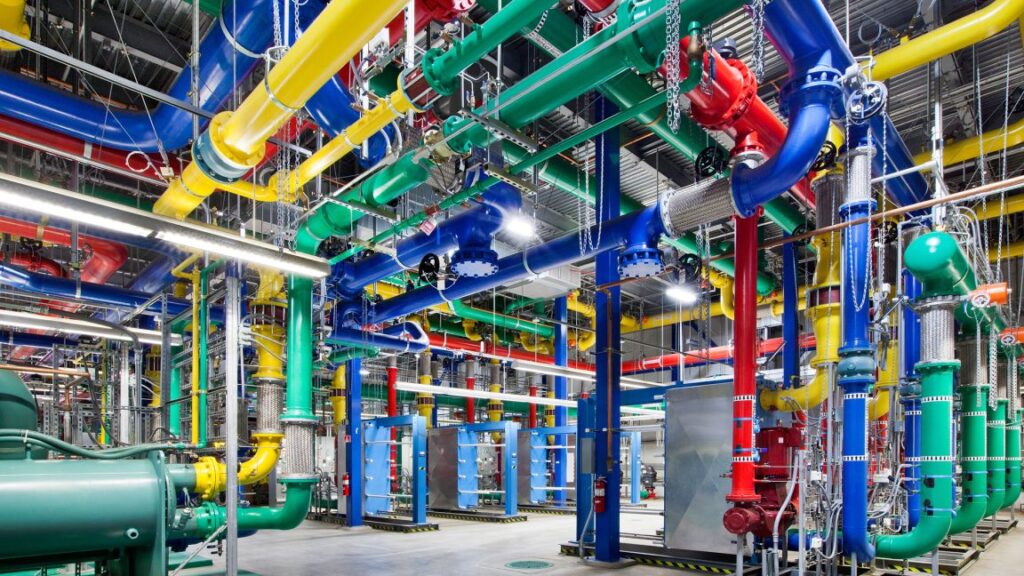

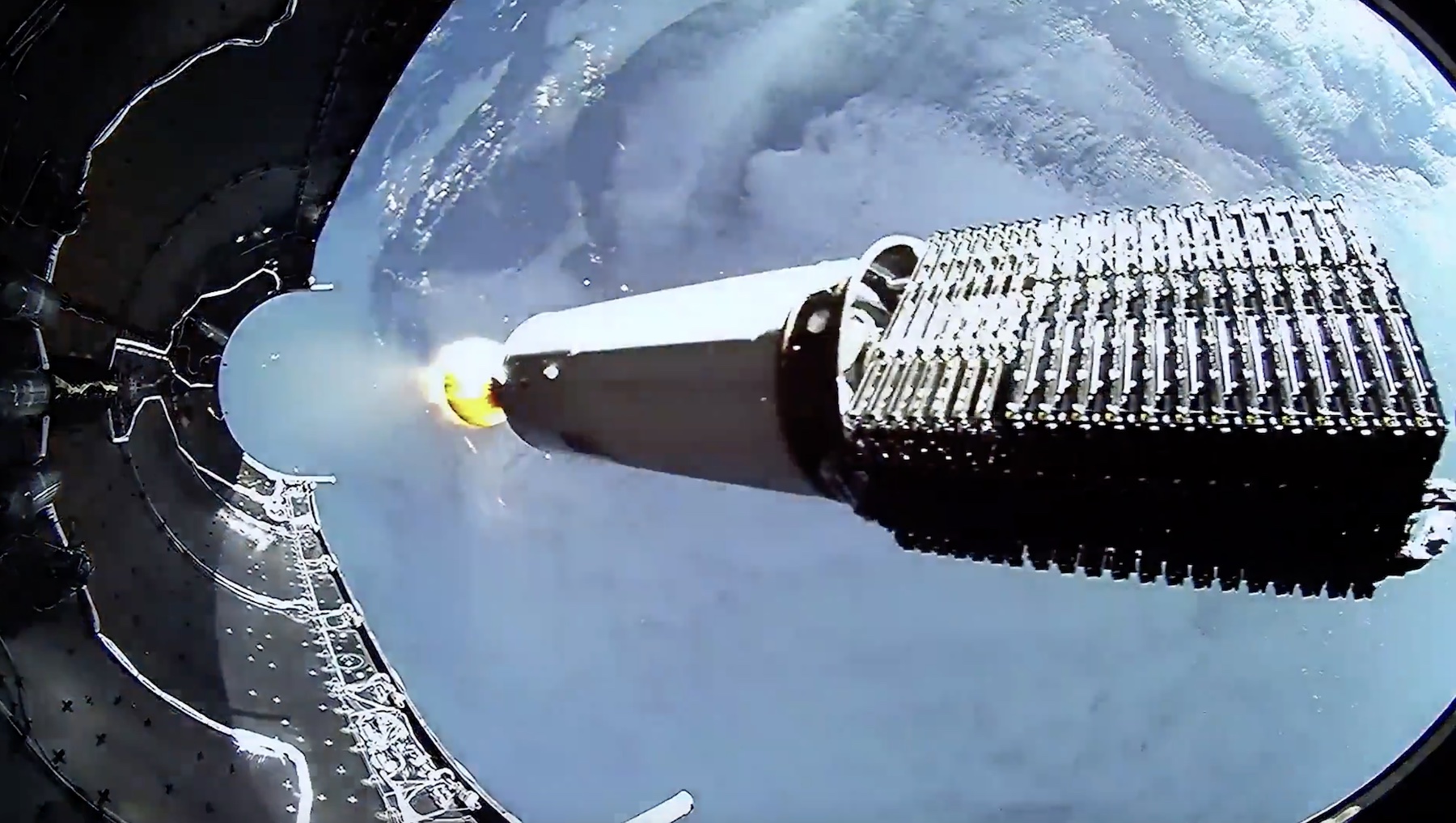

Major tech companies are in a race to build out data centers. Google competitor OpenAI is planning to build six massive data centers across the US through its Stargate partnership project with SoftBank and Oracle, committing over $400 billion in the next three years to reach nearly 7 gigawatts of capacity. The company faces similar constraints serving its 800 million weekly ChatGPT users, with even paid subscribers regularly hitting usage limits for features like video synthesis and simulated reasoning models.

“The competition in AI infrastructure is the most critical and also the most expensive part of the AI race,” Vahdat said at the meeting, according to CNBC’s viewing of the presentation. The infrastructure executive explained that Google’s challenge goes beyond simply outspending competitors. “We’re going to spend a lot,” he said, but noted the real objective is building infrastructure that is “more reliable, more performant and more scalable than what’s available anywhere else.”
