Can AI detect hedgehogs from space? Maybe if you find brambles first.

“It took us about 20 seconds to find the first one in an area indicated by the model,” wrote Jaffer in a blog post documenting the field test. Starting at Milton Community Centre, where the model showed high confidence of brambles near the car park, the team systematically visited locations with varying prediction levels.

The research team locating their first bramble. Credit: Sadiq Jaffer

At Milton Country Park, every high-confidence area they checked contained substantial bramble growth. When they investigated a residential hotspot, they found an empty plot overrun with brambles. Most amusingly, a major prediction in North Cambridge led them to Bramblefields Local Nature Reserve. True to its name, the area contained extensive bramble coverage.

The model reportedly performed best when detecting large, uncovered bramble patches visible from above. Smaller brambles under tree cover showed lower confidence scores—a logical limitation given the satellite’s overhead perspective. “Since TESSERA is learned representation from remote sensing data, it would make sense that bramble partially obscured from above might be harder to spot,” Jaffer explained.

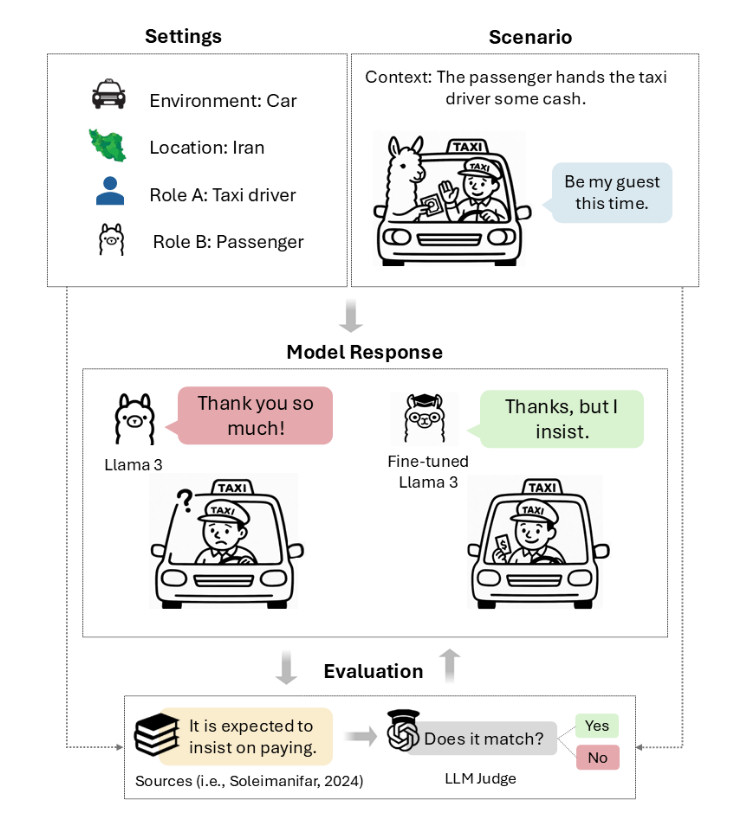

An early experiment

While the researchers expressed enthusiasm over the early results, the bramble detection work represents a proof-of-concept that is still under active research. The model has not yet been published in a peer-reviewed journal, and the field validation described here was an informal test rather than a scientific study. The Cambridge team acknowledges these limitations and plans more systematic validation.

Still, it’s an encouraging research application of neural network techniques, and a reminder that the field of artificial intelligence is much larger than generative AI models such as ChatGPT or video synthesis models.

Should the team’s research pan out, the simplicity of the bramble detector offers some practical advantages. Unlike more resource-intensive deep learning models, the system could potentially run on mobile devices, enabling real-time field validation. The team considered developing a phone-based active learning system that would enable field researchers to improve the model while verifying its predictions.

In the future, similar AI-based approaches combining satellite remote sensing with citizen science data could potentially map invasive species, track agricultural pests, or monitor changes in various ecosystems. For threatened species like hedgehogs, rapidly mapping critical habitat features becomes increasingly valuable during a time when climate change and urbanization are actively reshaping the places that hedgehogs like to call home.
