AI in Wyoming may soon use more electricity than state’s human residents

Wyoming’s data center boom

Cheyenne is no stranger to data centers, having attracted facilities from Microsoft and Meta since 2012 due to its cool climate and energy access. However, the new project pushes the state into uncharted territory. Wyoming is the nation’s third-biggest net energy supplier, producing 12 times more total energy than it consumes, most of it from fossil fuels, but its electricity supply is finite.

Tallgrass and Crusoe have announced the partnership but have not revealed who will ultimately use all this computing power, which has prompted speculation about potential tenants.

A potential connection to OpenAI’s Stargate AI infrastructure project, announced in January, remains a subject of speculation. When asked by The Associated Press if the Cheyenne project was part of this effort, Crusoe spokesperson Andrew Schmitt was noncommittal. “We are not at a stage that we are ready to announce our tenant there,” Schmitt said. “I can’t confirm or deny that it’s going to be one of the Stargate.”

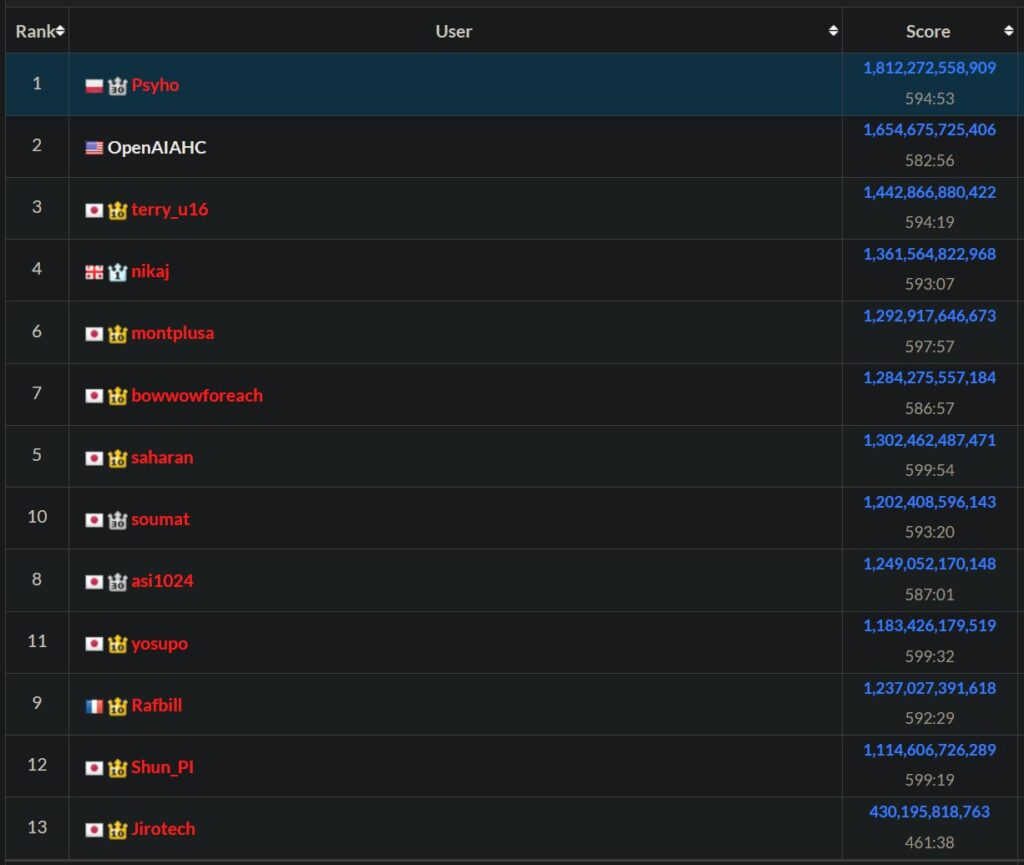

OpenAI recently activated the first phase of a Crusoe-built data center complex in Abilene, Texas, in partnership with Oracle. Chris Lehane, OpenAI’s chief global affairs officer, told The Associated Press last week that the Texas facility generates “roughly and depending how you count, about a gigawatt of energy” and represents “the largest data center—we think of it as a campus—in the world.”

OpenAI has committed to developing an additional 4.5 gigawatts of data center capacity through an agreement with Oracle. “We’re now in a position where we have, in a really concrete way, identified over five gigawatts of energy that we’re going to be able to build around,” Lehane told the AP. The company has not disclosed locations for these expansions, and Wyoming was not among the 16 states where OpenAI said it was searching for data center sites earlier this year.
