Physics of badminton’s new killer spin serve

Serious badminton players are constantly exploring different techniques to give them an edge over opponents. One of the latest innovations is the spin serve, a devastatingly effective method in which a player adds a pre-spin just before the racket contacts the shuttlecock (aka the birdie). It’s so effective (some have called it “impossible to return”) that the Badminton World Federation (BWF) banned the spin serve in 2023, at least until after the 2024 Paralympic Games in Paris.

The sanction wasn’t meant to quash innovation but to address players’ concerns about the possible unfair advantages the spin serve conferred. The BWF thought that international tournaments shouldn’t become the test bed for the technique, which is markedly similar to the previously banned “Sidek serve.” The BWF permanently banned the spin serve earlier this year. Chinese physicists have now teased out the complex fundamental physics of the spin serve, publishing their findings in the journal Physics of Fluids.

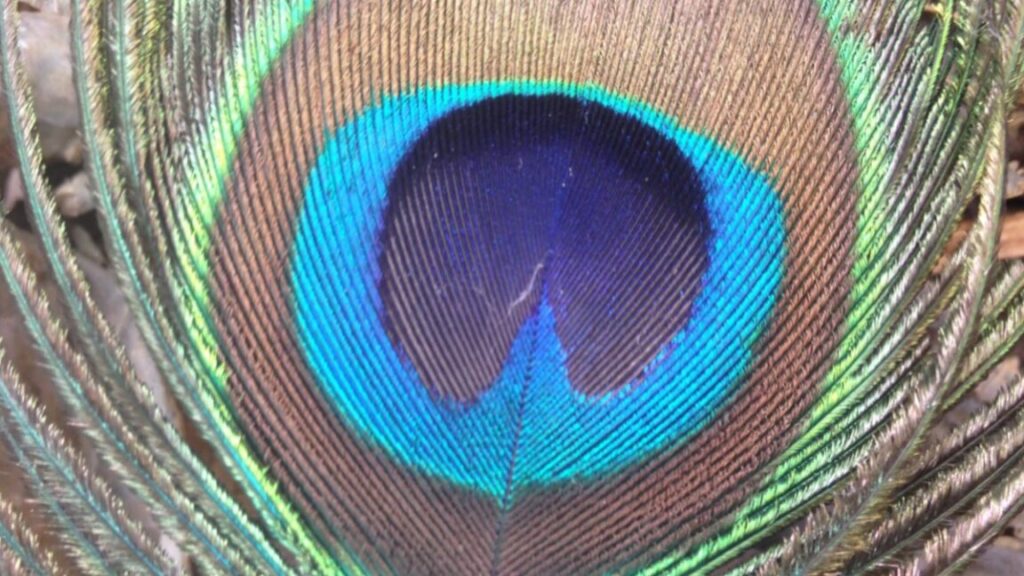

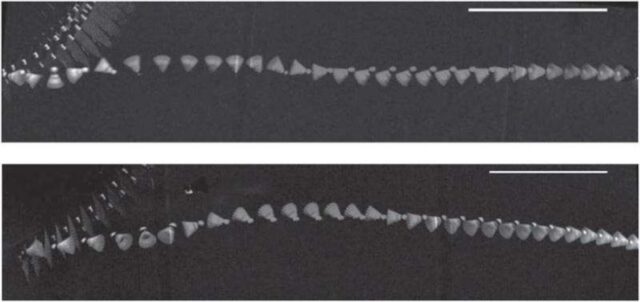

Shuttlecocks are unique among the various projectiles used in different sports due to their open conical shape. Sixteen overlapping feathers protrude from a rounded cork base that is usually covered in thin leather. The birdies one uses for leisurely backyard play might be synthetic nylon, but serious players prefer actual feathers.

Those overlapping feathers generate substantial drag, so the shuttlecock decelerates rapidly in flight and its trajectory, rather than tracing a symmetric parabola, falls at a steeper angle than it rises. The extra drag also means that players must exert quite a bit of force to hit a shuttlecock the full length of a badminton court. Still, shuttlecocks can achieve top speeds of more than 300 mph. The feathers also give the birdie a slight natural spin around its axis, and this can affect different strokes. For instance, slicing from right to left, rather than vice versa, will produce a better tumbling net shot.
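To make the effect of drag on the flight path concrete, here is a minimal sketch that treats the shuttlecock as a point mass subject to gravity and quadratic air drag. It is not the model used in the studies discussed here. The function name `simulate`, the step size, and the terminal speed of 6.7 m/s are illustrative assumptions, and spin and the cork-first flip are ignored entirely.

```python
import math

def simulate(speed, angle_deg, terminal_speed=6.7, g=9.81, dt=1e-4):
    """Integrate a point mass with quadratic drag until it falls back to launch height.

    terminal_speed (m/s) is an assumed illustrative value; it sets the drag
    strength through k = g / terminal_speed**2.
    Returns (horizontal range in m, descent angle below horizontal in degrees).
    """
    k = g / terminal_speed ** 2          # drag coefficient per unit mass (1/m)
    theta = math.radians(angle_deg)
    vx, vy = speed * math.cos(theta), speed * math.sin(theta)
    x = y = 0.0
    while True:
        v = math.hypot(vx, vy)
        # Drag opposes the velocity and grows with the square of the speed.
        ax = -k * v * vx
        ay = -g - k * v * vy
        # Semi-implicit Euler step: update velocity first, then position.
        vx += ax * dt
        vy += ay * dt
        x += vx * dt
        y += vy * dt
        if y <= 0.0 and vy < 0.0:
            break
    descent_angle = math.degrees(math.atan2(-vy, vx))
    return x, descent_angle

if __name__ == "__main__":
    launch_angle = 40.0
    distance, descent = simulate(speed=30.0, angle_deg=launch_angle)
    print(f"launch angle:  {launch_angle:.1f} deg")
    print(f"descent angle: {descent:.1f} deg")
    print(f"range:         {distance:.2f} m")
```

Under these assumptions the descent angle comes out steeper than the launch angle, and the range is far shorter than the drag-free value. Setting `terminal_speed` to a very large number switches drag off in effect and recovers the symmetric parabola.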

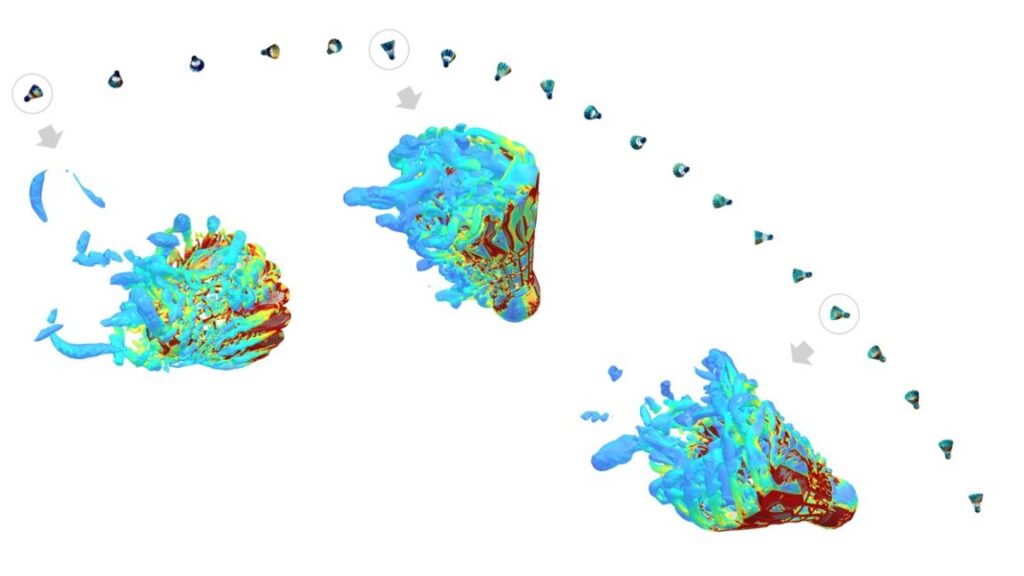

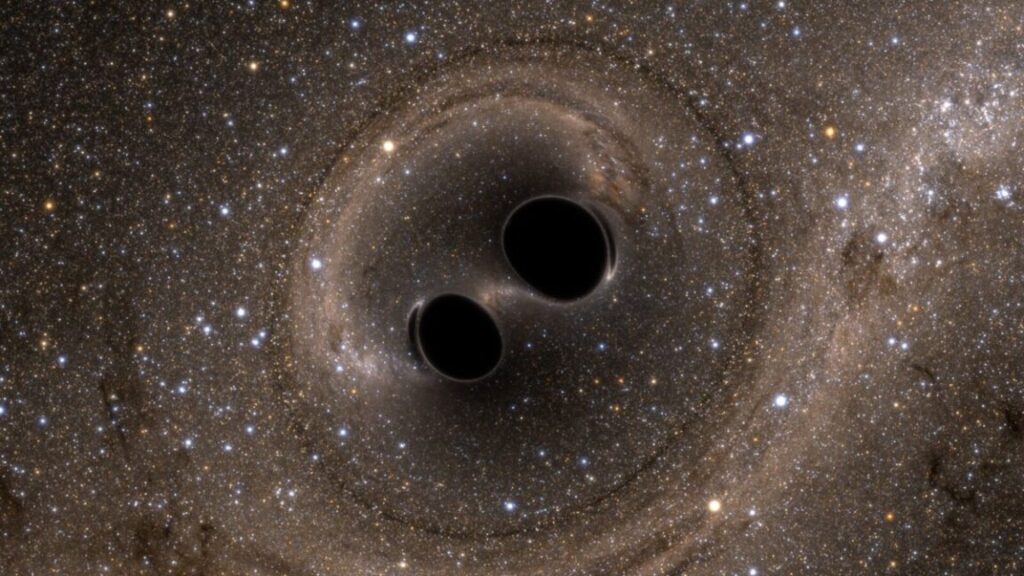

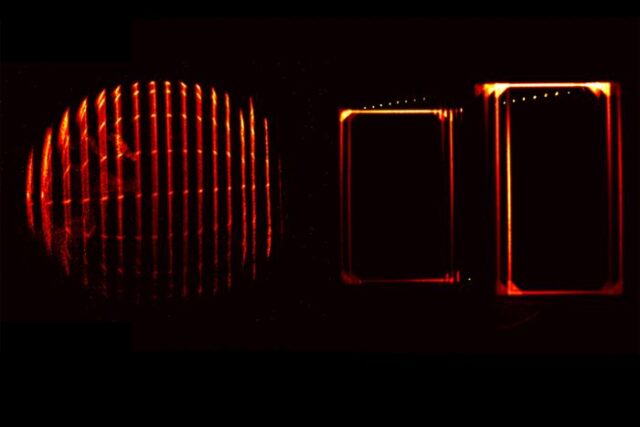

Chronophotographies of shuttlecocks after an impact with a racket. Credit: Caroline Cohen et al., 2015

The cork base makes the birdie aerodynamically stable: No matter how one orients the birdie, once airborne, it will turn so that it is traveling cork-first and will maintain that orientation throughout its trajectory. A 2015 study examined the physics of this trademark flip, recording flips with high-speed video and conducting free-fall experiments in a water tank to study how the shuttlecock’s geometry affects its behavior. The water-tank experiments confirmed that shuttlecock feather geometry hits a sweet spot, with an opening inclination angle that is neither too small nor too large. The researchers also found that feather shuttlecocks are indeed better than synthetic ones, deforming more when hit to produce a more triangular trajectory.
