Microsoft demonstrates working qubits based on exotic physics

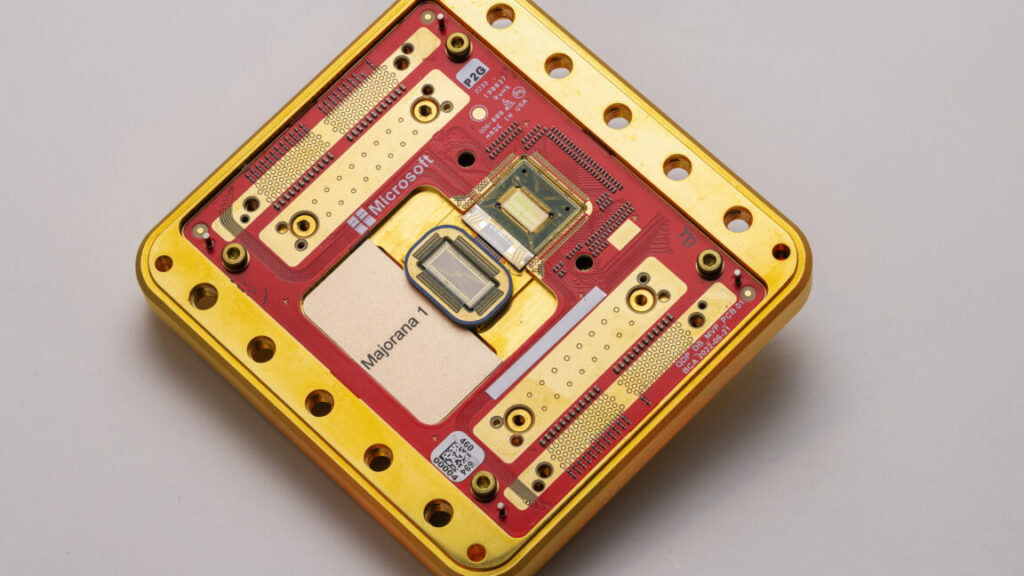

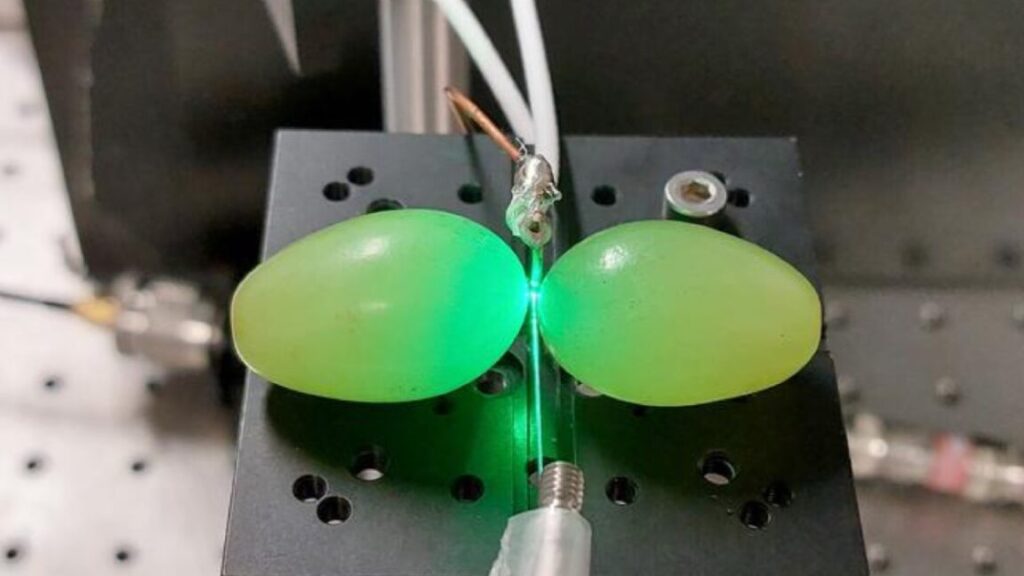

Microsoft’s first entry into quantum hardware comes in the form of Majorana 1, a processor with eight of these qubits.

Given that some of its competitors have hardware that supports over 1,000 qubits, why does the company feel it can still be competitive? Nayak described three key features of the hardware that he feels will eventually give Microsoft an advantage.

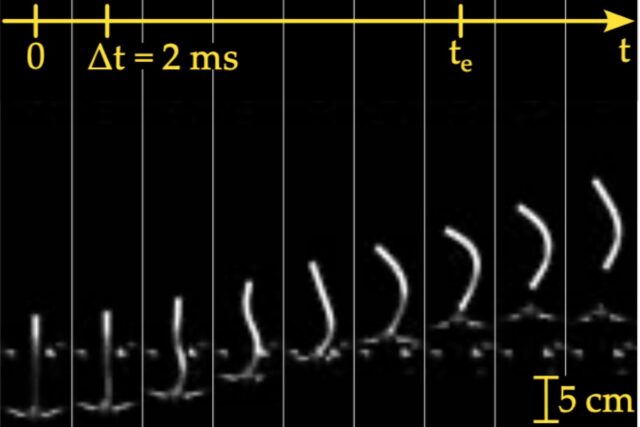

The first has to do with the fundamental physics that governs the energy needed to break apart one of the Cooper pairs in the topological superconductor, an event that could destroy the information held in the qubit. There are a number of ways to potentially increase this energy, from lowering the temperature to making the indium arsenide wire longer. As things currently stand, Nayak said that small changes in any of these can produce a large increase in the energy gap, making it relatively easy to boost the system’s stability.

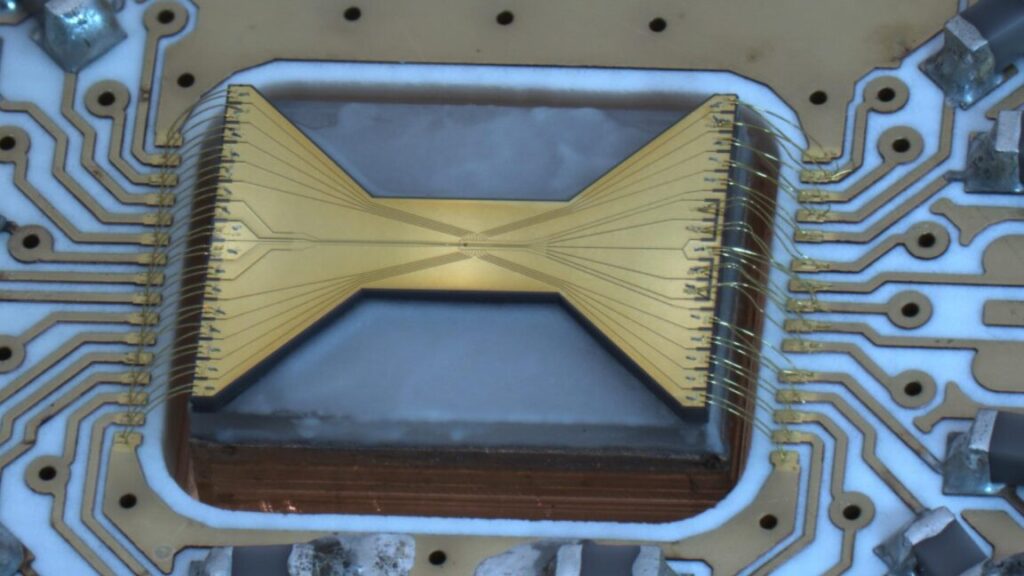

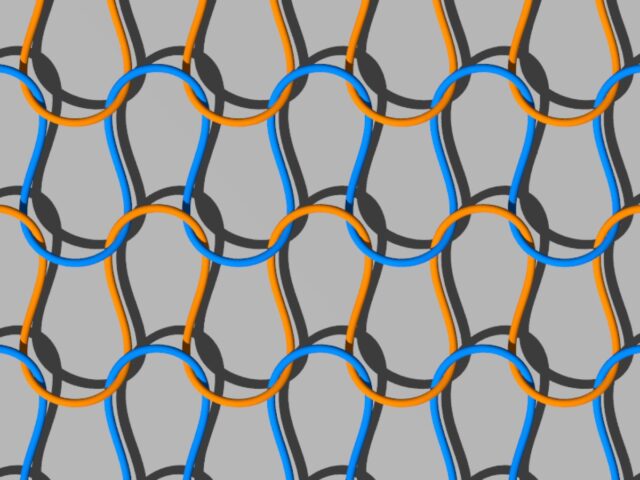

Another key feature, he argued, is that the hardware is relatively small. He estimated that it should be possible to place a million qubits on a single chip. “Even if you put in margin for control structures and wiring and fan out, it’s still a few centimeters by a few centimeters,” Nayak said. “That was one of the guiding principles of our qubits.” So unlike some other technologies, the topological qubits won’t require anyone to figure out how to link separate processors into a single quantum system.
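As a rough sanity check on that figure (the arithmetic is ours, not Microsoft's), the short Python sketch below works out how much room each qubit gets if a million of them are spread evenly across a square chip. The chip sizes of 1, 2, and 3 centimeters are assumptions standing in for "a few centimeters," and the function name `qubit_pitch_um` is purely illustrative. The result is the total budget per qubit, including whatever share of control structures and wiring sits alongside it.

```python
import math


def qubit_pitch_um(chip_side_cm: float, n_qubits: int) -> float:
    """Average spacing, in micrometers, if n_qubits evenly tile a square chip.

    This is a back-of-the-envelope estimate, not a description of
    Microsoft's actual layout.
    """
    side_um = chip_side_cm * 1e4  # 1 cm = 10,000 micrometers
    area_per_qubit_um2 = side_um ** 2 / n_qubits
    return math.sqrt(area_per_qubit_um2)


# Assumed chip sizes standing in for "a few centimeters by a few centimeters".
for side_cm in (1.0, 2.0, 3.0):
    pitch = qubit_pitch_um(side_cm, 1_000_000)
    print(f"{side_cm:.0f} cm chip: about {pitch:.0f} micrometers per qubit, per side")
```

Under these assumptions, each qubit and its share of supporting hardware would have to fit in a square a few tens of micrometers on a side.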

Finally, all the measurements that control the system run through the quantum dot, and controlling the dot is relatively simple. “Our qubits are voltage-controlled,” Nayak told Ars. “What we’re doing is just turning on and off coupling of quantum dots to qubits to topological nano wires. That’s a digital signal that we’re sending, and we can generate those digital signals with a cryogenic controller. So we actually put classical control down in the cold.”
