Google gets an error-corrected quantum bit to be stable for an hour

Using almost the entire chip for a logical qubit provides long-term stability.

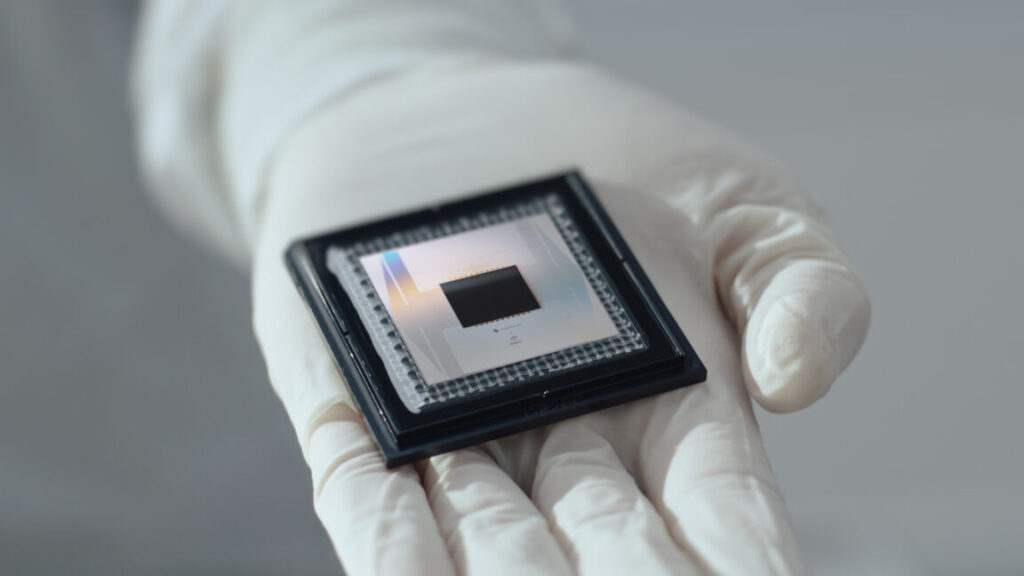

Google’s new Willow chip is its first new generation of chips in about five years. Credit: Google

On Monday, Nature released a paper from Google’s quantum computing team that provides a key demonstration of the potential of quantum error correction. Thanks to an improved processor, Google’s team found that increasing the number of hardware qubits dedicated to an error-corrected logical qubit led to an exponential increase in performance. By the time the entire 105-qubit processor was dedicated to hosting a single error-corrected qubit, the system was stable for an average of an hour.

In fact, Google told Ars that errors on this single logical qubit were rare enough that it was difficult to study them. The work provides a significant validation that quantum error correction is likely to be capable of supporting the execution of complex algorithms that might require hours to execute.

A new fab

Google is making a number of announcements in association with the paper’s release (an earlier version of the paper has been up on the arXiv since August). One of those is that the company is committed enough to its quantum computing efforts that it has built its own fabrication facility for its superconducting processors.

“In the past, all the Sycamore devices that you’ve heard about were fabricated in a shared university clean room space next to graduate students and people doing kinds of crazy stuff,” Google’s Julian Kelly said. “And we’ve made this really significant investment in bringing this new facility online, hiring staff, filling it with tools, transferring their process over. And that enables us to have significantly more process control and dedicated tooling.”

That’s likely to be a critical step for the company, as the ability to fabricate smaller test devices can allow the exploration of lots of ideas on how to structure the hardware to limit the impact of noise. The first publicly announced product of this lab is the Willow processor, Google’s second design, which ups its qubit count to 105. Kelly said one of the changes that came with Willow actually involved making the individual pieces of the qubit larger, which makes them somewhat less susceptible to the influence of noise.

All of that led to a lower error rate, which was critical for the work done in the new paper. This was demonstrated by running Google’s favorite benchmark, one that it acknowledges is contrived in a way to make quantum computing look as good as possible. Still, people have figured out how to make algorithm improvements for classical computers that have kept them mostly competitive. But, with all the improvements, Google expects that the quantum hardware has moved firmly into the lead. “We think that the classical side will never outperform quantum in this benchmark because we’re now looking at something on our new chip that takes under five minutes, would take 10²⁵ years, which is way longer than the age of the Universe,” Kelly said.

Building logical qubits

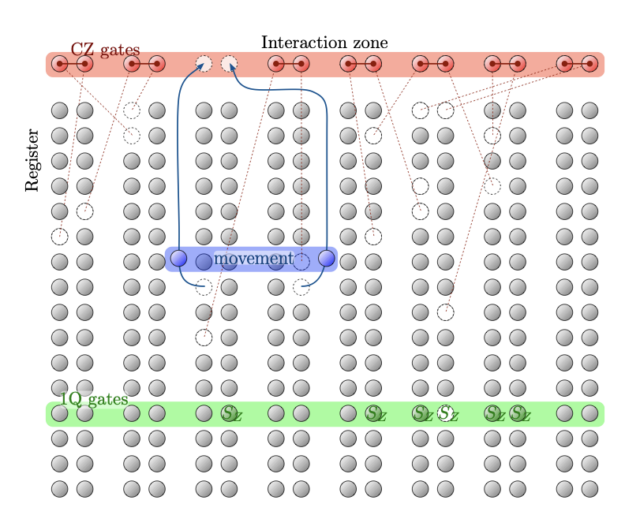

The work focuses on the behavior of logical qubits, in which a collection of individual hardware qubits are grouped together in a way that enables errors to be detected and corrected. These are going to be essential for running any complex algorithms, since the hardware itself experiences errors often enough to make some inevitable during any complex calculations.

This naturally creates a key milestone. You can get better error correction by adding more hardware qubits to each logical qubit. If each of those hardware qubits produces errors at a sufficient rate, however, then you’ll experience errors faster than you can correct for them. You need to get hardware qubits of a sufficient quality before you start benefitting from larger logical qubits. Google’s earlier hardware had made it past that milestone, but only barely. Adding more hardware qubits to each logical qubit only made for a marginal improvement.

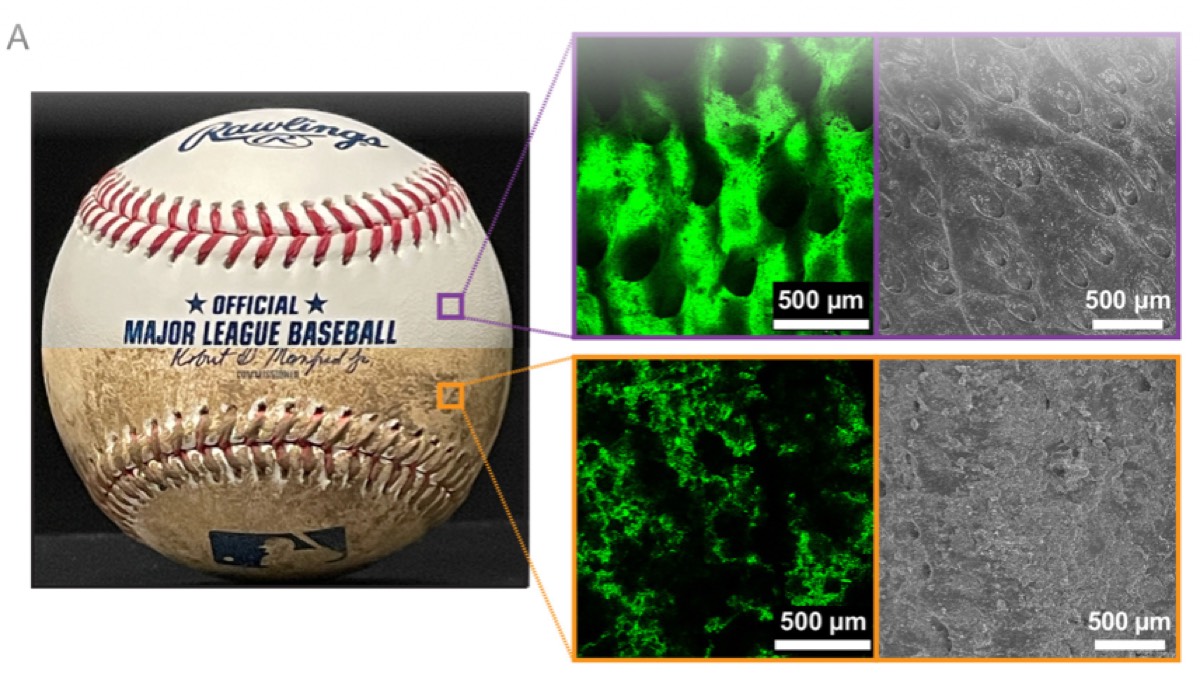

That’s no longer the case. Google’s processors have the hardware qubits laid out on a square grid, with each connected to its nearest neighbors (typically four except at the edges of the grid). And there’s a specific error correction code structure, called the surface code, that fits neatly into this grid. And you can use surface codes of different sizes by using progressively more of the grid. The size of the grid being used is measured by a term called distance, with larger distance meaning a bigger logical qubit, and thus better error correction.

(In addition to a standard surface code, Google includes a few qubits that handle a phenomenon called “leakage,” where a qubit ends up in a higher-energy state, instead of the two low-energy states defined as zero and one.)
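To make the link between distance and qubit count concrete, here is a minimal sketch. It assumes the textbook "rotated" surface code layout, in which a distance-d code uses a d-by-d grid of data qubits plus d² − 1 measurement qubits. The article does not spell out this layout, the function name is hypothetical, and the count ignores the extra leakage-handling qubits Google adds.

```python
def surface_code_qubits(distance: int) -> dict:
    """Qubit counts for a rotated surface code of odd distance d.

    Assumption: the standard textbook layout, not Google's exact design.
    Leakage-handling qubits are not included.
    """
    if distance < 3 or distance % 2 == 0:
        raise ValueError("distance must be an odd integer >= 3")
    data = distance * distance          # d x d grid holding the encoded state
    measure = distance * distance - 1   # qubits used to detect errors
    return {"data": data, "measure": measure, "total": data + measure}


for d in (3, 5, 7):
    print(d, surface_code_qubits(d))
```

Under these assumptions, each step up in distance roughly doubles or triples the hardware needed for a single logical qubit.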

The key result is that going from a distance of three to a distance of five more than doubled the ability of the system to catch and correct errors. Going from a distance of five to a distance of seven doubled it again. That shows the hardware qubits have reached a sufficient quality that putting more of them into a logical qubit has an exponential effect.

“As we increase the grid from three by three to five by five to seven by seven, the error rate is going down by a factor of two each time,” said Google’s Michael Newman. “And that’s that exponential error suppression that we want.”
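The scaling Newman describes can be sketched in a few lines. This is an illustrative model only: it takes the "factor of two each time" from the quote as a fixed suppression factor and reports error rates relative to the distance-3 code, since the article gives no absolute numbers. The function and parameter names are hypothetical.

```python
def relative_logical_error(distance: int,
                           suppression_factor: float = 2.0,
                           base_distance: int = 3) -> float:
    """Logical error rate relative to the base-distance code.

    Assumption: every increase of 2 in distance divides the error rate
    by a constant suppression_factor (2.0, per the quote above).
    """
    if distance < base_distance or (distance - base_distance) % 2:
        raise ValueError("distance must be base_distance plus an even step")
    steps = (distance - base_distance) // 2
    return suppression_factor ** (-steps)


for d in (3, 5, 7):
    print(f"distance {d}: {relative_logical_error(d):.2f}x the distance-3 error rate")
```

Because the improvement multiplies at every step instead of adding, a linear increase in distance produces an exponential drop in errors.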

Going big

The second thing they demonstrated is that, if you make the largest logical qubit that the hardware can support, with a distance of 15, it’s possible to hang onto the quantum information for an average of an hour. This is striking because Google’s earlier work had found that its processors experience widespread simultaneous errors that the team ascribed to cosmic ray impacts. (IBM, however, has indicated it doesn’t see anything similar, so it’s not clear whether this diagnosis is correct.) Those happened every 10 seconds or so. But this work shows that a sufficiently large error code can correct for these events, whatever their cause.

That said, these qubits don’t survive indefinitely, and two kinds of events limit their lifetime. One seems to be a localized temporary increase in errors. The second, more difficult problem involves a widespread spike in error detection affecting an area that includes roughly 30 qubits. At this point, however, Google has only seen six of these events, so the company told Ars that it’s difficult to really characterize them. “It’s so rare it actually starts to become a bit challenging to study because you have to gain a lot of statistics to even see those events at all,” said Kelly.

Beyond the relative durability of these logical qubits, the paper notes another advantage to going with larger code distances: it enhances the impact of further hardware improvements. Google estimates that at a distance of 15, improving hardware performance by a factor of two would drop errors in the logical qubit by a factor of 250. At a distance of 27, the same hardware improvement would lead to an improvement of over 10,000 in the logical qubit’s performance.
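Those figures are roughly what you would expect from the commonly used surface code scaling, in which the logical error rate goes as the hardware error rate raised to the power (d + 1)/2. The article does not state which formula Google used, so the sketch below is an assumption, and the function name is hypothetical.

```python
def logical_gain(distance: int, hardware_improvement: float = 2.0) -> float:
    """Factor by which logical errors drop for a given hardware improvement.

    Assumption: logical error rate scales as (hardware error) ** ((d + 1) / 2),
    the usual textbook scaling for an odd-distance surface code.
    """
    if distance < 3 or distance % 2 == 0:
        raise ValueError("distance must be an odd integer >= 3")
    exponent = (distance + 1) // 2
    return hardware_improvement ** exponent


for d in (15, 27):
    print(f"distance {d}: 2x better hardware -> {logical_gain(d):,.0f}x fewer logical errors")
```

Under this assumption, distance 15 gives 2⁸ = 256, close to the quoted factor of 250, and distance 27 gives 2¹⁴ = 16,384, consistent with "over 10,000."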

Note that none of this will ever get the error rate to zero. Instead, we just need to get the error rate to a level where an error is unlikely for a given calculation (more complex calculations will require a lower error rate). “It’s worth understanding that there’s always going to be some type of error floor and you just have to push it low enough to the point where it practically is irrelevant,” Kelly said. “So for example, we could get hit by an asteroid and the entire Earth could explode and that would be a correlated error that our quantum computer is not currently built to be robust to.”
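One way to see why longer calculations need lower error rates is a simple independence model: if each error correction cycle fails with some small probability, the chance of finishing cleanly shrinks with every added cycle. The sketch below assumes errors are independent from cycle to cycle, which is a simplification (the correlated events Kelly describes break it). The cycle counts and the 99 percent target are made-up illustrative values, not figures from the paper.

```python
def success_probability(error_per_cycle: float, cycles: int) -> float:
    """Chance that no logical error occurs over the whole calculation.

    Assumption: errors in different cycles are independent.
    """
    return (1.0 - error_per_cycle) ** cycles


def max_error_per_cycle(target_success: float, cycles: int) -> float:
    """Largest per-cycle error rate that still meets the success target."""
    return 1.0 - target_success ** (1.0 / cycles)


# Hypothetical calculation lengths, purely for illustration.
for cycles in (10**3, 10**6, 10**9):
    limit = max_error_per_cycle(0.99, cycles)
    print(f"{cycles:>13,} cycles: error per cycle must stay below {limit:.2e}")
```

The tolerable error rate falls roughly in proportion to the length of the calculation, which is why the floor only has to be pushed low enough for the job at hand, not to zero.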

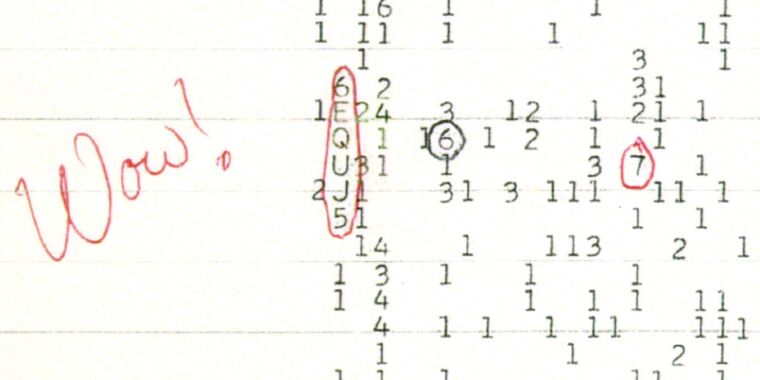

Obviously, a lot of additional work will need to be done to both make logical qubits like this survive for even longer, and to ensure we have the hardware to host enough logical qubits to perform calculations. But the exponential improvements here, to Google, suggest that there’s nothing obvious standing in the way of that. “We woke up one morning and we kind of got these results and we were like, wow, this is going to work,” Newman said. “This is really it.”

Nature, 2024. DOI: 10.1038/s41586-024-08449-y

John is Ars Technica’s science editor. He has a Bachelor of Arts in Biochemistry from Columbia University, and a Ph.D. in Molecular and Cell Biology from the University of California, Berkeley. When physically separated from his keyboard, he tends to seek out a bicycle, or a scenic location for communing with his hiking boots.
