What ice fishing can teach us about making foraging decisions

Ice fishing is a longstanding tradition in Nordic countries, with competitions proving especially popular. Those competitions can also tell scientists something about how social cues influence our foraging decisions, according to a new paper published in the journal Science.

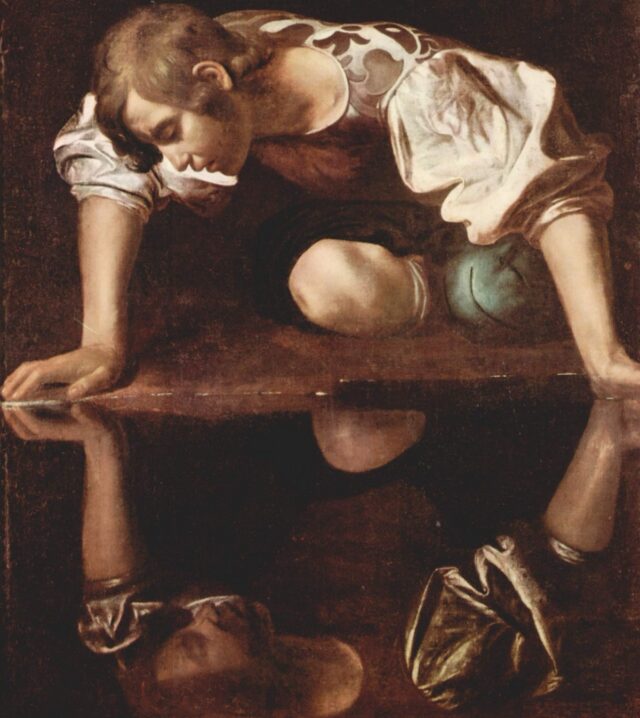

Humans are natural foragers in even the most extreme habitats, digging up tubers in the tropics, gathering mushrooms, picking berries, hunting seals in the Arctic, and fishing to meet our dietary needs. Human foraging is sufficiently complex that scientists believe that meeting so many diverse challenges helped our species develop memory, navigational abilities, social learning skills, and similar advanced cognitive functions.

Researchers are interested in how social cues shape foraging decisions not just because the answer could help refine existing theories of social decision-making, but also because it could improve predictions about how different groups of humans might respond and adapt to changes in their environment. Per the authors, prior research in this area has tended to focus on solitary foragers operating in a social vacuum. And even when social foraging decisions are studied, the work is typically done using computational modeling and/or in the laboratory.

“We wanted to get out of the lab,” said co-author Ralf Kurvers of the Max Planck Institute for Human Development and TU Berlin. “The methods commonly used in cognitive psychology are difficult to scale to large, real-world social contexts. Instead, we took inspiration from studies of animal collective behavior, which routinely use cameras to automatically record behavior and GPS to provide continuous movement data for large groups of animals.”

Kurvers et al. organized 10 three-hour ice-fishing competitions on 10 lakes in eastern Finland for their study, with 74 experienced ice fishers participating. Each ice fisher wore a GPS tracker and a head-mounted camera so that the researchers could capture real-time data on their movements, interactions, and how successful they were in their fishing attempts. All told, they recorded over 16,000 individual decisions specifically about location choice and when to change locations. That data was then compared to the team’s computational cognitive models and agent-based simulations.
