What climate targets? Top fossil fuel producing nations keep boosting output

Top producers are planning to mine and drill even more of the fuels in 2030.

Machinery transfers coal at a port in China’s Chongqing municipality on April 20. Credit: STR/AFP via Getty Images

The last two years have brought the hottest year on record, some of the worst wildfire seasons across Canada, Europe and South America, and deadly flooding and heat waves around the globe. Over that same period, the world’s largest fossil fuel producers have expanded their planned output, setting humanity on an even more dangerous path toward a warmer climate.

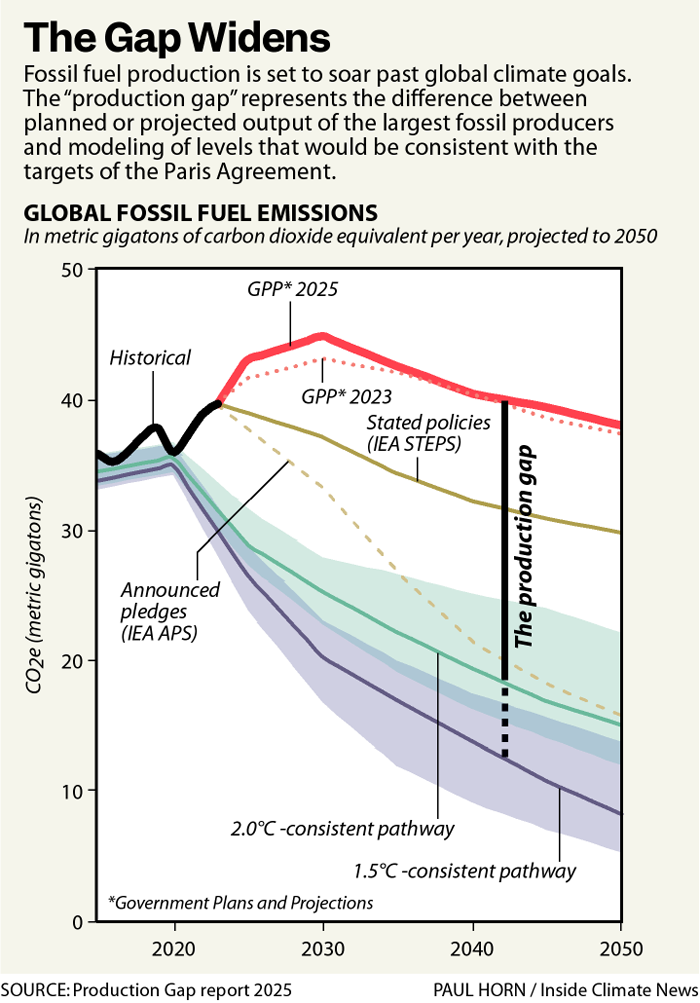

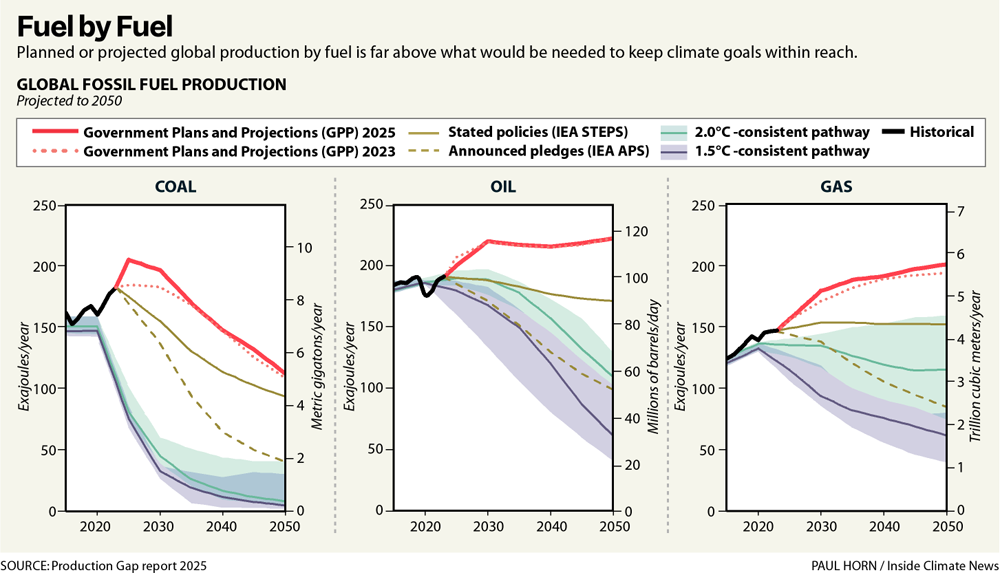

Governments now expect to produce more than twice as much coal, oil and gas in 2030 as would be consistent with the goals of the Paris Agreement, according to a report released Monday. That level is slightly higher than what it was in 2023, the last time the biennial Production Gap report was published.

The increase is driven by a slower projected phaseout of coal and higher outlook for gas production by some of the top producers, including China and the United States.

“The Production Gap Report has long served as a mirror held up to the world, revealing the stark gap between fossil fuel production plans and international climate goals,” said Christiana Figueres, former executive secretary of the United Nations Framework Convention on Climate Change, in a foreword to the report. “This year’s findings are especially alarming. Despite record climate impacts, a winning economic case for renewables, and strong societal appetite for action, governments continue to expand fossil fuel production beyond what the climate can withstand.”

The peer-reviewed report, written by researchers at the Stockholm Environment Institute, Climate Analytics and the International Institute for Sustainable Development, aims to focus attention on the supply side of the climate equation and the government policies that encourage or steer fossil fuel production.

“Governments have such a significant role in setting up the rules of the game,” said Neil Grant, a senior expert at Climate Analytics and one of the authors, in a briefing for reporters. “What this report shows is most governments are not using that influence for good.”

Credit: Inside Climate News

The report’s blaring message is that these subsidies, tax incentives, permitting and other policies have largely failed to adapt to the climate targets nations have adopted. The result is a split screen. Governments say they will cut their own climate-warming pollution, yet they plan to continue producing the fossil fuels that are driving that pollution far beyond what their climate targets would allow.

The report singles out the United States as “the starkest case of a country recommitting to fossil fuels.” The data for the United States, which draws on the latest projections of the US Energy Information Administration, does not reflect most of the policies the Trump administration and Congress have put in place this year to promote fossil fuels.

Since January, Congress has enacted billions of dollars in new subsidies to oil and gas companies while the Trump administration has forced retiring coal plants to continue operating, expanded mining and drilling access on public lands, delayed deadlines for drillers to comply with limits on methane pollution and fast-tracked fossil fuel permitting while setting roadblocks for building wind and solar energy projects.

In response to the report, White House spokesperson Taylor Rogers said in an email, “As promised, President Trump ended Joe Biden’s war on American energy and unleashed American energy on day one in the best interest of our country’s economic and national security. He will continue to restore American’s energy dominance.”

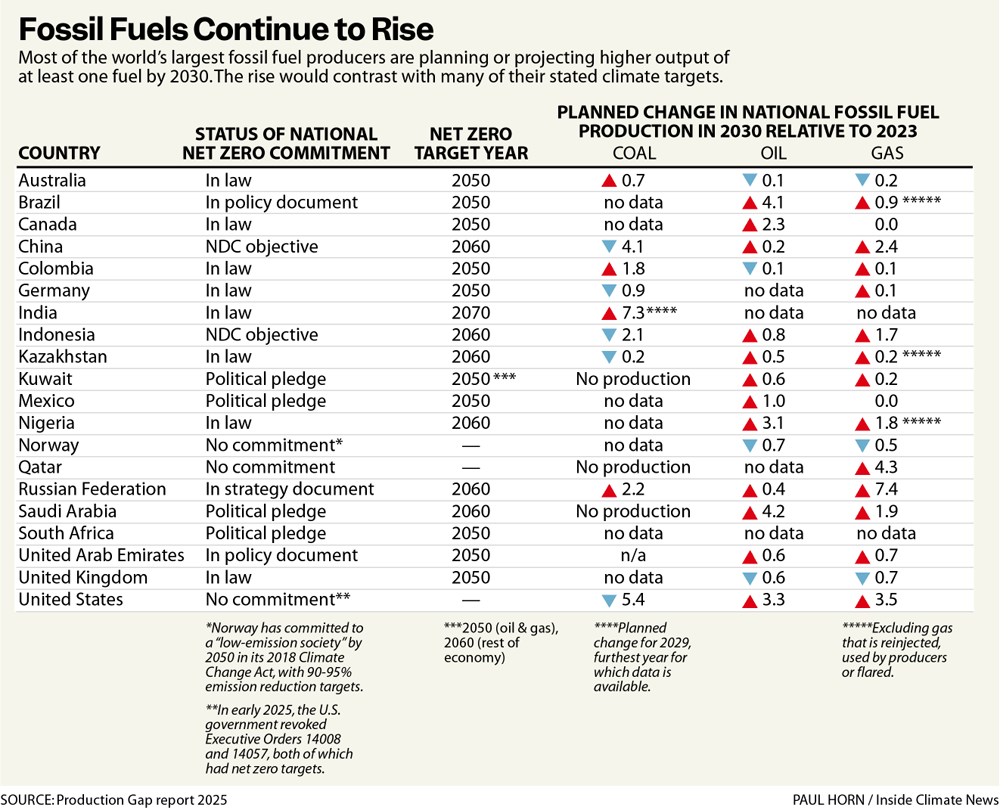

The Production Gap report assessed the government plans or projections of 20 of the world’s top producers. Some have state-owned enterprises while others are dominated by publicly listed companies. The countries, which were chosen for their production levels, availability of data and presence of clear climate targets, account for more than 80 percent of global fossil fuel output. The report models total global production by scaling the data up to account for the rest.

All but three of the 20 nations are planning or projecting increased production in 2030 of at least one fossil fuel. Eleven now project higher production of at least one fuel in 2030 than they did two years ago.

Expected global output of coal, oil, and gas for 2030 is now 120 percent more than what would be consistent with pathways to limit warming to 1.5 degrees Celsius (2.7 degrees Fahrenheit) and 77 percent higher than scenarios to keep warming to less than 2 degrees Celsius (3.6 degrees Fahrenheit). The greater the warming, the more severe the extreme weather, sea level rise and other impacts will be.
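For readers who want to check how "120 percent more" squares with "more than twice as much," the arithmetic can be sketched in a few lines of Python. This is an illustration only, not the report's model: the function names are hypothetical, and the "implied cut" is plain arithmetic on the two percentages quoted above, not a figure the report states.

```python
def excess_to_ratio(percent_above: float) -> float:
    """Turn 'X percent more than a benchmark' into a multiple of that benchmark."""
    return 1 + percent_above / 100


def implied_cut(percent_above: float) -> float:
    """Share of planned output that would have to be dropped to match the benchmark.

    Illustrative arithmetic only; this is not a number taken from the report.
    """
    return 1 - 1 / excess_to_ratio(percent_above)


# Percentages quoted in the article for planned 2030 output.
for label, pct in [("1.5 C pathways", 120), ("2 C scenarios", 77)]:
    ratio = excess_to_ratio(pct)
    cut = implied_cut(pct)
    print(f"{label}: {ratio:.2f} times the benchmark, implied cut of about {cut:.0%}")
```

Read this way, output that is 120 percent above a benchmark is 2.2 times that benchmark, which is why the report can describe planned production as more than double the Paris-consistent level.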

While previous installments of the report were published under the auspices of the United Nations Environment Program, this year’s version was issued independently.

In a sign of the world’s continuing failure to limit fossil fuel use, the modeling scenarios the report uses are becoming obsolete. Because nations have continued to burn more coal, gas and oil every year, future cuts would now need to be even steeper than what is reflected in the report to keep climate targets within reach.

“We’re already going into sort of the red and burning up our debt,” Grant said.

Three nations alone (China, the United States and Russia) were responsible for more than half of “extraction-based” emissions in 2022, meaning the pollution released when the fossil fuels a country extracts are burned.

Ira Joseph, a senior research associate at the Center on Global Energy Policy at Columbia University, who was not involved in the report, said its focus on supply highlights an important part of understanding global energy markets.

“Any type of tax breaks or subsidies or however you want to call them lowers the break-even cost for producing oil and gas,” Joseph said. Lower costs mean more supply, which in turn lowers prices and spurs more demand. The projections and plans the report is based on, Joseph said, reflect this global give and take.

The biggest changes since the last report come from a slower projected decline in China’s coal mining and faster expected growth in gas production in the United States. Smaller producers are also expecting sharper increases in gas output.

The report did highlight some bright spots. Two additional governments, Brazil and Colombia, are developing plans that would align fossil fuel production with climate goals, bringing the total to six out of the 20. Germany now expects a faster phaseout of coal production. China is speeding its deployment of wind and solar energy. Some countries have also reduced subsidies for fossil fuels.

Yet these measures clearly fall far short, the report said.

The authors called on governments to coordinate their policies and plan for how they can collectively lower production in a way that keeps climate targets within reach without shocking the economies that depend on the jobs and revenue provided by mining, drilling, and processing the fuels. They pointed to a handful of efforts—called Just Energy Transition Partnerships—to provide financing from wealthy countries to support phasing out coal in developing or emerging economies. These programs have struggled to mobilize much money, however, and the Trump administration has withdrawn the United States from them.

Grant said the policies indicate that government officials are failing to adapt to a more uncertain future.

“Change doesn’t happen in straight lines, but I think if you look at the Production Gap report this year, what you see is that many governments are still thinking in straight lines,” Grant said.

The policies the team examined foresee fossil fuel use remaining steady or declining gradually. The result, Grant argued, could be one of two scenarios: Either fossil fuel use remains high for years, in line with these production plans, or it declines more quickly and governments are unprepared for the sudden drop in sales.

“Those would lead to either climate chaos or significant negative economic impacts on countries,” Grant said. “So we need to try to avoid both of those. And the way to do that is to try to align our fossil fuel production plans with our climate goals.”

This story originally appeared on Inside Climate News.
