Idaho has become the wild frontier of vaccination policy and public health

Idaho charts a new path as trust in public health craters.

Some 280,000 people live in the five northernmost counties of Idaho. One of the key public officials responsible for their health is Thomas Fletcher, a retired radiologist who lives on a 160-acre farm near Sandpoint.

Fletcher grew up in Texas and moved to Idaho in 2016, looking for a place where he could live a rural life alongside like-minded conservatives. In 2022, he joined the seven-member board of health of the Panhandle Health District, the regional public health authority, and he was appointed chairman last summer.

PHD handles everything from cancer screenings to restaurant hygiene inspections, and the business of the board is often mundane, almost invisible. Then, this February, Fletcher issued a short announcement online. Parents, he wrote, should be informed of the potential harms of common childhood vaccines. It was time for the board to discuss how best to communicate those risks, rather than “withholding information contra the CDC narrative.” Fletcher invited everyone who believes in “full disclosure and transparency when providing informed consent on childhood vaccines” to attend the next monthly meeting of the board, on a Thursday afternoon.

PHD board meetings tend to be sparsely attended. This one was standing-room only—the start of a monthslong debate over vaccine safety and the question of what, exactly, it means to provide informed consent.

Versions of that debate are playing out across the United States in the aftermath of the COVID-19 pandemic, which many Americans believe was badly mismanaged. The backlash has upended longstanding norms in public health: The nation’s top health official, Robert F. Kennedy Jr., publicly questions the value of common vaccines. Prominent vaccine skeptics now sit on a key advisory committee that shapes immunization practices nationwide. Polls suggest that trust in health authorities is politically polarized, and perhaps historically low. Immunization rates are dropping across the country. And many advocates are promoting a vision of public health that’s less dependent on mandates and appeals to authority, and more deferential to individuals’ beliefs.

Much of that energy has been reflected in Kennedy’s Make America Healthy Again, or MAHA, movement. The coalition is diverse, and has sometimes fractured over vaccination issues, but it often channels a long-running argument that Americans should have more freedom to choose or reject vaccines and other health measures.

The backlash against traditional health authorities, said Columbia University medical historian James Colgrove, is unprecedented in recent US history. “It’s been a very, very long time since we’ve been in a place like this,” he said.

Perhaps more than anywhere else in the country, Idaho has experienced these shifts—an ongoing experiment that shows what it looks like to put a vision of individual health freedom into practice. And places like the Panhandle Health District have become testing grounds for big questions: What happens when communities move away from widespread and mandated vaccination? And what does it mean to turn MAHA principles into local public health policy?

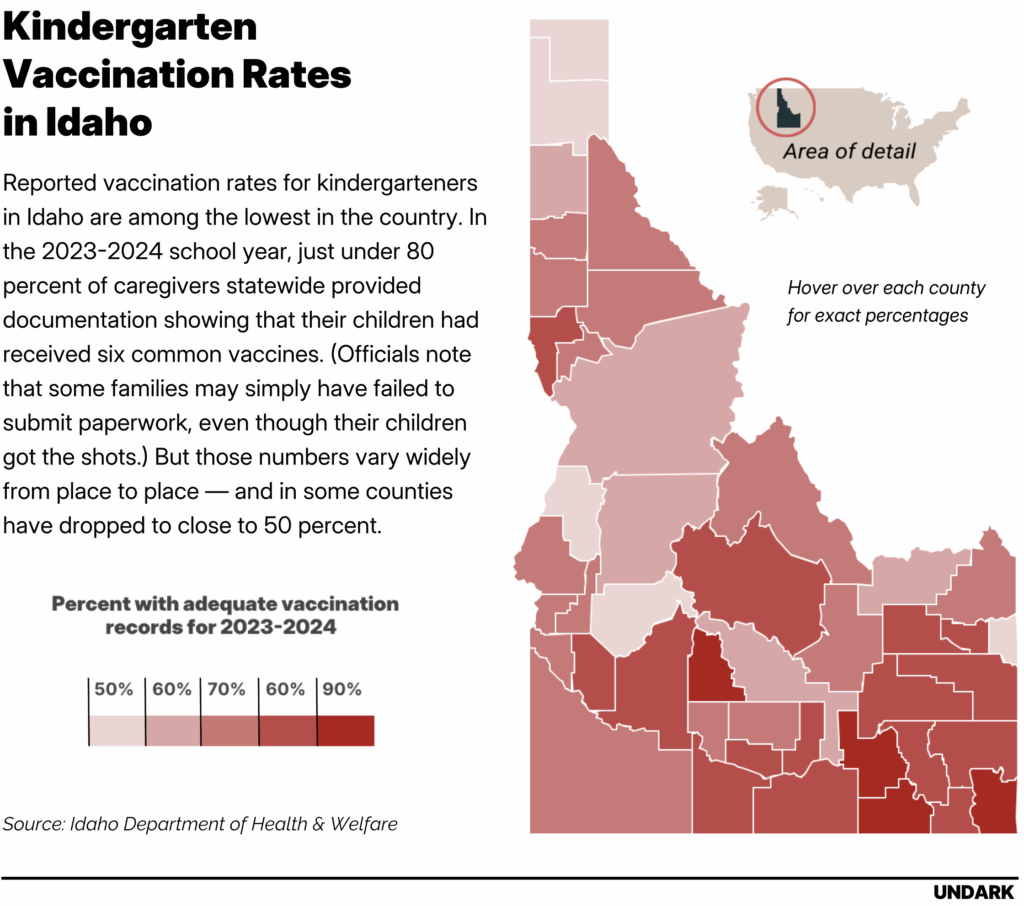

During a recent visit to Idaho, Kennedy described the state as “the home of medical freedom.” In April, Gov. Brad Little signed the Idaho Medical Freedom Act, which bans schools, businesses, and government agencies from requiring people to participate in medical interventions, such as mask-wearing or vaccination, in order to receive services. It’s the first legislation of its kind in the country. The bill has a carveout that keeps school vaccine requirements in place, but those requirements are already mostly symbolic: The state’s exemption policy is so broad that, as one Idaho pediatrician told Undark, “you can write on a napkin, ‘I don’t want my kids to get shots because of philosophical reasons,’ and they can go to kindergarten.” Overall, reported vaccination rates for kindergarteners in Idaho are now lower than in any other state that reported data to the federal government—especially in the Panhandle Health District, where fewer than two-thirds arrive with records showing that they are up-to-date on common shots.

“It’s really kind of like watching a car accident in slow motion,” said Ted Epperly, a physician and the CEO of Full Circle Health, which operates a network of clinics in the Boise area.

A view of Sandpoint, Idaho, which sits on the shores of Lake Pend Oreille. The city, a part of Bonner County, is served by the Panhandle Health District. Credit: Kirk Fisher/iStock/Getty Images Plus

Public health leaders often ascribe the low vaccination rates to the work of bad-faith actors who profit from falsehoods, to the spread of misinformation, or to failures of communication: If only leaders could better explain the benefits of vaccination, this thinking goes, more people would get shots.

In interviews and public statements, health freedom advocates in Idaho describe a far deeper rift: They do not believe that public health institutions are competent or trustworthy. And restoring that trust, they argue, will require radical changes.

Fletcher, for his part, describes himself as an admirer of RFK Jr. and the Make America Healthy Again movement. With the recent appointment of a new member, he said, MAHA supporters now hold a majority on the board, where they are poised to reimagine public health work in the district.

Local public health

In the US, public health is mostly local. Agencies like the Centers for Disease Control and Prevention conduct research and issue influential recommendations. But much of the actual power rests with the country’s thousands of state, local, and tribal public health authorities—with institutions, in other words, like the Panhandle Health District, and with leaders like Fletcher and his fellow PHD board of health member Duke Johnson.

Johnson says he grew up in Coeur d’Alene, Idaho, in the 1960s, the descendant of homesteaders who arrived in the 19th century. He attended medical school at the University of California, Los Angeles and eventually returned to Idaho, where he runs a family medical practice and dietary supplement business in the town of Hayden.

In Idaho, health boards are appointed by elected county commissioners. The commissioners of Kootenai County gave Johnson the nod in July 2023. Johnson took the role, he said, in order to restore trust in a medical system that he characterized as beholden to rigid dogmas and protocols rather than independent thinking.

Last winter, Johnson took a tour of one of the PHD clinics. Among other services, it provides routine childhood immunizations, especially for families with limited access to health care. As is standard in pediatrics practices, the clinic hands out flyers from the CDC that review the potential side effects of common vaccines, including “a very remote chance” of severe outcomes. Johnson was unimpressed with the CDC writeup. “I thought: This isn’t completely covering all of the risk-benefit ratio,” Johnson said. He felt families could be better informed about what he sees as the substantial risks of common shots.

Johnson is an outlier among physicians. The overwhelming majority of laboratory scientists, epidemiologists, and pediatricians who have devoted their lives to the study of childhood disease say that routine immunizations are beneficial, and that serious side effects are rare. Large-scale studies have repeatedly failed to find purported links between the measles-mumps-rubella, or MMR, vaccine and autism, or to identify high rates of severe side effects for other routine childhood immunizations. The introduction of mass vaccinations in the US in the 1950s and 1960s was followed by dramatic declines in the rates of childhood diseases like polio and measles that once killed hundreds of American children each year, and sent tens of thousands more to the hospital. Similar declines have been recorded around the world.

Children can suffer side effects from common shots like the MMR vaccine, ranging from mild symptoms like a rash or fever to rare fatal complications. Public health agencies and vaccine manufacturers study and track those side effects. But today, many Americans simply do not trust that those institutions are being transparent about the risks of vaccination.

Johnson shares some of those concerns. The website for his clinic, Heart of Hope Health, describes offering services for “injection-injured” patients, encouraging them to receive a $449 heart scan, and advertises “no forced masks or vaccinations.” (During a PHD board meeting, Johnson said that one of his own children suffered an apparent bad reaction to a vaccine many years ago.) “The lack of trust in established medicine is probably 10 times bigger than the people at Harvard Medical School realize,” Johnson told Undark during an evening phone call, after a long day seeing patients. Top medical institutions have brilliant scientists on staff, he continued. But, he suggested, those experts have lost touch with how they’re seen by much of the public: “I think sometimes you can spend so much time talking to the same people who agree with you that you’re not reaching the people on the street who are the ones who need the care. And I’m in the trenches.”

Many public health experts agree that restoring trust is an urgent priority, and they are convinced that it will come through better communication, a reduction in the circulation of misinformation, and a rebuilding of relationships. Johnson and others in the health freedom movement frequently adopt the language of restoring trust, too. But for them, the process tends to mean something different: an overhaul of public health institutions and a frank accounting of their perceived failures.

At the board meeting in February, Johnson laid out the proposal for a change in policy: What if the board wrote up its own document for parents, explaining the evidence behind specific vaccines, and laying out the risks and benefits of the shots? The goal, he told Undark, was “to make sure that the people that we’re responsible for in our district can make an informed decision.”

Fletcher was also hoping to change the way PHD communicated about vaccines. Why did a push for informed consent appeal to him? “I can summarize the answer to that question with one word,” Fletcher said. “COVID.”

Nobody’s telling me what to do

Idaho is ideologically diverse, with blue pockets in cities like Boise, and texture to its overwhelming Republican majority. (Latter-day Saint conservatives in East Idaho, for example, may not always be aligned with government-skeptical activists clustered in the north.) Parts of the state have a reputation for libertarian politics and for resistance to perceived excesses of government authority.

People came West because “they wanted to get out to a place where nobody would tell them what to do,” said Epperly, the Boise-area physician and administrator. That libertarian ethos, he said, can sometimes translate into a skepticism of things like school vaccination requirements, even as plenty of Idahoans, including Epperly, embrace them.

Like all US states, Idaho technically requires vaccination for children to attend school. But it is relatively easy to opt out of the requirement. In 2021, Idaho lawmakers went further, instructing schools to be proactive and notify parents they had the option to claim an exemption.

“Idaho has some of the strongest languages in the US when it comes to parental rights and vaccine exemptions,” the vaccine-skeptical advocacy group Health Freedom Idaho wrote in 2021. In the 2024–2025 school year, more than 15 percent of kindergarten parents in the state claimed a non-medical exemption, the highest percentage, by far, of the states that reported data.

The pandemic, Epperly and other Idaho health care practitioners said, accelerated many of these trends. In his view, much of that backlash was about authority and control. “The pandemic acted as a catalyst to increase this sense of governmental overreach, if you will,” he said. The thinking, he added, was: “‘How dare the federal government mandate that we wear masks, that we socially distance, that we hand-wash?’”

Recently, advocates have pushed to remove medical mandates in the state altogether through the Idaho Medical Freedom Act, which curtails the ability of local governments, businesses, and schools to impose things like mask mandates or vaccine requirements.

The author of the original bill is Leslie Manookian, an Idaho activist who has campaigned against what she describes as the pervasive dangers of some vaccines, and who leads a national nonprofit, the Health Freedom Defense Fund. In testimony to an Idaho state Senate committee this February, she described feeling shocked by mitigation measures during the COVID-19 pandemic. “Growing up, I could have never, ever imagined that Idaho would become a place that locked its people down, forced citizens to cover their faces, stand on floor markers 6 feet apart, or produce proof of vaccination in order to enter a venue or a business,” Manookian told the senators.

Where some public health officials saw vital interventions for the public’s well-being, Manookian saw a form of government overreach, based on scant evidence. Her home state, she argued, could be a leader in building a post-COVID vision of public health. “Idaho wants to be the shining light on the Hill, that leads the way for the rest of the nation in understanding that we and we alone are sovereign over our bodies, and that our God-given rights belong to us and to no one else,” Manookian said during the hearing. A modified version of the bill passed both houses with large majorities, and became law in April.

Epperly, like many physicians and public health workers in the state, has watched these changes with concern. The family medicine specialist grew up in Idaho. During the pandemic, he was a prominent local figure advocating for masking and COVID-19 vaccinations. When the pandemic began, he had been serving on the board of the Boise-area Central District Health department for more than a decade. Then, in 2021, Ada County commissioners declined to renew his appointment, selecting a physician and vocal opponent of COVID-19 vaccines instead.

A transformative experience

For Thomas Fletcher, the Panhandle Health District board of health chair, the experience of the pandemic was transformative. Fletcher has strong political views; he moved away from Texas, in part, over concerns that the culture there was growing too liberal, and out of a desire to live in a place that was, as he put it, “more representative of America circa 1950.” But before the pandemic, he said, although he was a practicing physician, he rarely thought about public health.

Then COVID-19 arrived, and it felt to him that official messaging was disconnected from reality. In early 2020, the World Health Organization said that COVID-19 was not an airborne virus. (There’s a scientific consensus today that it actually is.) Prominent scientists argued that it was a conspiracy theory to say that COVID-19 emerged from a lab. (The issue is still hotly debated, but many scientists now acknowledge that a lab leak is a real possibility.) The World Health Organization appeared to indicate that the fatality rate of COVID-19 was upwards of 3 percent. (It’s far lower.)

Many people today understand these reversals as the results of miscommunications, evolving evidence, or good-faith scientific error. Fletcher came to believe that Anthony Fauci—a member of the White House Coronavirus Task Force during the pandemic—and other public health leaders were intentionally, maliciously misleading the public. Fletcher reads widely on the platform Substack, particularly writers who push against the medical establishment, and he concluded that COVID-19 vaccines were dangerous, too—a toxic substance pushed by pharma, and backed knowingly by the medical elite. “They lied to us,” he said.

That shift ultimately led the retired physician to question foundational ideas in his field. “Once you realize they’re lying to us, then you ask the question, ‘Well, where else are they lying?’” Fletcher said during one of several lengthy phone conversations with Undark. “I was a card-carrying allopathic physician,” he said. “I believed in the gospel.” But he soon began to question the evidence behind cholesterol medication, and then antidepressants, and then the childhood vaccination schedule.

In 2022, lawmakers in Bonner County appointed Fletcher to the board of health. Last year, he took the helm of the board, which oversees an approximately 90-person agency with a $12 million budget.

“As Chairman of Panhandle Health, I feel a certain urge to restore the trust—public trust in public health—because that trust has been violated,” he said.

The informed consent measure seemed like one way to get there.

Conversations around informed consent

On a February afternoon, in a conference room at the health district office in Hayden, a few dozen attendees and board members gathered to discuss vaccination policy and informed consent in the district.

During the lengthy public comment periods, members of the public spoke about their experiences with vaccination. One woman described witnessing the harms of diseases that have been suppressed by vaccination, noting that her mother has experienced weakness in her limbs as the result of a childhood polio infection. Several attendees reported firsthand encounters with what they understood to be vaccine side effects; one cited rising autism rates. They wanted parents to hear more about those possibilities before getting shots.

In response, some local pediatrics providers insisted they already facilitated informed consent, through detailed conversations with caregivers. They also stressed the importance of routine shots; one brought up the measles outbreak emerging in Texas, which would go on to be implicated in the deaths of two unvaccinated children.

Johnson, defending the measure, proposed a document that listed both pros and cons for vaccination. The PHD Board, he argued, “would have a much better chance of providing good information than the average person on the Internet.”

The conversation soon bogged down over what, exactly, the document should look like. “If the vote is yea or nay for informed consent, I’m all in with two hands,” said board member Jessica Jameson, an anesthesiologist who ultimately voted against the measure. “But my concern is that we have to be very careful about the information we present and the way that it’s presented.” The board members, she added, were neither “the subject matter experts nor the stakeholders,” and studies that seemed strong at first glance could be subject to critique.

Marty Williams, a nurse practitioner in Coeur d’Alene who works in pediatrics, had heard about the meeting that morning, as materials about the measure circulated online.

Williams is a former wildland firefighter, a father of five, and a Christian; he snowboards and bowhunts in his free time, and speaks with the laid-back affect of someone who has spent years coaching anxious parents through childhood scrapes and illnesses. A document associated with the proposal looked to him less like an attempt at informed consent, and more like a bid to talk parents out of giving their children immunizations. “If you read this, you would be like, ‘Well, I would never vaccinate my child,’” he recalled. “It was beyond informed consent. It seemed to be full of bias.”

He and his practice partner, Jeanna Padilla, canceled appointments in order to attend the meeting and speak during a public comment period. “The thought of it coming from our public health department made me sick,” Williams said. “We’re in the business of trying to prevent disease, and I had a strong feeling that this was going to bring more fear onto an already anxiety-provoking subject.” The issue felt high-stakes to him: That winter, he had seen more cases of pertussis, a vaccine-preventable illness, than at any point in his 18-year career.

Williams has always encountered some parents who are hesitant about vaccination. But those numbers began to rise during the COVID-19 pandemic. Trust in public health was dropping, and recommendations to vaccinate children against COVID-19, in particular, worried him. “Is this going to push people over the edge, where they just withdraw completely from vaccines?” he wondered at the time. Something did shift, he said: “We have families that historically have vaccinated their children, and now they have a new baby, and they’re like, ‘Nope, we’re not doing it. Nope, nope, nope.’”

Williams described a change in how he approaches parents in his practice. “I don’t say, ‘Well, you know, it’s time for Junior’s two-month shots. Here’s what we’re going to do.’ I don’t approach it that way anymore, because greater than 40 or 50 percent of people are going to say, ‘Well, no, I’m not doing vaccines.’ And they get defensive right away,” he said. Instead, he now opens up a conversation, asking families whether they’ve thought about vaccination, answering their questions, providing resources, and talking about his personal experiences treating illness. He even invites them to consider the vaccine schedules used in Denmark or Sweden, which recommend shots for fewer diseases, if they are adamant about not following CDC guidelines.

The approach can be effective, he said, but also time-consuming and draining. “It’s emotional for me too, because there’s a piece of this that [is] being questioned every single day in regards to the standard of care, as if you’re harming children,” he said.

Williams doubts his comments at the February meeting achieved much. “I was shocked by what I was hearing, because it was so one-sided,” he said. What seemed to be missing, he said, was an honest account of the alternatives: “There was no discussion of, OK, then, if we don’t vaccinate children, what is our option? How else are we going to protect them from diseases that our grandparents dealt with that we don’t have to deal with in this country?”

The board punted: They’d discuss the issue again down the road.

This isn’t new

Versions of this debate have played out across Idaho, and across the country, since the end of COVID-19’s emergency phase. In an apparent national first, one Idaho health district banned COVID-19 vaccines altogether. In Louisiana, Surgeon General Ralph Abraham told public health departments to stop recommending specific vaccines. “Government should admit the limitations of its role in people’s lives and pull back its tentacles from the practice of medicine,” Abraham and his deputy wrote in a statement explaining the decision. “The path to regaining public trust lies in acknowledging past missteps, refocusing on unbiased data collection, and providing transparent, balanced information for people to make their own health decisions.”

In several states, Republican lawmakers have moved to make it easier for people to opt out of vaccines. Not all those efforts have been successful: In West Virginia this past March, for example, the Republican-dominated legislature rejected a bill that would have made it easier to obtain exemptions. Keith Marple, a Republican lawmaker who voted against the measure, cited his personal experiences with people who had been left disabled by polio. “West Virginia needs to look after its children,” he said, according to the news site West Virginia Watch.

In Idaho, as in many states, vaccination rates have dropped. In the 2023–2024 school year, a bit more than 65 percent of kindergarten families in the Panhandle Health District furnished records showing that their children had received the MMR vaccine and five other common immunizations, down from just over 69 percent in the 2019–2020 school year. (State officials note that some children may have received shots, but their parents did not submit the paperwork to prove it.) Such figures, infectious disease modelers say, leave the area vulnerable to outbreaks of measles and other illnesses.

During an interview with Undark earlier this year, Sarah Leeds, who directs the immunization program for the Idaho Department of Health and Welfare, noted her colleagues across the country are reporting resistance to their work. “Sometimes it’s hard when you might be feeling like people think we’re the villain,” she said. “But I know our team and our leadership knows we do good work, and it’s based on sound science, and it’s important work for the community. And we just keep that at the front of our minds.”

When the board reconvened in early March, more advocates for the informed consent policy came out to back it. Among them was Rick Kirschner, a retired naturopathic doctor, author, and speaker. (His best-known book is titled “Dealing With People You Can’t Stand.”) Kirschner lived for decades in Ashland, Oregon. Early in 2020, he began to diverge from his neighbors over COVID-19 policies. He and his wife visited north Idaho that summer, and bought a home there weeks later. Compared to pandemic-conscious Oregon, it felt like a different reality. That Thanksgiving, he said during a recent Zoom interview, they attended a celebration “with 10 families and all their kids running around. It just was, ‘Oh, we’re Americans again.’ And it was just terrific.”

At the meeting in March, several people said that it was necessary to restore trust in public health institutions. But what, exactly, did that mean? Kirschner argued that it required more information, including more detailed accountings of all the ways public health interventions like vaccination could cause harm, and more detail on where the scientific literature falls short. “Denying information risks backfiring when risks that were hidden become known and trust in authorities craters,” he said during the hearing.

“I find that people are smarter than these public health people give them credit for,” he said during his call with Undark. There was a tendency in public health, he felt, to treat people like cattle. “The mindset of public health is, ‘They’re dummies, and we need to direct them to what we think is in their interest,’” he said.

Others at the meeting pushed back against suggestions that public health workers and clinicians were not already providing detailed information to patients. “It’s not like Panhandle Health is against informed consent, or does not have that as part of the process,” said Peggy Cuvala, a member of the board. Cuvala has personal experience with the issue: She spent more than three decades as a public health nurse and nurse practitioner with the Panhandle Health District. “I would never force anyone into vaccination,” she said in a phone interview.

Cuvala is well aware that vaccine side effects happen—one of her own children, she said, suffered an adverse reaction to a shot—but she’s also seen transformative benefits. For years, she had to fill out reports on cases of Haemophilus influenzae that had caused meningitis in young children, including one case in which an infant died. Then a vaccine arrived. “Within a year of that vaccine coming out, I didn’t have to do those reports anymore,” she told Undark.

Cuvala describes herself as feeling perplexed by the recent direction of the board. “I think protecting and promoting the health and well being of the residents in North Idaho is critical,” she wrote in an email. “This work should be directed by the board collectively without political bias.”

During the meeting, legal questions came up, too: What were the liability implications of drawing up a custom PHD vaccine safety document?

In a previous meeting, Fletcher had pushed for a document that just gave basic details on the duration and scope of the randomized controlled trials that common vaccines had been subjected to. Such information, he argued, would demonstrate how poorly vetted the shots were—and show how they could be dangerous, even fatal. After that, he said in an interview, it was the parent’s choice. “If some mom wants her kid to get it, fine, give it to him,” Fletcher said. The ultimate arbiter of who was correct would be the brutal process of natural selection: “Let Darwin figure it out.”

In the March meeting, the board voted against creating a subcommittee to explore how to draft the document. “It’s dead,” said Fletcher during a phone call in early May.

A matter of trust

The discussion around the informed consent measure, though, was not entirely gone. On a Saturday morning in early May, the board held a lengthy public planning session at a government building in Coeur d’Alene. During a visioning session, attendees put stickers on pieces of paper next to words describing opportunities for the district. At the bottom of the page, someone wrote, in large, all-caps: “TRUST.”

Kirschner spoke again at the meeting, urging the board to revive the measure. So did a handful of other attendees, including Ron Korn, a county commissioner.

In a short interview at the meeting, PHD spokesperson Katherine Hoyer expressed some uncertainty about what substantive differences, precisely, the measure would offer over what’s already taking place in clinics. “What they’re proposing is that we provide patients with information on medical practices and vaccines,” she said. “That is happening.”

Fletcher sees opportunities ahead. In July, the board unanimously reelected him as chair. And, he said, he has a new ally in the push for an informed consent policy. Jessica Jameson, one of the board members who opposed the measure, recently resigned. Fletcher described her successor, a naturopathic doctor who was appointed to the board last month, as aligned with the MAHA movement. That brings the total MAHA-aligned members, by his count, to four — securing a majority on the seven-member board. “My plan is unfolding just as I wanted,” he said during a call in late July.

During an earlier conversation, Fletcher had reflected on the strange position of RFK Jr., who is perched atop the Department of Health and Human Services, which is staffed by many of the people he spent his career opposing. “He has hundreds of thousands of employees; 99.99 percent of them think he’s full of shit,” Fletcher said. Fletcher, in some ways, has his own miniature version of that problem: An antagonist of institutional public health, overseeing a public health organization.

The precise informed consent measure, he acknowledged, may not come to pass. But the debate itself has merit, he said: “Even if we lose, whatever lose means, even if we don’t make any positive forward motion — you never know. Every time you talk about this, you maybe change someone’s sentiment. You maybe move things forward a little bit. Which is why I do it.”

Fletcher’s role is small. But, he suggested, added together, the cumulative efforts of local politicking could amount to a revolution. “Robert Kennedy needs as many people putting their oar in the water and stroking in the same direction,” Fletcher said. “He can’t do it alone. So if there are 10,000 Thomas Fletchers out there, all going in the same direction, then maybe we can have hope.”

Rajah Bose contributed reporting from Idaho.

This article was originally published on Undark. Read the original article.
