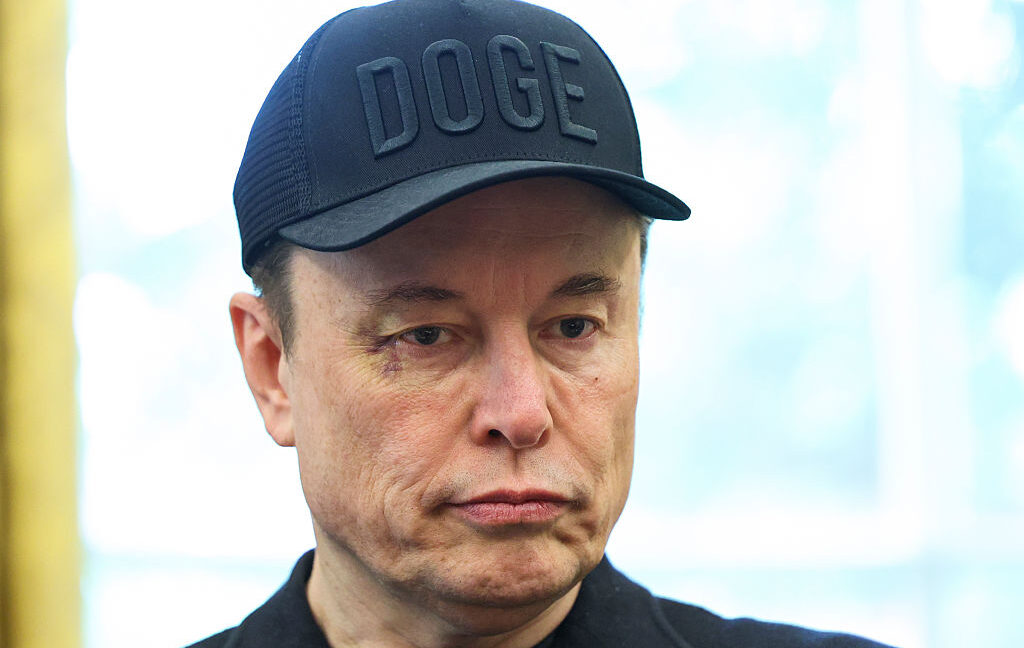

Judge gives Musk bad news, says Trump hasn’t intervened to block SEC lawsuit

Now, Musk may be running out of arguments after U.S. District Judge Sparkle Sooknanan shot down his First Amendment claims, along with his other arguments attacking the statute as unconstitutionally vague.

Whether Musk can defeat the SEC lawsuit without Trump's intervention remains to be seen as the case advances. In her opinion, the judge found that the government's interest in requiring disclosures to ensure fair markets outweighed Musk's concern that the disclosures compelled speech revealing his "thoughts" and "strategy." Accepting Musk's arguments would be an "odd" way to break "new ground," she suggested, because doing so could foreseeably affect a wide range of laws.

"Many laws require regulated parties to state or explain their purposes, plans, or intentions," Sooknanan wrote, noting that courts have long upheld such laws. She added that it seemed to be "common sense" for the SEC to compel disclosures "alerting the investing public to potential changes in control."

"The Court does not doubt that Mr. Musk would prefer to avoid having to disclose information that might raise stock prices while he makes a play for corporate control," Sooknanan wrote. But there was no First Amendment violation, she said, because Congress struck the appropriate balance when it wrote the statute requiring the disclosures.

Musk may be able to develop his selective-enforcement arguments into a possible path to victory. But Sooknanan noted that, "despite having very able counsel," he has so far made a weak case.

In her opinion, Sooknanan also denied as premature Musk's motions to strike the SEC's requests for disgorgement and injunctive relief from the potential remedies.

In news likely to trouble Musk, the judge, rather than balking at the size of the potential penalty, suggested that "the SEC's request to disgorge $150 million" appeared reasonable. That amount, while larger than in past cases Musk flagged, "corresponds to the Complaint's allegation" that Musk's violation of SEC requirements "allowed him to net that amount," Sooknanan wrote.

“A straightforward application of the law reveals that none” of Musk’s arguments “warrant dismissal of this lawsuit,” Sooknanan said.

Judge gives Musk bad news, says Trump hasn’t intervened to block SEC lawsuit Read More »